diff --git a/.github/workflows/deepeval-results.yml b/.github/workflows/deepeval-results.yml

index 0e0a2ae86..865cff5b7 100644

--- a/.github/workflows/deepeval-results.yml

+++ b/.github/workflows/deepeval-results.yml

@@ -37,9 +37,6 @@ jobs:

if: steps.cached-poetry-dependencies.outputs.cache-hit != 'true'

run: poetry install --no-interaction

- - name: Run tests without pytest

- run: poetry run python tests/test_without_pytest.py

-

- name: Run deepeval tests and capture output

run: poetry run deepeval test run tests/test_quickstart.py > output.txt 2>&1

diff --git a/.github/workflows/test.yml b/.github/workflows/test.yml

index 14dd22dbb..381a8c2e0 100644

--- a/.github/workflows/test.yml

+++ b/.github/workflows/test.yml

@@ -65,4 +65,4 @@ jobs:

env:

OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

run: |

- poetry run pytest tests/ --ignore=tests/test_llm_metric.py --ignore=tests/test_overall_score.py

+ poetry run pytest tests/ --ignore=tests/test_llm_metric.py

diff --git a/README.md b/README.md

index 2f71d3678..d07f3f891 100644

--- a/README.md

+++ b/README.md

@@ -73,7 +73,7 @@ from deepeval.evaluator import assert_test

def test_case():

input = "What if these shoes don't fit?"

- context = "All customers are eligible for a 30 day full refund at no extra costs."

+ context = ["All customers are eligible for a 30 day full refund at no extra costs."]

# Replace this with the actual output from your LLM application

actual_output = "We offer a 30-day full refund at no extra costs."

@@ -118,7 +118,7 @@ deepeval test run test_chatbot.py

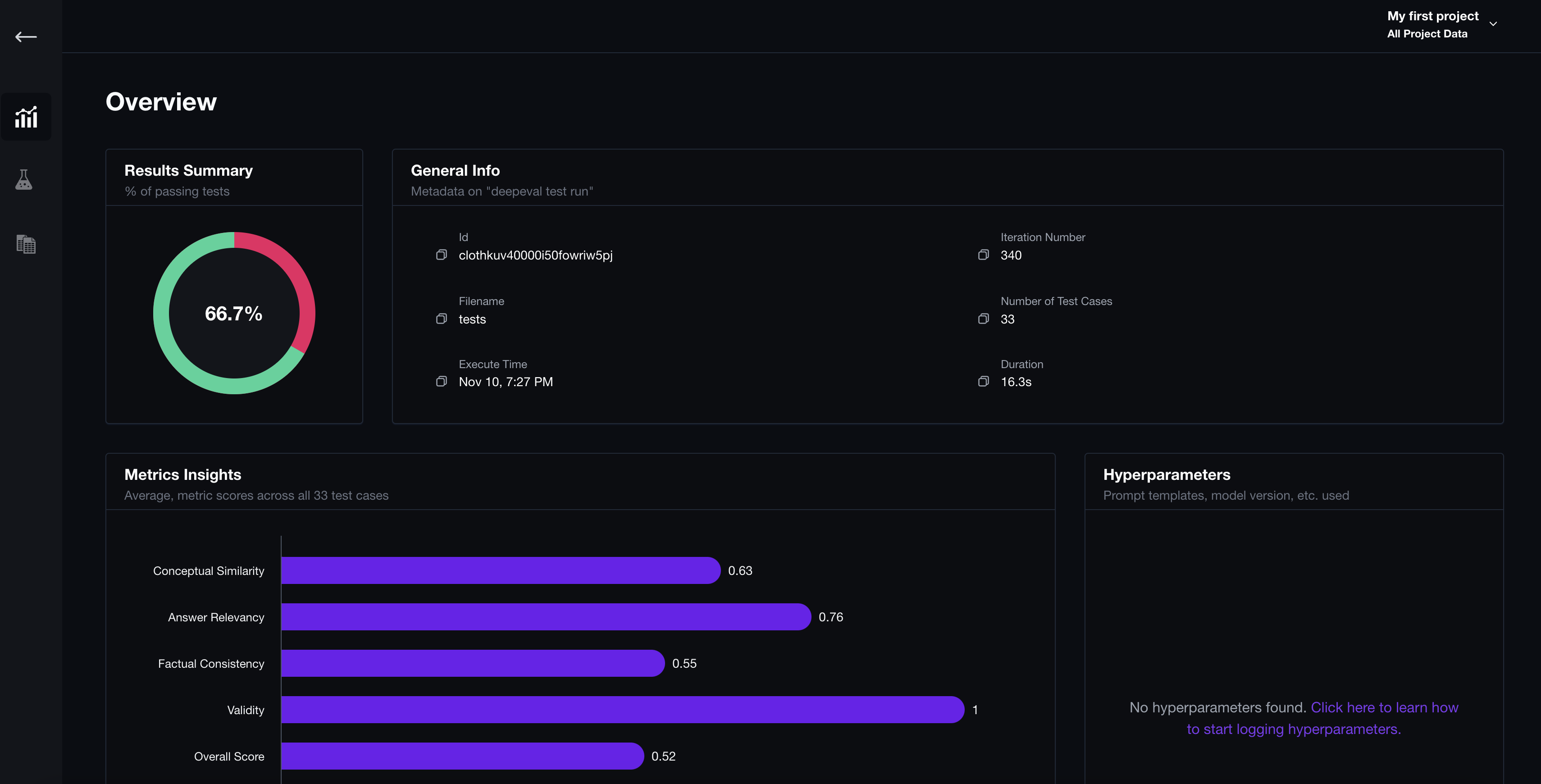

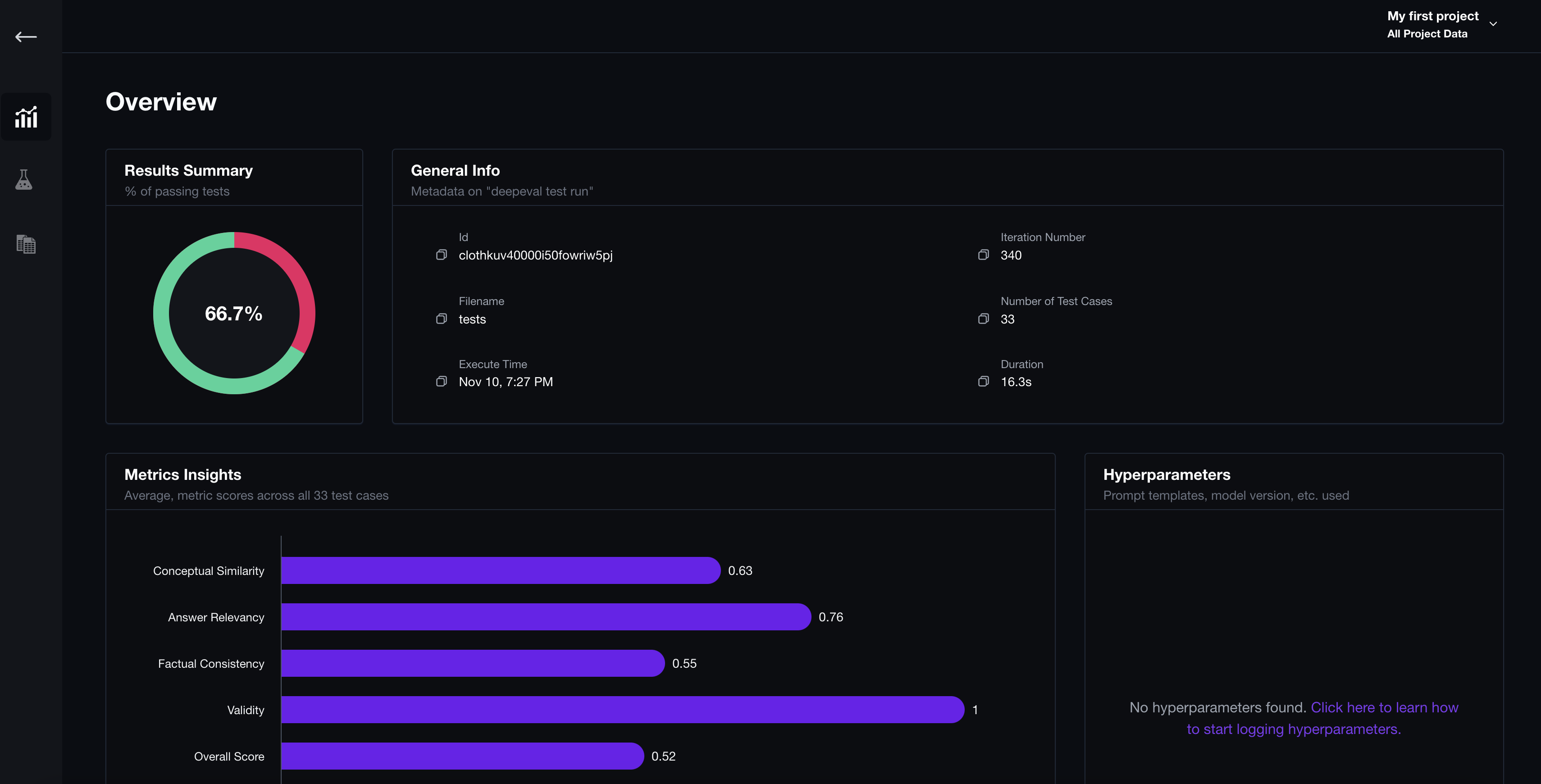

You should see a link displayed in the CLI once the test has finished running. Paste it into your browser to view the results!

-

+

diff --git a/deepeval/_version.py b/deepeval/_version.py

index 171aba412..9d0d5a8eb 100644

--- a/deepeval/_version.py

+++ b/deepeval/_version.py

@@ -1 +1 @@

-__version__: str = "0.20.17"

+__version__: str = "0.20.19"

diff --git a/deepeval/cli/test.py b/deepeval/cli/test.py

index 2e6d3344d..239327c7f 100644

--- a/deepeval/cli/test.py

+++ b/deepeval/cli/test.py

@@ -2,8 +2,6 @@

import typer

import os

from typing_extensions import Annotated

-from deepeval.metrics.overall_score import assert_overall_score

-from .cli_key_handler import set_env_vars

from typing import Optional

from deepeval.test_run import test_run_manager, TEMP_FILE_NAME

from deepeval.utils import delete_file_if_exists

@@ -17,67 +15,6 @@

app = typer.Typer(name="test")

-def sample():

- set_env_vars()

- print("Sending sample test results...")

- print(

- "If this is your first time running these models, it may take a while."

- )

- try:

- query = "How does photosynthesis work?"

- output = "Photosynthesis is the process by which green plants and some other organisms use sunlight to synthesize foods with the help of chlorophyll pigment."

- expected_output = "Photosynthesis is the process by which green plants and some other organisms use sunlight to synthesize food with the help of chlorophyll pigment."

- context = "Biology"

-

- assert_overall_score(query, output, expected_output, context)

-

- except AssertionError as e:

- pass

- try:

- query = "What is the capital of France?"

- output = "The capital of France is Paris."

- expected_output = "The capital of France is Paris."

- context = "Geography"

-

- assert_overall_score(query, output, expected_output, context)

-

- except AssertionError as e:

- pass

- try:

- query = "What are the major components of a cell?"

- output = "Cells have many major components, including the cell membrane, nucleus, mitochondria, and endoplasmic reticulum."

- expected_output = "Cells have several major components, such as the cell membrane, nucleus, mitochondria, and endoplasmic reticulum."

- context = "Biology"

- minimum_score = 0.8 # Adjusting the minimum score threshold

-

- assert_overall_score(

- query, output, expected_output, context, minimum_score

- )

-

- except AssertionError as e:

- pass

-

- try:

- query = "What is the capital of Japan?"

- output = "The largest city in Japan is Tokyo."

- expected_output = "The capital of Japan is Tokyo."

- context = "Geography"

-

- assert_overall_score(query, output, expected_output, context)

- except AssertionError as e:

- pass

-

- try:

- query = "Explain the theory of relativity."

- output = "Einstein's theory of relativity is famous."

- expected_output = "Einstein's theory of relativity revolutionized our understanding of space, time, and gravity."

- context = "Physics"

-

- assert_overall_score(query, output, expected_output, context)

- except AssertionError as e:

- pass

-

-

def check_if_valid_file(test_file_or_directory: str):

if "::" in test_file_or_directory:

test_file_or_directory, test_case = test_file_or_directory.split("::")

diff --git a/deepeval/dataset.py b/deepeval/dataset.py

index 4433fe27b..d8a9fb1a4 100644

--- a/deepeval/dataset.py

+++ b/deepeval/dataset.py

@@ -1,593 +1,220 @@

-"""Class for Evaluation Datasets

-"""

+from typing import List, Optional

+from dataclasses import dataclass

+import pandas as pd

import json

-import random

-import time

-from collections import UserList

-from datetime import datetime

-from typing import Any, Callable, List, Optional

-from tabulate import tabulate

-

-from deepeval.evaluator import run_test

-from deepeval.metrics.base_metric import BaseMetric

+from deepeval.metrics import BaseMetric

from deepeval.test_case import LLMTestCase

-from dataclasses import asdict

+from deepeval.evaluator import evaluate

-class EvaluationDataset(UserList):

- """Class for Evaluation Datasets - which are a list of test cases"""

+@dataclass

+class EvaluationDataset:

+ test_cases: List[LLMTestCase]

- def __init__(self, test_cases: List[LLMTestCase]):

- self.data: List[LLMTestCase] = test_cases

+ def __init__(self, test_cases: Optional[List[LLMTestCase]] = None):

+ self.test_cases = test_cases if test_cases is not None else []

- @classmethod

- def from_csv(

- cls, # Use 'cls' instead of 'self' for class methods

- csv_filename: str,

- query_column: Optional[str] = None,

- expected_output_column: Optional[str] = None,

- context_column: Optional[str] = None,

- output_column: Optional[str] = None,

- id_column: str = None,

- metrics: List[BaseMetric] = None,

- ):

- import pandas as pd

-

- df = pd.read_csv(csv_filename)

- if query_column is not None and query_column in df.columns:

- querys = df[query_column].values

- else:

- querys = [None] * len(df)

- if (

- expected_output_column is not None

- and expected_output_column in df.columns

- ):

- expected_outputs = df[expected_output_column].values

- else:

- expected_outputs = [None] * len(df)

- if context_column is not None and context_column in df.columns:

- contexts = df[context_column].values

- else:

- contexts = [None] * len(df)

- if output_column is not None and output_column in df.columns:

- outputs = df[output_column].values

- else:

- outputs = [None] * len(df)

- if id_column is not None:

- ids = df[id_column].values

- else:

- ids = [None] * len(df)

-

- # Initialize the 'data' attribute as an empty list

- cls.data = []

-

- for i, query_data in enumerate(querys):

- cls.data.append(

- LLMTestCase(

- input=query_data,

- expected_output=expected_outputs[i],

- context=contexts[i],

- id=ids[i] if id_column else None,

- actual_output=outputs[i] if output_column else None,

- )

- )

- return cls(cls.data)

+ def add_test_case(self, test_case: LLMTestCase):

+ self.test_cases.append(test_case)

- def from_test_cases(self, test_cases: list):

- self.data = test_cases

+ def __iter__(self):

+ return iter(self.test_cases)

- @classmethod

- def from_hf_dataset(

- cls,

- dataset_name: str,

- split: str,

- query_column: str,

- expected_output_column: str,

- context_column: str = None,

- output_column: str = None,

- id_column: str = None,

+ def evaluate(self, metrics: List[BaseMetric]):

+ return evaluate(self.test_cases, metrics)

+

+ def load_csv(

+ self,

+ file_path: str,

+ input_col_name: str,

+ actual_output_col_name: str,

+ expected_output_col_name: Optional[str] = None,

+ context_col_name: Optional[str] = None,

+ context_col_delimiter: str = ";",

):

"""

- Load test cases from a HuggingFace dataset.

+ Load test cases from a CSV file.

+

+ This method reads a CSV file, extracting test case data based on specified column names. It creates LLMTestCase objects for each row in the CSV and adds them to the Dataset instance. The context data, if provided, is expected to be a delimited string in the CSV, which this method will parse into a list.

Args:

- dataset_name (str): The name of the HuggingFace dataset to load.

- split (str): The split of the dataset to load (e.g., 'train', 'test').

- query_column (str): The column in the dataset corresponding to the query.

- expected_output_column (str): The column in the dataset corresponding to the expected output.

- context_column (str, optional): The column in the dataset corresponding to the context. Defaults to None.

- output_column (str, optional): The column in the dataset corresponding to the output. Defaults to None.

- id_column (str, optional): The column in the dataset corresponding to the ID. Defaults to None.

+ file_path (str): Path to the CSV file containing the test cases.

+ input_col_name (str): The column name in the CSV corresponding to the input for the test case.

+ actual_output_col_name (str): The column name in the CSV corresponding to the actual output for the test case.

+ expected_output_col_name (str, optional): The column name in the CSV corresponding to the expected output for the test case. Defaults to None.

+ context_col_name (str, optional): The column name in the CSV corresponding to the context for the test case. Defaults to None.

+ context_col_delimiter (str, optional): The delimiter used to separate items in the context list within the CSV file. Defaults to ';'.

Returns:

- EvaluationDataset: An instance of EvaluationDataset containing the loaded test cases.

- """

- try:

- from datasets import load_dataset

- except ImportError:

- raise ImportError(

- "The 'datasets' library is missing. Please install it using pip: pip install datasets"

- )

+ None: The method adds test cases to the Dataset instance but does not return anything.

- hf_dataset = load_dataset(dataset_name, split=split)

- test_cases = []

+ Raises:

+ FileNotFoundError: If the CSV file specified by `file_path` cannot be found.

+ pd.errors.EmptyDataError: If the CSV file is empty.

+ KeyError: If one or more specified columns are not found in the CSV file.

- for i, row in enumerate(hf_dataset):

- test_cases.append(

- LLMTestCase(

- input=row[query_column],

- expected_output=row[expected_output_column],

- context=row[context_column] if context_column else None,

- actual_output=row[output_column] if output_column else None,

- id=row[id_column] if id_column else None,

- )

- )

- return cls(test_cases)

-

- @classmethod

- def from_json(

- cls,

- json_filename: str,

- query_column: str,

- expected_output_column: str,

- context_column: str,

- output_column: str,

- id_column: str = None,

- ):

- """

- This is for JSON data in the format of key-value array pairs.

- {

- "query": ["What is the customer success number", "What is the customer success number"],

- "context": ["Context 1", "Context 2"],

- "output": ["Output 1", "Output 2"]

- }

-

- if the JSON data is in a list of dictionaries, use from_json_list

+ Note:

+ The CSV file is expected to contain columns as specified in the arguments. Each row in the file represents a single test case. The method assumes the file is properly formatted and the specified columns exist. For context data represented as lists in the CSV, ensure the correct delimiter is specified.

"""

- with open(json_filename, "r") as f:

- data = json.load(f)

- test_cases = []

+ df = pd.read_csv(file_path)

- for i, query in enumerate(data[query_column]):

- test_cases.append(

- LLMTestCase(

- input=data[query_column][i],

- expected_output=data[expected_output_column][i],

- context=data[context_column][i],

- actual_output=data[output_column][i],

- id=data[id_column][i] if id_column else None,

- )

+ inputs = df[input_col_name].values

+ actual_outputs = df[actual_output_col_name].values

+ expected_outputs = self._get_column_data(

+ df, expected_output_col_name, default=None

+ )

+ contexts = [

+ context.split(context_col_delimiter) if isinstance(context, str) and context else []

+ for context in self._get_column_data(

+ df, context_col_name, default=""

)

- return cls(test_cases)

-

- @classmethod

- def from_json_list(

- cls,

- json_filename: str,

- query_column: str,

- expected_output_column: str,

- context_column: str,

- output_column: str,

- id_column: str = None,

- ):

- """

- This is for JSON data in the format of a list of dictionaries.

- [

- {"query": "What is the customer success number", "expected_output": "What is the customer success number", "context": "Context 1", "output": "Output 1"},

]

- """

- with open(json_filename, "r") as f:

- data = json.load(f)

- test_cases = []

- for i, query in enumerate(data):

- test_cases.append(

+

+ for input, actual_output, expected_output, context in zip(

+ inputs, actual_outputs, expected_outputs, contexts

+ ):

+ self.add_test_case(

LLMTestCase(

- input=data[i][query_column],

- expected_output=data[i][expected_output_column],

- context=data[i][context_column],

- actual_output=data[i][output_column],

- id=data[i][id_column] if id_column else None,

+ input=input,

+ actual_output=actual_output,

+ expected_output=expected_output,

+ context=context,

)

)

- return cls(test_cases)

-

- @classmethod

- def from_dict(

- cls,

- data: List[dict],

- query_key: str,

- expected_output_key: str,

- context_key: str = None,

- output_key: str = None,

- id_key: str = None,

+

+ def _get_column_data(self, df: pd.DataFrame, col_name: Optional[str], default=None):

+ return (

+ df[col_name].values

+ if col_name in df.columns

+ else [default] * len(df)

+ )

+

+ def load_json_list(

+ self,

+ file_path: str,

+ input_key_name: str,

+ actual_output_key_name: str,

+ expected_output_key_name: Optional[str] = None,

+ context_key_name: Optional[str] = None,

):

"""

- Load test cases from a list of dictionaries.

+ Load test cases from a JSON file.

+

+ This method reads a JSON file containing a list of objects, each representing a test case. It extracts the necessary information based on specified key names and creates LLMTestCase objects to add to the Dataset instance.

Args:

- data (List[dict]): The list of dictionaries containing the test case data.

- query_key (str): The key in each dictionary corresponding to the query.

- expected_output_key (str): The key in each dictionary corresponding to the expected output.

- context_key (str, optional): The key in each dictionary corresponding to the context. Defaults to None.

- output_key (str, optional): The key in each dictionary corresponding to the output. Defaults to None.

- id_key (str, optional): The key in each dictionary corresponding to the ID. Defaults to None.

- metrics (List[BaseMetric], optional): The list of metrics to be associated with the test cases. Defaults to None.

+ file_path (str): Path to the JSON file containing the test cases.

+ input_key_name (str): The key name in the JSON objects corresponding to the input for the test case.

+ actual_output_key_name (str): The key name in the JSON objects corresponding to the actual output for the test case.

+ expected_output_key_name (str, optional): The key name in the JSON objects corresponding to the expected output for the test case. Defaults to None.

+ context_key_name (str, optional): The key name in the JSON objects corresponding to the context for the test case. Defaults to None.

Returns:

- EvaluationDataset: An instance of EvaluationDataset containing the loaded test cases.

- """

- test_cases = []

- for i, case_data in enumerate(data):

- test_cases.append(

- LLMTestCase(

- input=case_data[query_key],

- expected_output=case_data[expected_output_key],

- context=case_data[context_key] if context_key else None,

- actual_output=case_data[output_key] if output_key else None,

- id=case_data[id_key] if id_key else None,

- )

- )

- return cls(test_cases)

-

- def to_dict(self):

- return [asdict(x) for x in self.data]

+ None: The method adds test cases to the Dataset instance but does not return anything.

- def to_csv(self, csv_filename: str):

- import pandas as pd

+ Raises:

+ FileNotFoundError: If the JSON file specified by `file_path` cannot be found.

+ ValueError: If the JSON file is not valid or if required keys (input and actual output) are missing in one or more JSON objects.

- df = pd.DataFrame(self.data)

- df.to_csv(csv_filename, index=False)

-

- def to_json(self, json_filename: str):

- with open(json_filename, "w") as f:

- json.dump(self.data, f)

+ Note:

+ The JSON file should be structured as a list of objects, with each object containing the required keys. The method assumes the file format and keys are correctly defined and present.

+ """

+ try:

+ with open(file_path, "r") as file:

+ json_list = json.load(file)

+ except FileNotFoundError:

+ raise FileNotFoundError(f"The file {file_path} was not found.")

+ except json.JSONDecodeError:

+ raise ValueError(f"The file {file_path} is not a valid JSON file.")

+

+ # Process each JSON object

+ for json_obj in json_list:

+ if (

+ input_key_name not in json_obj

+ or actual_output_key_name not in json_obj

+ ):

+ raise ValueError(

+ "Required fields are missing in one or more JSON objects"

+ )

- def from_hf_evals(self):

- raise NotImplementedError

+ input = json_obj[input_key_name]

+ actual_output = json_obj[actual_output_key_name]

+ expected_output = json_obj.get(expected_output_key_name)

+ context = json_obj.get(context_key_name)

- def from_df(self):

- raise NotImplementedError

+ self.add_test_case(

+ LLMTestCase(

+ input=input,

+ actual_output=actual_output,

+ expected_output=expected_output,

+ context=context,

+ )

+ )

- def __repr__(self):

- return f"{self.__class__.__name__}({self.data})"

+ def load_hf_dataset(

+ self,

+ dataset_name: str,

+ input_field_name: str,

+ actual_output_field_name: str,

+ expected_output_field_name: Optional[str] = None,

+ context_field_name: Optional[str] = None,

+ split: str = "train",

+ ):

+ """

+ Load test cases from a Hugging Face dataset.

- def sample(self, n: int = 5):

- if len(self.data) <= n:

- n = len(self.data)

- result = random.sample(self.data, n)

- return [asdict(r) for r in result]

+ This method loads a specified dataset and split from Hugging Face's datasets library, then iterates through each entry to create and add LLMTestCase objects to the Dataset instance based on specified field names.

- def head(self, n: int = 5):

- return self.data[:n]

+ Args:

+ dataset_name (str): The name of the Hugging Face dataset to load.

+ input_field_name (str): The field name in the dataset corresponding to the input for the test case.

+ actual_output_field_name (str): The field name in the dataset corresponding to the actual output for the test case.

+ expected_output_field_name (str, optional): The field name in the dataset corresponding to the expected output for the test case. Defaults to None.

+ context_field_name (str, optional): The field name in the dataset corresponding to the context for the test case. Defaults to None.

+ split (str, optional): The split of the dataset to load (e.g., 'train', 'test', 'validation'). Defaults to 'train'.

- def __getitem__(self, index):

- return self.data[index]

+ Returns:

+ None: The method adds test cases to the Dataset instance but does not return anything.

- def __setitem__(self, index, value):

- self.data[index] = value

+ Raises:

+ ValueError: If the required fields (input and actual output) are not found in the dataset.

+ FileNotFoundError: If the specified dataset cannot be found.

+ ImportError: If the `datasets` library is not installed.

- def __delitem__(self, index):

- del self.data[index]

+ Note:

+ Ensure that the dataset structure aligns with the expected field names. The method assumes each dataset entry is a dictionary-like object.

+ """

- def run_evaluation(

- self,

- completion_fn: Callable[[str], str] = None,

- outputs: List[str] = None,

- test_filename: str = None,

- max_retries: int = 3,

- min_success: int = 1,

- metrics: List[BaseMetric] = None,

- ) -> str:

- """Run evaluation with given metrics"""

- if completion_fn is None:

- assert outputs is not None

-

- table: List[List[Any]] = []

-

- headers: List[str] = [

- "Test Passed",

- "Metric Name",

- "Score",

- "Output",

- "Expected output",

- "Message",

- ]

- results = run_test(

- test_cases=self.data,

- metrics=metrics,

- raise_error=True,

- max_retries=max_retries,

- min_success=min_success,

- )

- for result in results:

- table.append(

- [

- result.success,

- result.metric_name,

- result.score,

- result.output,

- result.expected_output,

- "",

- ]

- )

- if test_filename is None:

- test_filename = (

- f"test-result-{datetime.now().__str__().replace(' ', '-')}.txt"

- )

- with open(test_filename, "w") as f:

- f.write(tabulate(table, headers=headers))

- print(f"Saved to {test_filename}")

- for t in table:

- assert t[0] == True, t[-1]

- return test_filename

-

- def review(self):

- """A bulk editor for reviewing synthetic data."""

try:

- from dash import (

- Dash,

- Input,

- Output,

- State,

- callback,

- dash_table,

- dcc,

- html,

- )

- except ModuleNotFoundError:

- raise Exception(

- """You will need to run `pip install dash` to be able to review tests that were automatically created."""

+ from datasets import load_dataset

+ except ImportError:

+ raise ImportError(

+ "The 'datasets' library is missing. Please install it using pip: pip install datasets"

)

+ hf_dataset = load_dataset(dataset_name, split=split)

- table_data = [

- {"input": x.query, "expected_output": x.expected_output}

- for x in self.data

- ]

- app = Dash(

- __name__,

- external_stylesheets=[

- "https://cdn.jsdelivr.net/npm/bootswatch@5.3.1/dist/darkly/bootstrap.min.css"

- ],

- )

-

- app.layout = html.Div(

- [

- html.H1("Bulk Review Test Cases", style={"marginLeft": "20px"}),

- html.Button(

- "Add Test case",

- id="editing-rows-button",

- n_clicks=0,

- style={

- "padding": "8px",

- "backgroundColor": "purple", # Added purple background color

- "color": "white",

- "border": "2px solid purple", # Added purple border

- "marginLeft": "20px",

- },

- ),

- html.Div(

- dash_table.DataTable(

- id="adding-rows-table",

- columns=[

- {

- "name": c.title().replace("_", " "),

- "id": c,

- "deletable": True,

- "renamable": True,

- }

- for i, c in enumerate(["input", "expected_output"])

- ],

- data=table_data,

- editable=True,

- row_deletable=True,

- style_data_conditional=[

- {

- "if": {"row_index": "odd"},

- "backgroundColor": "rgb(40, 40, 40)",

- "color": "white",

- },

- {

- "if": {"row_index": "even"},

- "backgroundColor": "rgb(30, 30, 30)",

- "color": "white",

- },

- {

- "if": {"state": "selected"},

- "backgroundColor": "white",

- "color": "white",

- },

- ],

- style_header={

- "backgroundColor": "rgb(30, 30, 30)",

- "color": "white",

- "fontWeight": "bold",

- "padding": "10px", # Added padding

- },

- style_cell={

- "padding": "10px", # Added padding

- "whiteSpace": "pre-wrap", # Wrap cell contents

- "maxHeight": "200px",

- },

- ),

- style={"padding": "20px"}, # Added padding

- ),

- html.Div(style={"margin-top": "20px"}),

- html.Button(

- "Save To CSV",

- id="save-button",

- n_clicks=0,

- style={

- "padding": "8px",

- "backgroundColor": "purple", # Added purple background color

- "color": "white",

- "border": "2px solid purple", # Added purple border

- "marginLeft": "20px",

- },

- ),

- dcc.Input(

- id="filename-input",

- type="text",

- placeholder="Enter filename (.csv format)",

- style={

- "padding": "8px",

- "backgroundColor": "rgb(30, 30, 30)",

- "color": "white",

- "marginLeft": "20px",

- "border": "2px solid purple", # Added purple border

- "width": "200px", # Edited width

- },

- value="review-test.csv",

- ),

- html.Div(id="code-output"),

- ],

- style={"padding": "20px"}, # Added padding

- )

-

- @callback(

- Output("adding-rows-table", "data"),

- Input("editing-rows-button", "n_clicks"),

- State("adding-rows-table", "data"),

- State("adding-rows-table", "columns"),

- )

- def add_row(n_clicks, rows, columns):

- if n_clicks > 0:

- rows.append({c["id"]: "" for c in columns})

- return rows

-

- @callback(

- Output("save-button", "n_clicks"),

- Input("save-button", "n_clicks"),

- State("adding-rows-table", "data"),

- State("adding-rows-table", "columns"),

- State("filename-input", "value"),

- )

- def save_data(n_clicks, rows, columns, filename):

- if n_clicks > 0:

- import csv

-

- with open(filename, "w", newline="") as f:

- writer = csv.DictWriter(

- f, fieldnames=[c["id"] for c in columns]

- )

- writer.writeheader()

- writer.writerows(rows)

- return n_clicks

-

- @app.callback(

- Output("code-output", "children"),

- Input("save-button", "n_clicks"),

- State("filename-input", "value"),

- )

- def show_code(n_clicks, filename):

- if n_clicks > 0:

- code = f"""

- from deepeval.dataset import EvaluationDataset

-

- # Replace 'filename.csv' with the actual filename

- ds = EvaluationDataset.from_csv('{filename}')

-

- # Access the data in the CSV file

- # For example, you can print a few rows

- print(ds.sample())

- """

- return html.Div(

- [

- html.P(

- "Code to load the CSV file back into a dataset for testing:"

- ),

- html.Pre(code, className="language-python"),

- ],

- style={"padding": "20px"}, # Added padding

+ # Process each entry in the dataset

+ for entry in hf_dataset:

+ if (

+ input_field_name not in entry

+ or actual_output_field_name not in entry

+ ):

+ raise ValueError(

+ "Required fields are missing in one or more dataset entries"

)

- else:

- return ""

-

- app.run(debug=False)

- def add_evaluation_query_answer_pairs(

- self,

- openai_api_key: str,

- context: str,

- n: int = 3,

- model: str = "openai/gpt-3.5-turbo",

- ):

- """Utility function to create an evaluation dataset using ChatGPT."""

- new_dataset = create_evaluation_query_answer_pairs(

- openai_api_key=openai_api_key, context=context, n=n, model=model

- )

- self.data += new_dataset.data

- print(f"Added {len(new_dataset.data)}!")

+ input = entry[input_field_name]

+ actual_output = entry[actual_output_field_name]

+ expected_output = entry.get(expected_output_field_name)

+ context = entry.get(context_field_name)

-

-def make_chat_completion_request(prompt: str, openai_api_key: str):

- import openai

-

- openai.api_key = openai_api_key

- response = openai.ChatCompletion.create(

- model="gpt-3.5-turbo",

- messages=[

- {"role": "system", "content": "You are a helpful assistant."},

- {"role": "user", "content": prompt},

- ],

- )

- return response.choices[0].message.content

-

-

-def generate_chatgpt_output(prompt: str, openai_api_key: str) -> str:

- max_retries = 3

- retry_delay = 1

- for attempt in range(max_retries):

- try:

- expected_output = make_chat_completion_request(

- prompt=prompt, openai_api_key=openai_api_key

- )

- break

- except Exception as e:

- print(f"Error occurred: {e}")

- if attempt < max_retries - 1:

- print(f"Retrying in {retry_delay} seconds...")

- time.sleep(retry_delay)

- retry_delay *= 2

- else:

- raise

-

- return expected_output

-

-

-def create_evaluation_query_answer_pairs(

- openai_api_key: str,

- context: str,

- n: int = 3,

- model: str = "openai/gpt-3.5-turbo",

-) -> EvaluationDataset:

- """Utility function to create an evaluation dataset using GPT."""

- prompt = f"""You are generating {n} sets of of query-answer pairs to create an evaluation dataset based on the below context.

-Context: {context}

-

-Respond in JSON format in 1 single line without white spaces an array of JSON with the keys `query` and `answer`. Do not use any other keys in the response.

-JSON:"""

- for _ in range(3):

- try:

- responses = generate_chatgpt_output(

- prompt, openai_api_key=openai_api_key

+ self.add_test_case(

+ LLMTestCase(

+ input=input,

+ actual_output=actual_output,

+ expected_output=expected_output,

+ context=context,

+ )

)

- responses = json.loads(responses)

- break

- except Exception as e:

- print(e)

- return EvaluationDataset(test_cases=[])

-

- test_cases = []

- for response in responses:

- test_case = LLMTestCase(

- input=response["query"],

- expected_output=response["answer"],

- context=context,

- # store this as None for now

- actual_output="-",

- )

- test_cases.append(test_case)

-

- dataset = EvaluationDataset(test_cases=test_cases)

- return dataset
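The new `load_csv` and `load_json_list` methods follow two rules. CSV context cells are split on a delimiter, and an empty cell becomes an empty list. JSON objects must contain the input and actual-output keys. The sketch below illustrates both rules using only the standard library. It is an assumption-laden illustration, not deepeval code. The helpers `rows_from_csv` and `rows_from_json_list` are hypothetical. They return plain dicts instead of `LLMTestCase` objects, so deepeval and pandas are not needed to run it.

```python
import csv
import io
import json
from typing import Dict, List, Optional


def rows_from_csv(
    text: str,
    input_col: str,
    actual_output_col: str,
    context_col: Optional[str] = None,
    delimiter: str = ";",
) -> List[Dict[str, object]]:
    """Hypothetical helper mirroring load_csv's context handling."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        # Required columns: a missing column raises KeyError.
        raw_context = record.get(context_col) if context_col else None
        rows.append(
            {
                "input": record[input_col],
                "actual_output": record[actual_output_col],
                # Empty or missing context cells become an empty list.
                "context": raw_context.split(delimiter) if raw_context else [],
            }
        )
    return rows


def rows_from_json_list(
    text: str,
    input_key: str,
    actual_output_key: str,
    context_key: Optional[str] = None,
) -> List[Dict[str, object]]:
    """Hypothetical helper mirroring load_json_list's validation."""
    try:
        items = json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError("The input is not valid JSON.") from err
    rows = []
    for obj in items:
        # Input and actual output are required; the rest are optional.
        if input_key not in obj or actual_output_key not in obj:
            raise ValueError(
                "Required fields are missing in one or more JSON objects"
            )
        rows.append(
            {
                "input": obj[input_key],
                "actual_output": obj[actual_output_key],
                "context": obj.get(context_key) if context_key else None,
            }
        )
    return rows


if __name__ == "__main__":
    sample = "q,a,docs\nWhat if these shoes don't fit?,30-day refund,Refunds;Shipping\n"
    print(rows_from_csv(sample, "q", "a", context_col="docs"))
```

A NaN-producing empty cell in pandas is the case the `isinstance(context, str)` guard in `load_csv` is meant to catch. `csv.DictReader` yields an empty string there instead, so the plain truthiness check is enough in this sketch.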

diff --git a/deepeval/evaluator.py b/deepeval/evaluator.py

index 63322ce9f..74d80a10f 100644

--- a/deepeval/evaluator.py

+++ b/deepeval/evaluator.py

@@ -1,16 +1,15 @@

"""Function for running test

"""

-import os

-import warnings

-from typing import List, Optional, Union

+

+from typing import List

import time

from dataclasses import dataclass

-from .retry import retry

+import copy

-from .metrics import BaseMetric

-from .test_case import LLMTestCase, TestCase

+from deepeval.progress_context import progress_context

+from deepeval.metrics import BaseMetric

+from deepeval.test_case import LLMTestCase

from deepeval.test_run import test_run_manager

-import sys

@dataclass

@@ -18,110 +17,122 @@ class TestResult:

"""Returned from run_test"""

success: bool

- score: float

- metric_name: str

- query: str

- output: str

+ metrics: List[BaseMetric]

+ input: str

+ actual_output: str

expected_output: str

- metadata: Optional[dict]

- context: str

-

- def __post_init__(self):

- """Ensures score is between 0 and 1 after initialization"""

- original_score = self.score

- self.score = min(max(0, self.score), 1)

- if self.score != original_score:

- warnings.warn(

- "The score was adjusted to be within the range [0, 1]."

- )

-

- def __gt__(self, other: "TestResult") -> bool:

- """Greater than comparison based on score"""

- return self.score > other.score

-

- def __lt__(self, other: "TestResult") -> bool:

- """Less than comparison based on score"""

- return self.score < other.score

+ context: List[str]

def create_test_result(

test_case: LLMTestCase,

success: bool,

- metric: float,

+ metrics: List[BaseMetric],

) -> TestResult:

if isinstance(test_case, LLMTestCase):

return TestResult(

success=success,

- score=metric.score,

- metric_name=metric.__name__,

- query=test_case.input if test_case.input else "-",

- output=test_case.actual_output if test_case.actual_output else "-",

- expected_output=test_case.expected_output

- if test_case.expected_output

- else "-",

- metadata=None,

+ metrics=metrics,

+ input=test_case.input,

+ actual_output=test_case.actual_output,

+ expected_output=test_case.expected_output,

context=test_case.context,

)

else:

raise ValueError("TestCase not supported yet.")

-def run_test(

- test_cases: Union[TestCase, LLMTestCase, List[LLMTestCase]],

+def execute_test(

+ test_cases: List[LLMTestCase],

metrics: List[BaseMetric],

- max_retries: int = 1,

- delay: int = 1,

- min_success: int = 1,

- raise_error: bool = False,

+ save_to_disk: bool = False,

) -> List[TestResult]:

- if isinstance(test_cases, TestCase):

- test_cases = [test_cases]

-

- test_results = []

+ test_results: List[TestResult] = []

+ test_run_manager.save_to_disk = save_to_disk

+ count = 0

for test_case in test_cases:

- failed_metrics = []

+ success = True

for metric in metrics:

test_start_time = time.perf_counter()

- # score = metric.measure(test_case)

- metric.score = metric.measure(test_case)

- success = metric.is_successful()

+ metric.measure(test_case)

test_end_time = time.perf_counter()

run_duration = test_end_time - test_start_time

- # metric.score = score

test_run_manager.get_test_run().add_llm_test_case(

test_case=test_case,

metrics=[metric],

run_duration=run_duration,

+ index=count,

)

test_run_manager.save_test_run()

- test_result = create_test_result(test_case, success, metric)

- test_results.append(test_result)

- if not success:

- failed_metrics.append((metric.__name__, metric.score))

+ if not metric.is_successful():

+ success = False

- if raise_error and failed_metrics:

- raise AssertionError(

- f"Metrics {', '.join([f'{name} (Score: {score})' for name, score in failed_metrics])} failed."

+ count += 1

+ test_result = create_test_result(

+ test_case, success, copy.deepcopy(metrics)

)

+ test_results.append(test_result)

return test_results

-def assert_test(

- test_cases: Union[LLMTestCase, List[LLMTestCase]],

+def run_test(

+ test_case: LLMTestCase,

metrics: List[BaseMetric],

- max_retries: int = 1,

- delay: int = 1,

- min_success: int = 1,

- ) -> List[TestResult]:

+ ) -> TestResult:

- """Assert a test"""

- return run_test(

- test_cases=test_cases,

- metrics=metrics,

- max_retries=max_retries,

- delay=delay,

- min_success=min_success,

- raise_error=True,

- )

+ with progress_context("Executing run_test()..."):

+ test_result = execute_test([test_case], metrics, False)[0]

+ print_test_result(test_result)

+ print("\n" + "-" * 70)

+ return test_result

+

+

+def assert_test(test_case: LLMTestCase, metrics: List[BaseMetric]):

+ # len(execute_test(...)) is always 1 for assert_test

+ test_result = execute_test([test_case], metrics, True)[0]

+ if not test_result.success:

+ failed_metrics = [

+ metric

+ for metric in test_result.metrics

+ if not metric.is_successful()

+ ]

+ failed_metrics_str = ", ".join(

+ [

+ f"{metric.__name__} (score: {metric.score}, minimum_score: {metric.minimum_score})"

+ for metric in failed_metrics

+ ]

+ )

+ raise AssertionError(f"Metrics {failed_metrics_str} failed.")

+

+

+def evaluate(test_cases: List[LLMTestCase], metrics: List[BaseMetric]):

+ with progress_context("Evaluating testcases..."):

+ test_results = execute_test(test_cases, metrics, True)

+ for test_result in test_results:

+ print_test_result(test_result)

+ print("\n" + "-" * 70)

+

+ test_run_manager.wrap_up_test_run(display_table=False)

+ return test_results

+

+

+def print_test_result(test_result: TestResult):

+ print("\n" + "=" * 70 + "\n")

+ print("Metrics Summary\n")

+ for metric in test_result.metrics:

+ if not metric.is_successful():

+ print(

+ f" - ❌ {metric.__name__} (score: {metric.score}, minimum_score: {metric.minimum_score})"

+ )

+ else:

+ print(

+ f" - ✅ {metric.__name__} (score: {metric.score}, minimum_score: {metric.minimum_score})"

+ )

+

+ print("\nFor test case:\n")

+ print(f" - input: {test_result.input}")

+ print(f" - actual output: {test_result.actual_output}")

+ print(f" - expected output: {test_result.expected_output}")

+ print(f" - context: {test_result.context}")
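The new `execute_test()` marks a test case as failed if any of its metrics fails, and `assert_test()` then names every failing metric in a single `AssertionError`. Below is a minimal, self-contained sketch of that control flow; `StubMetric`, `run_all` and `assert_all` are hypothetical names, and the stub uses a plain `name` field where the real metrics expose a `__name__` property.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StubMetric:
    """Hypothetical stand-in for a BaseMetric subclass with a fixed score."""

    name: str
    fixed_score: float
    minimum_score: float = 0.5
    score: float = 0.0

    def measure(self, test_case: dict) -> float:
        # A real metric would compute the score from the test case fields.
        self.score = self.fixed_score
        return self.score

    def is_successful(self) -> bool:
        return self.score >= self.minimum_score


def run_all(test_case: dict, metrics: List[StubMetric]) -> bool:
    # Every metric is measured (no short-circuit); one failure fails the case.
    success = True
    for metric in metrics:
        metric.measure(test_case)
        if not metric.is_successful():
            success = False
    return success


def assert_all(test_case: dict, metrics: List[StubMetric]) -> None:
    if not run_all(test_case, metrics):
        failed = ", ".join(
            f"{m.name} (score: {m.score}, minimum_score: {m.minimum_score})"
            for m in metrics
            if not m.is_successful()
        )
        raise AssertionError(f"Metrics {failed} failed.")
```

Because metric objects are reused across test cases, `execute_test()` stores a `copy.deepcopy(metrics)` snapshot in each `TestResult`; the sketch skips that step since it handles a single test case.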

diff --git a/deepeval/metrics/__init__.py b/deepeval/metrics/__init__.py

index 68e51d1ab..bb4a2301c 100644

--- a/deepeval/metrics/__init__.py

+++ b/deepeval/metrics/__init__.py

@@ -1 +1,7 @@

-from .base_metric import *

+from .base_metric import BaseMetric

+from .answer_relevancy import AnswerRelevancyMetric

+from .conceptual_similarity import ConceptualSimilarityMetric

+from .factual_consistency import FactualConsistencyMetric

+from .judgemental_gpt import JudgementalGPT

+from .llm_eval_metric import LLMEvalMetric

+from .ragas_metric import RagasMetric

diff --git a/deepeval/metrics/answer_relevancy.py b/deepeval/metrics/answer_relevancy.py

index a26f3c3c2..9c4219109 100644

--- a/deepeval/metrics/answer_relevancy.py

+++ b/deepeval/metrics/answer_relevancy.py

@@ -1,6 +1,5 @@

from deepeval.singleton import Singleton

from deepeval.test_case import LLMTestCase

-from deepeval.evaluator import assert_test

from deepeval.metrics.base_metric import BaseMetric

import numpy as np

@@ -77,31 +76,3 @@ def is_successful(self) -> bool:

@property

def __name__(self):

return "Answer Relevancy"

-

-

-def assert_answer_relevancy(

- query: str,

- output: str,

- minimum_score: float = 0.5,

- model_type: str = "default",

-):

- metric = AnswerRelevancyMetric(

- minimum_score=minimum_score, model_type=model_type

- )

- test_case = LLMTestCase(input=query, actual_output=output)

- assert_test(test_case, metrics=[metric])

-

-

-def is_answer_relevant(

- query: str,

- output: str,

- minimum_score: float = 0.5,

- model_type: str = "default",

-) -> bool:

- """Check if the output is relevant to the query."""

-

- metric = AnswerRelevancyMetric(

- minimum_score=minimum_score, model_type=model_type

- )

- test_case = LLMTestCase(input=query, actual_output=output)

- return metric.measure(test_case) >= minimum_score

diff --git a/deepeval/metrics/base_metric.py b/deepeval/metrics/base_metric.py

index 138dcb909..8299e2d35 100644

--- a/deepeval/metrics/base_metric.py

+++ b/deepeval/metrics/base_metric.py

@@ -1,11 +1,13 @@

from abc import abstractmethod

from deepeval.test_case import LLMTestCase

+from typing import Optional

class BaseMetric:

# set an arbitrary minimum score that will get over-ridden later

score: float = 0

+ reason: Optional[str] = None

@property

def minimum_score(self) -> float:

@@ -18,7 +20,7 @@ def minimum_score(self, value: float):

# Measure function signature is subject to be different - not sure

# how applicable this is - might need a better abstraction

@abstractmethod

- def measure(self, test_case: LLMTestCase, *args, **kwargs):

+ def measure(self, test_case: LLMTestCase, *args, **kwargs) -> float:

raise NotImplementedError

def _get_init_values(self):

diff --git a/deepeval/metrics/bias_classifier.py b/deepeval/metrics/bias_classifier.py

index a8571d180..45d510a6a 100644

--- a/deepeval/metrics/bias_classifier.py

+++ b/deepeval/metrics/bias_classifier.py

@@ -6,10 +6,9 @@

import warnings

from typing import Optional, List

-from deepeval.metrics.base_metric import BaseMetric

+from deepeval.metrics.base_metric import BaseMetric

from deepeval.test_case import LLMTestCase, LLMTestCaseParams

-from ..evaluator import assert_test

class UnBiasedMetric(BaseMetric):

@@ -84,27 +83,3 @@ def assert_successful(self):

@property

def __name__(self):

return "Bias Score"

-

-

-def assert_unbiased(

- evaluation_params: List[LLMTestCaseParams],

- input: str,

- actual_output: str,

- minimum_score: float = 0.5,

-): # see paper for rationale https://arxiv.org/pdf/2208.05777.pdf

- metric = UnBiasedMetric(

- evaluation_params=evaluation_params, minimum_score=minimum_score

- )

- test_case = LLMTestCase(input=input, actual_output=actual_output)

- assert_test(test_case, [metric])

-

-

-def is_unbiased(

- text: str,

- minimum_score: float = 0.5,

-) -> bool:

- """Check if the text is unbiased."""

-

- metric = UnBiasedMetric(minimum_score=minimum_score)

- test_case = LLMTestCase(input="placeholder", actual_output=text)

- return metric.measure(test_case) >= minimum_score

diff --git a/deepeval/metrics/conceptual_similarity.py b/deepeval/metrics/conceptual_similarity.py

index aefb6034f..49131c6b7 100644

--- a/deepeval/metrics/conceptual_similarity.py

+++ b/deepeval/metrics/conceptual_similarity.py

@@ -5,7 +5,6 @@

from deepeval.singleton import Singleton

from deepeval.test_case import LLMTestCase

from deepeval.utils import cosine_similarity

-from deepeval.evaluator import assert_test

from deepeval.progress_context import progress_context

from deepeval.metrics.base_metric import BaseMetric

@@ -39,35 +38,12 @@ def measure(self, test_case: LLMTestCase) -> float:

test_case.actual_output, test_case.expected_output

)

self.score = cosine_similarity(vectors[0], vectors[1])

+ self.success = self.score >= self.minimum_score

return float(self.score)

def is_successful(self) -> bool:

- return bool(self.score >= self.minimum_score)

+ return self.success

@property

def __name__(self):

return "Conceptual Similarity"

-

-

-def assert_conceptual_similarity(

- output: str, expected_output: str, minimum_score=0.7

-):

- metric = ConceptualSimilarityMetric(minimum_score=minimum_score)

- test_case = LLMTestCase(

- input="placeholder",

- actual_output=output,

- expected_output=expected_output,

- )

- assert_test(test_case, [metric])

-

-

-def is_conceptually_similar(

- output: str, expected_output: str, minimum_score=0.7

-) -> bool:

- metric = ConceptualSimilarityMetric(minimum_score=minimum_score)

- test_case = LLMTestCase(

- input="placeholder",

- actual_output=output,

- expected_output=expected_output,

- )

- return metric.measure(test_case) >= minimum_score
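`ConceptualSimilarityMetric.measure()` now records the pass/fail flag while scoring, and `is_successful()` simply returns it. The sketch below shows the underlying comparison, assuming `deepeval.utils.cosine_similarity` computes the standard cosine similarity; the hand-written vectors stand in for the sentence embeddings, and `passes_threshold` is a hypothetical helper.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumption: treat a zero vector as fully dissimilar
    return dot / (norm_a * norm_b)


def passes_threshold(
    a: Sequence[float], b: Sequence[float], minimum_score: float = 0.7
) -> bool:
    # Compute the score once, then compare it to the threshold.
    return cosine_similarity(a, b) >= minimum_score
```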

diff --git a/deepeval/metrics/factual_consistency.py b/deepeval/metrics/factual_consistency.py

index e64f0a444..a4a6ef6e5 100644

--- a/deepeval/metrics/factual_consistency.py

+++ b/deepeval/metrics/factual_consistency.py

@@ -3,7 +3,6 @@

from deepeval.test_case import LLMTestCase

from deepeval.utils import chunk_text, softmax

from deepeval.metrics.base_metric import BaseMetric

-from deepeval.evaluator import assert_test

from deepeval.progress_context import progress_context

from sentence_transformers import CrossEncoder

@@ -67,27 +66,3 @@ def is_successful(self) -> bool:

@property

def __name__(self):

return "Factual Consistency"

-

-

-def is_factually_consistent(

- output: str, context: str, minimum_score: float = 0.3

-) -> bool:

- """Check if the output is factually consistent with the context."""

-

- metric = FactualConsistencyMetric(minimum_score=minimum_score)

- test_case = LLMTestCase(

- input="placeholder", actual_output=output, context=context

- )

- return metric.measure(test_case) >= minimum_score

-

-

-def assert_factual_consistency(

- output: str, context: list[str], minimum_score: float = 0.3

-):

- """Assert that the output is factually consistent with the context."""

-

- metric = FactualConsistencyMetric(minimum_score=minimum_score)

- test_case = LLMTestCase(

- input="placeholder", actual_output=output, context=context

- )

- assert_test(test_case, [metric])

diff --git a/deepeval/metrics/judgemental_gpt.py b/deepeval/metrics/judgemental_gpt.py

new file mode 100644

index 000000000..b735993be

--- /dev/null

+++ b/deepeval/metrics/judgemental_gpt.py

@@ -0,0 +1,71 @@

+from deepeval.metrics.base_metric import BaseMetric

+from deepeval.test_case import LLMTestCaseParams, LLMTestCase

+from typing import List

+from pydantic import BaseModel

+from deepeval.api import Api

+

+

+class JudgementalGPTResponse(BaseModel):

+ score: float

+ reason: str

+

+

+class JudgementalGPTRequest(BaseModel):

+ text: str

+ criteria: str

+

+

+class JudgementalGPT(BaseMetric):

+ def __init__(

+ self,

+ name: str,

+ criteria: str,

+ evaluation_params: List[LLMTestCaseParams],

+ minimum_score: float = 0.5,

+ ):

+ self.criteria = criteria

+ self.name = name

+ self.evaluation_params = evaluation_params

+ self.minimum_score = minimum_score

+ self.success = None

+ self.reason = None

+

+ @property

+ def __name__(self):

+ return self.name

+

+ def measure(self, test_case: LLMTestCase):

+ text = """"""

+ for param in self.evaluation_params:

+ value = getattr(test_case, param.value)

+ text += f"{param.value}: {value} \n\n"

+

+ judgemental_gpt_request_data = JudgementalGPTRequest(

+ text=text, criteria=self.criteria

+ )

+

+ try:

+ body = judgemental_gpt_request_data.model_dump(

+ by_alias=True, exclude_none=True

+ )

+ except AttributeError:

+ body = judgemental_gpt_request_data.dict(

+ by_alias=True, exclude_none=True

+ )

+ api = Api()

+ result = api.post_request(

+ endpoint="/v1/judgemental-gpt",

+ body=body,

+ )

+ response = JudgementalGPTResponse(

+ score=result["score"],

+ reason=result["reason"],

+ )

+ self.reason = response.reason

+ self.score = response.score / 10

+ self.success = self.score >= self.minimum_score

+

+ return self.score

+

+ def is_successful(self):

+ return self.success
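`JudgementalGPT.measure()` serialises the selected test-case fields into one text block, posts it with the criteria to `/v1/judgemental-gpt`, and divides the returned score by 10. A sketch of the local steps only (no API call), assuming the endpoint scores on a 0 to 10 scale as the division suggests; `Param`, `Case` and both functions are hypothetical stand-ins for `LLMTestCaseParams`, `LLMTestCase` and the metric's internals.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Param(Enum):
    # Hypothetical stand-in for LLMTestCaseParams; values match attribute names.
    INPUT = "input"
    ACTUAL_OUTPUT = "actual_output"


@dataclass
class Case:
    # Hypothetical stand-in for LLMTestCase.
    input: str
    actual_output: str


def build_judge_text(test_case: Case, params: List[Param]) -> str:
    # Same layout as in measure(): "<param>: <value> \n\n" for each field.
    text = ""
    for param in params:
        value = getattr(test_case, param.value)
        text += f"{param.value}: {value} \n\n"
    return text


def normalize_judge_score(
    raw_score: float, minimum_score: float = 0.5
) -> Tuple[float, bool]:
    # Rescale the assumed 0-10 score to [0, 1], then apply the threshold.
    score = raw_score / 10
    return score, score >= minimum_score
```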

diff --git a/deepeval/metrics/llm_eval_metric.py b/deepeval/metrics/llm_eval_metric.py

index db00cf30e..b7c8a0d73 100644

--- a/deepeval/metrics/llm_eval_metric.py

+++ b/deepeval/metrics/llm_eval_metric.py

@@ -8,6 +8,8 @@

from deepeval.chat_completion.retry import call_openai_with_retry

from pydantic import BaseModel

import openai

+from langchain.chat_models import ChatOpenAI

+from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

class LLMEvalMetricResponse(BaseModel):

@@ -20,15 +22,20 @@ def __init__(

name: str,

criteria: str,

evaluation_params: List[LLMTestCaseParams],

- model: Optional[str] = "gpt-4",

+ evaluation_steps: str = "",

+ model: Optional[str] = "gpt-4-1106-preview",

minimum_score: float = 0.5,

+ **kwargs,

):

self.criteria = criteria

self.name = name

self.model = model

- self.evaluation_steps = ""

+ self.evaluation_steps = evaluation_steps

self.evaluation_params = evaluation_params

self.minimum_score = minimum_score

+ self.deployment_id = None

+ if "deployment_id" in kwargs:

+ self.deployment_id = kwargs["deployment_id"]

@property

def __name__(self):

@@ -51,10 +58,9 @@ def measure(self, test_case: LLMTestCase):

self.evaluation_steps = self.generate_evaluation_steps()

score = self.evaluate(test_case)

- score = float(score) * 2 / 10

-

+ self.score = float(score) * 2 / 10

- self.success = score >= self.minimum_score

+ self.success = self.score >= self.minimum_score

- return score

+ return self.score

def is_successful(self):

return self.success

@@ -62,20 +68,16 @@ def is_successful(self):

def generate_evaluation_steps(self):

prompt: dict = evaluation_steps_template.format(criteria=self.criteria)

- res = call_openai_with_retry(

- lambda: openai.ChatCompletion.create(

- model=self.model,

- messages=[

- {

- "role": "system",

- "content": "You are a helpful assistant.",

- },

- {"role": "user", "content": prompt},

- ],

- )

+ model_kwargs = {}

+ if self.deployment_id is not None:

+ model_kwargs["deployment_id"] = self.deployment_id

+

+ chat_completion = ChatOpenAI(

+ model_name=self.model, model_kwargs=model_kwargs

)

- return res.choices[0].message.content

+ res = call_openai_with_retry(lambda: chat_completion.invoke(prompt))

+ return res.content

def evaluate(self, test_case: LLMTestCase):

text = """"""

@@ -89,24 +91,29 @@ def evaluate(self, test_case: LLMTestCase):

text=text,

)

+ model_kwargs = {

+ "top_p": 1,

+ "frequency_penalty": 0,

+ "stop": None,

+ "presence_penalty": 0,

+ }

+ if self.deployment_id is not None:

+ model_kwargs["deployment_id"] = self.deployment_id

+

+ chat_completion = ChatOpenAI(

+ model_name=self.model, max_tokens=5, n=20, model_kwargs=model_kwargs

+ )

+

res = call_openai_with_retry(

- lambda: openai.ChatCompletion.create(

- model=self.model,

- messages=[{"role": "system", "content": prompt}],

- max_tokens=5,

- top_p=1,

- frequency_penalty=0,

- presence_penalty=0,

- stop=None,

- # logprobs=5,

- n=20,

+ lambda: chat_completion.generate_prompt(

+ [chat_completion._convert_input(prompt)]

)

)

total_scores = 0

count = 0

- for content in res.choices:

+ for content in res.generations[0]:

try:

total_scores += float(content.message.content)

count += 1
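`LLMEvalMetric.evaluate()` requests 20 short completions (`n=20`, `max_tokens=5`) and averages those that parse as numbers; `measure()` then maps the result with `* 2 / 10`, which suggests a 0 to 5 rating scale. The hunk ends before the `except` branch, so the sketch below assumes non-numeric completions are simply skipped; both function names are hypothetical.

```python
from typing import Iterable, Optional


def average_sampled_scores(samples: Iterable[str]) -> Optional[float]:
    """Average the completions that parse as numbers; skip the rest."""
    total, count = 0.0, 0
    for content in samples:
        try:
            total += float(content)
            count += 1
        except ValueError:
            continue  # e.g. the model answered with words instead of a digit
    return total / count if count else None


def to_unit_interval(raw_score: float) -> float:
    # Same mapping as measure(): `* 2 / 10` takes a 0-5 score onto [0, 1].
    return float(raw_score) * 2 / 10
```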

diff --git a/deepeval/metrics/overall_score.py b/deepeval/metrics/overall_score.py

deleted file mode 100644

index a71d1da30..000000000

--- a/deepeval/metrics/overall_score.py

+++ /dev/null

@@ -1,76 +0,0 @@

-"""Overall Score

-"""

-

-from deepeval.test_case import LLMTestCase

-from deepeval.singleton import Singleton

-from deepeval.test_case import LLMTestCase

-from deepeval.metrics.answer_relevancy import AnswerRelevancyMetric

-from deepeval.metrics.conceptual_similarity import ConceptualSimilarityMetric

-from deepeval.metrics.factual_consistency import FactualConsistencyMetric

-from deepeval.metrics.base_metric import BaseMetric

-from deepeval.evaluator import assert_test

-

-

-class OverallScoreMetric(BaseMetric, metaclass=Singleton):

- def __init__(self, minimum_score: float = 0.5):

- self.minimum_score = minimum_score

- self.answer_relevancy = AnswerRelevancyMetric()

- self.factual_consistency_metric = FactualConsistencyMetric()

- self.conceptual_similarity_metric = ConceptualSimilarityMetric()

-

- def __call__(self, test_case: LLMTestCase):

- score = self.measure(test_case=test_case)

- self.success = score > self.minimum_score

- return score

-

- def measure(

- self,

- test_case: LLMTestCase,

- ) -> float:

- metadata = {}

- if test_case.context is not None:

- factual_consistency_score = self.factual_consistency_metric.measure(

- test_case

- )

- metadata["factual_consistency"] = float(factual_consistency_score)

-

- if test_case.input is not None:

- answer_relevancy_score = self.answer_relevancy.measure(test_case)

- metadata["answer_relevancy"] = float(answer_relevancy_score)

-

- if test_case.expected_output is not None:

- conceptual_similarity_score = (

- self.conceptual_similarity_metric.measure(test_case)

- )

- metadata["conceptual_similarity"] = float(

- conceptual_similarity_score

- )

-

- overall_score = sum(metadata.values()) / len(metadata)

-

- self.success = bool(overall_score > self.minimum_score)

- return overall_score

-

- def is_successful(self) -> bool:

- return self.success

-

- @property

- def __name__(self):

- return "Overall Score"

-

-

-def assert_overall_score(

- query: str,

- output: str,

- expected_output: str,

- context: str,

- minimum_score: float = 0.5,

-):

- metric = OverallScoreMetric(minimum_score=minimum_score)

- test_case = LLMTestCase(

- input=query,

- actual_output=output,

- expected_output=expected_output,

- context=context,

- )

- assert_test(test_case, metrics=[metric])

diff --git a/deepeval/metrics/ragas_metric.py b/deepeval/metrics/ragas_metric.py

index 5e53a1452..989011bbe 100644

--- a/deepeval/metrics/ragas_metric.py

+++ b/deepeval/metrics/ragas_metric.py

@@ -55,7 +55,7 @@ def measure(self, test_case: LLMTestCase):

context_relevancy_score = scores["context_relevancy"]

self.success = context_relevancy_score >= self.minimum_score

self.score = context_relevancy_score

- return context_relevancy_score

+ return self.score

def is_successful(self):

return self.success

@@ -108,7 +108,7 @@ def measure(self, test_case: LLMTestCase):

answer_relevancy_score = scores["answer_relevancy"]

self.success = answer_relevancy_score >= self.minimum_score

self.score = answer_relevancy_score

- return answer_relevancy_score

+ return self.score

def is_successful(self):

return self.success

@@ -159,7 +159,7 @@ def measure(self, test_case: LLMTestCase):

faithfulness_score = scores["faithfulness"]

self.success = faithfulness_score >= self.minimum_score

self.score = faithfulness_score

- return faithfulness_score

+ return self.score

def is_successful(self):

return self.success

@@ -212,7 +212,7 @@ def measure(self, test_case: LLMTestCase):

context_recall_score = scores["context_recall"]

self.success = context_recall_score >= self.minimum_score

self.score = context_recall_score

- return context_recall_score

+ return self.score

def is_successful(self):

return self.success

@@ -265,7 +265,7 @@ def measure(self, test_case: LLMTestCase):

harmfulness_score = scores["harmfulness"]

self.success = harmfulness_score >= self.minimum_score

self.score = harmfulness_score

- return harmfulness_score

+ return self.score

def is_successful(self):

return self.success

@@ -317,7 +317,7 @@ def measure(self, test_case: LLMTestCase):

coherence_score = scores["coherence"]

self.success = coherence_score >= self.minimum_score

self.score = coherence_score

- return coherence_score

+ return self.score

def is_successful(self):

return self.success

@@ -369,7 +369,7 @@ def measure(self, test_case: LLMTestCase):

maliciousness_score = scores["maliciousness"]

self.success = maliciousness_score >= self.minimum_score

self.score = maliciousness_score

- return maliciousness_score

+ return self.score

def is_successful(self):

return self.success

@@ -421,7 +421,7 @@ def measure(self, test_case: LLMTestCase):

correctness_score = scores["correctness"]

self.success = correctness_score >= self.minimum_score

self.score = correctness_score

- return correctness_score

+ return self.score

def is_successful(self):

return self.success

@@ -473,7 +473,7 @@ def measure(self, test_case: LLMTestCase):

conciseness_score = scores["conciseness"]

self.success = conciseness_score >= self.minimum_score

self.score = conciseness_score

- return conciseness_score

+ return self.score

def is_successful(self):

return self.success

@@ -540,7 +540,7 @@ def measure(self, test_case: LLMTestCase):

# 'answer_relevancy': 0.874}

self.success = ragas_score >= self.minimum_score

self.score = ragas_score

- return ragas_score

+ return self.score

def is_successful(self):

return self.success

@@ -548,16 +548,3 @@ def is_successful(self):

@property

def __name__(self):

return "RAGAS"

-

-

-def assert_ragas(

- test_case: LLMTestCase,

- metrics: List[str] = None,

- minimum_score: float = 0.3,

-):

- """Asserts if the Ragas score is above the minimum score"""

- metric = RagasMetric(metrics, minimum_score)

- score = metric.measure(test_case)

- assert (

- score >= metric.minimum_score

- ), f"Ragas score {score} is below the minimum score {metric.minimum_score}"

diff --git a/deepeval/metrics/ranking_similarity.py b/deepeval/metrics/ranking_similarity.py

deleted file mode 100644

index 426ba5112..000000000

--- a/deepeval/metrics/ranking_similarity.py

+++ /dev/null

@@ -1,205 +0,0 @@

-# Testing for ranking similarity

-from typing import Any, List, Optional, Union

-

-import numpy as np

-from tqdm import tqdm

-

-from ..test_case import LLMTestCase

-from .base_metric import BaseMetric

-from ..evaluator import assert_test

-

-

-class RBO:

- """

- This class will include some similarity measures between two different

- ranked lists.

- """

-

- def __init__(

- self,

- S: Union[List, np.ndarray],

- T: Union[List, np.ndarray],

- verbose: bool = False,

- ) -> None:

- """

- Initialize the object with the required lists.

- Examples of lists:

- S = ["a", "b", "c", "d", "e"]

- T = ["b", "a", 1, "d"]

-

- Both lists reflect the ranking of the items of interest, for example,

- list S tells us that item "a" is ranked first, "b" is ranked second,

- etc.

-

- Args:

- S, T (list or numpy array): lists with alphanumeric elements. They

- could be of different lengths. Both of the them should be

- ranked, i.e., each element"s position reflects its respective

- ranking in the list. Also we will require that there is no

- duplicate element in each list.

- verbose: If True, print out intermediate results. Default to False.

- """

-

- assert type(S) in [list, np.ndarray]

- assert type(T) in [list, np.ndarray]

-

- assert len(S) == len(set(S))

- assert len(T) == len(set(T))

-

- self.S, self.T = S, T

- self.N_S, self.N_T = len(S), len(T)

- self.verbose = verbose

- self.p = 0.5 # just a place holder

-

- def assert_p(self, p: float) -> None:

- """Make sure p is between (0, 1), if so, assign it to self.p.

-

- Args:

- p (float): The value p.

- """

- assert 0.0 < p < 1.0, "p must be between (0, 1)"

- self.p = p

-

- def _bound_range(self, value: float) -> float:

- """Bounds the value to [0.0, 1.0]."""

-

- try:

- assert 0 <= value <= 1 or np.isclose(1, value)

- return value

-

- except AssertionError:

- print("Value out of [0, 1] bound, will bound it.")

- larger_than_zero = max(0.0, value)

- less_than_one = min(1.0, larger_than_zero)

- return less_than_one

-

- def rbo(

- self,

- k: Optional[float] = None,

- p: float = 1.0,

- ext: bool = False,

- ) -> float:

- """

- This the weighted non-conjoint measures, namely, rank-biased overlap.

- Unlike Kendall tau which is correlation based, this is intersection

- based.

- The implementation if from Eq. (4) or Eq. (7) (for p != 1) from the

- RBO paper: http://www.williamwebber.com/research/papers/wmz10_tois.pdf

-

- If p = 1, it returns to the un-bounded set-intersection overlap,

- according to Fagin et al.

- https://researcher.watson.ibm.com/researcher/files/us-fagin/topk.pdf

-

- The fig. 5 in that RBO paper can be used as test case.

- Note there the choice of p is of great importance, since it

- essentially control the "top-weightness". Simply put, to an extreme,

- a small p value will only consider first few items, whereas a larger p

- value will consider more items. See Eq. (21) for quantitative measure.

-

- Args:

- k: The depth of evaluation.

- p: Weight of each agreement at depth d:

- p**(d-1). When set to 1.0, there is no weight, the rbo returns

- to average overlap.

- ext: If True, we will extrapolate the rbo, as in Eq. (23).

-

- Returns:

- The rbo at depth k (or extrapolated beyond).

- """

-

- if not self.N_S and not self.N_T:

- return 1 # both lists are empty

-

- if not self.N_S or not self.N_T:

- return 0 # one list empty, one non-empty

-

- if k is None:

- k = float("inf")

- k = min(self.N_S, self.N_T, k)

-

- # initialize the agreement and average overlap arrays

- A, AO = [0] * k, [0] * k

- if p == 1.0:

- weights = [1.0 for _ in range(k)]

- else:

- self.assert_p(p)

- weights = [1.0 * (1 - p) * p**d for d in range(k)]

-

- # using dict for O(1) look up

- S_running, T_running = {self.S[0]: True}, {self.T[0]: True}

- A[0] = 1 if self.S[0] == self.T[0] else 0

- AO[0] = weights[0] if self.S[0] == self.T[0] else 0

-

- for d in tqdm(range(1, k), disable=~self.verbose):

- tmp = 0

- # if the new item from S is in T already

- if self.S[d] in T_running:

- tmp += 1

- # if the new item from T is in S already

- if self.T[d] in S_running:

- tmp += 1

- # if the new items are the same, which also means the previous

- # two cases did not happen

- if self.S[d] == self.T[d]:

- tmp += 1

-

- # update the agreement array

- A[d] = 1.0 * ((A[d - 1] * d) + tmp) / (d + 1)

-

- # update the average overlap array

- if p == 1.0:

- AO[d] = ((AO[d - 1] * d) + A[d]) / (d + 1)

- else: # weighted average

- AO[d] = AO[d - 1] + weights[d] * A[d]

-

- # add the new item to the running set (dict)

- S_running[self.S[d]] = True

- T_running[self.T[d]] = True

-

- if ext and p < 1:

- return self._bound_range(AO[-1] + A[-1] * p**k)

-

- return self._bound_range(AO[-1])

-

-

-class RankingSimilarity(BaseMetric):

- def __init__(self, minimum_score: float = 0.1):

- self.minimum_score = minimum_score

-

- def __call__(self, test_case: LLMTestCase):

- score = self.measure(test_case.retrieval_context, test_case.context)

- return score

-

- def measure(self, test_case: LLMTestCase):

- list_1 = [str(x) for x in test_case.retrieval_context]

- list_2 = [str(x) for x in test_case.context]

- scorer = RBO(list_1, list_2)

- result = scorer.rbo(p=0.9, ext=True)

- self.success = result > self.minimum_score

- return result

-

- def is_successful(self):

- return self.success

-

- @property

- def __name__(self):

- return "Ranking Similarity"

-

-

-def assert_ranking_similarity(

- input: str,

- actual_output: str,

- context: List[str],

- retrieval_context: List[str],

- minimum_score,

- expected_output: str = "-",

-):

- scorer = RankingSimilarity(minimum_score=minimum_score)

- test_case = LLMTestCase(

- input=input,

- actual_output=actual_output,

- expected_output=expected_output,

- context=context,

- retrieval_context=retrieval_context,

- )

- assert_test(test_case, [scorer])

diff --git a/deepeval/metrics/scoring.py b/deepeval/metrics/scoring.py

index 4278c7e25..1ea499cfa 100644

--- a/deepeval/metrics/scoring.py

+++ b/deepeval/metrics/scoring.py

@@ -7,6 +7,7 @@

from deepeval.metrics._summac_model import SummaCZS

+# TODO: More scores are to be added

class Scorer:

"""This class calculates various Natural Language Processing (NLP) evaluation score.

@@ -14,8 +15,6 @@ class Scorer:

Which also uses an external model (BERTScore) in the scoring logic.

"""

- # Todo: More metrics are to be added

-

@classmethod

def rouge_score(

cls, target: str, prediction: str, score_type: str

@@ -208,7 +207,54 @@ def PII_score(

raise NotImplementedError()

@classmethod

- def toxic_score(

- cls, target: str, prediction: str, model: Optional[Any] = None

- ) -> float:

- raise NotImplementedError()

+ def neural_toxic_score(

+ cls, prediction: str, model: Optional[str] = None

+ ) -> tuple[float, dict]:

+ """

+ Calculate the toxicity score of a given text prediction using the Detoxify model.

+

+ Args:

+ prediction (str): The text prediction to evaluate for toxicity.

+ model (Optional[str], optional): The variant of the Detoxify model to use.

+ Available variants: 'original', 'unbiased', 'multilingual'.

+ If not provided, the 'original' variant is used by default.

+

+ Returns:

+ tuple[float, dict]: The mean toxicity score, ranging from 0 (non-toxic) to 1 (highly toxic),

+ and a dictionary mapping each toxicity type to its score.

+

+ The mean is taken over every entry of that dictionary, whose keys depend on the model variant.

+ Examples:

+ If model is 'original', the dict has the following keys:

+ - 'toxicity'

+ - 'severe_toxicity'

+ - 'obscene'

+ - 'threat'

+ - 'insult'

+ - 'identity_attack'

+

+ If model is 'unbiased', the dict has the same keys as 'original', plus

+ `sexual_explicit`.

+

+ If model is 'multilingual', the dict has the same keys as 'unbiased'.

+ """

+ try:

+ from detoxify import Detoxify

+ except ImportError as e:

+ raise ImportError("neural_toxic_score requires detoxify: pip install detoxify") from e

+

+ device = "cuda" if torch.cuda.is_available() else "cpu"

+ if model is not None:

+ assert model in [

+ "original",

+ "unbiased",

+ "multilingual",

+ ], "Invalid model. Available variants: original, unbiased, multilingual"

+ detoxify_model = Detoxify(model, device=device)

+ else:

+ detoxify_model = Detoxify("original", device=device)

+ toxicity_score_dict = detoxify_model.predict(prediction)

+ mean_toxicity_score = sum(list(toxicity_score_dict.values())) / len(

+ toxicity_score_dict

+ )

+ return mean_toxicity_score, toxicity_score_dict
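`neural_toxic_score()` reduces Detoxify's per-category output to an unweighted mean and returns both values. The sketch below reproduces only that reduction on a hand-made dictionary (the values are illustrative, not real Detoxify output), so it runs without `detoxify` or `torch`; `mean_toxicity` is a hypothetical name.

```python
from typing import Dict, Tuple


def mean_toxicity(scores: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Unweighted mean over all toxicity categories, plus the per-category dict."""
    if not scores:
        raise ValueError("expected at least one toxicity category")
    return sum(scores.values()) / len(scores), scores
```

Because the mean is unweighted, a text that scores high on a single category such as `threat` can still end up with a low mean; the returned dictionary keeps that signal visible.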

diff --git a/deepeval/metrics/toxic_classifier.py b/deepeval/metrics/toxic_classifier.py

index 71fd5a8e1..48a8eab61 100644

--- a/deepeval/metrics/toxic_classifier.py

+++ b/deepeval/metrics/toxic_classifier.py

@@ -7,7 +7,6 @@

from deepeval.singleton import Singleton

from deepeval.test_case import LLMTestCase, LLMTestCaseParams

from deepeval.metrics.base_metric import BaseMetric

-from deepeval.evaluator import assert_test

class DetoxifyModel(metaclass=Singleton):

@@ -74,7 +73,8 @@ def measure(self, test_case: LLMTestCase):

# Check if the average score meets the minimum requirement

self.success = average_score >= self.minimum_score

- return average_score

+ self.score = average_score

+ return self.score

def is_successful(self):

return self.success

@@ -82,16 +82,3 @@ def is_successful(self):

@property

def __name__(self):

return "Toxicity"

-

-

-def assert_non_toxic(

- evaluation_params: List[LLMTestCaseParams],

- input: str,

- actual_output: str,

- minimum_score: float = 0.5,

-):

- metric = NonToxicMetric(

- evaluation_params=evaluation_params, minimum_score=minimum_score

- )

- test_case = LLMTestCase(input=input, actual_output=actual_output)

- assert_test(test_case, [metric])

diff --git a/deepeval/old-dataset.py b/deepeval/old-dataset.py

new file mode 100644

index 000000000..4433fe27b

--- /dev/null

+++ b/deepeval/old-dataset.py

@@ -0,0 +1,593 @@

+"""Class for Evaluation Datasets

+"""

+import json

+import random

+import time

+from collections import UserList

+from datetime import datetime

+from typing import Any, Callable, List, Optional

+

+from tabulate import tabulate

+

+from deepeval.evaluator import run_test

+from deepeval.metrics.base_metric import BaseMetric

+from deepeval.test_case import LLMTestCase

+from dataclasses import asdict

+

+

+class EvaluationDataset(UserList):

+ """Class for Evaluation Datasets - which are a list of test cases"""

+

+ def __init__(self, test_cases: List[LLMTestCase]):

+ self.data: List[LLMTestCase] = test_cases

+

+ @classmethod

+ def from_csv(

+ cls, # Use 'cls' instead of 'self' for class methods

+ csv_filename: str,

+ query_column: Optional[str] = None,

+ expected_output_column: Optional[str] = None,

+ context_column: Optional[str] = None,

+ output_column: Optional[str] = None,

+ id_column: str = None,

+ metrics: List[BaseMetric] = None,

+ ):

+ import pandas as pd

+

+ df = pd.read_csv(csv_filename)

+ if query_column is not None and query_column in df.columns:

+ querys = df[query_column].values

+ else:

+ querys = [None] * len(df)

+ if (

+ expected_output_column is not None

+ and expected_output_column in df.columns

+ ):

+ expected_outputs = df[expected_output_column].values

+ else:

+ expected_outputs = [None] * len(df)

+ if context_column is not None and context_column in df.columns:

+ contexts = df[context_column].values

+ else:

+ contexts = [None] * len(df)

+ if output_column is not None and output_column in df.columns:

+ outputs = df[output_column].values

+ else:

+ outputs = [None] * len(df)

+ if id_column is not None:

+ ids = df[id_column].values

+ else:

+ ids = [None] * len(df)

+

+ # Collect the test cases in a local list instead of assigning to the class

+ test_cases = []

+

+ for i, query_data in enumerate(querys):

+ test_cases.append(

+ LLMTestCase(

+ input=query_data,

+ expected_output=expected_outputs[i],

+ context=contexts[i],

+ id=ids[i] if id_column else None,

+ actual_output=outputs[i] if output_column else None,

+ )

+ )

+ return cls(test_cases)

+

+ def from_test_cases(self, test_cases: list):

+ self.data = test_cases

+

+ @classmethod

+ def from_hf_dataset(

+ cls,

+ dataset_name: str,

+ split: str,

+ query_column: str,

+ expected_output_column: str,

+ context_column: str = None,

+ output_column: str = None,

+ id_column: str = None,

+ ):

+ """

+ Load test cases from a HuggingFace dataset.

+

+ Args:

+ dataset_name (str): The name of the HuggingFace dataset to load.

+ split (str): The split of the dataset to load (e.g., 'train', 'test').

+ query_column (str): The column in the dataset corresponding to the query.

+ expected_output_column (str): The column in the dataset corresponding to the expected output.

+ context_column (str, optional): The column in the dataset corresponding to the context. Defaults to None.

+ output_column (str, optional): The column in the dataset corresponding to the output. Defaults to None.

+ id_column (str, optional): The column in the dataset corresponding to the ID. Defaults to None.

+

+ Returns:

+ EvaluationDataset: An instance of EvaluationDataset containing the loaded test cases.

+ """

+ try:

+ from datasets import load_dataset

+ except ImportError:

+ raise ImportError(

+ "The 'datasets' library is missing. Please install it using pip: pip install datasets"

+ )

+

+ hf_dataset = load_dataset(dataset_name, split=split)

+ test_cases = []

+

+ for i, row in enumerate(hf_dataset):

+ test_cases.append(

+ LLMTestCase(

+ input=row[query_column],

+ expected_output=row[expected_output_column],

+ context=row[context_column] if context_column else None,

+ actual_output=row[output_column] if output_column else None,

+ id=row[id_column] if id_column else None,

+ )

+ )

+ return cls(test_cases)

+

+ @classmethod

+ def from_json(

+ cls,

+ json_filename: str,

+ query_column: str,

+ expected_output_column: str,

+ context_column: str,

+ output_column: str,

+ id_column: str = None,

+ ):

+ """

+ Load test cases from JSON data in which each key maps to an array of column values, e.g.:

+ {

+ "query": ["What is the customer success number", "What is the customer success number"],

+ "context": ["Context 1", "Context 2"],

+ "output": ["Output 1", "Output 2"]

+ }

+

+ If the JSON data is a list of dictionaries, use from_json_list instead.

+ """