-

+

+

+

+

+

+

+

- + + Home + + + + + + + + + + + + +

- + + Tutorials + + + + + + + + + + + + +

- + + Benchmarks + + + + + + + + + +

- + + Projects + + + + + + + + + +

- + + Research Group + + + + +

Benchmarks

Large Language Models (LLM)

For running LLM benchmarks, see the MLC container documentation.

Small Language Models (SLM)

Small language models are generally defined as having fewer than 7B parameters (Llama-7B shown for reference).

For more data and info about running these models, see the SLM tutorial and MLC container documentation.

Vision Language Models (VLM)

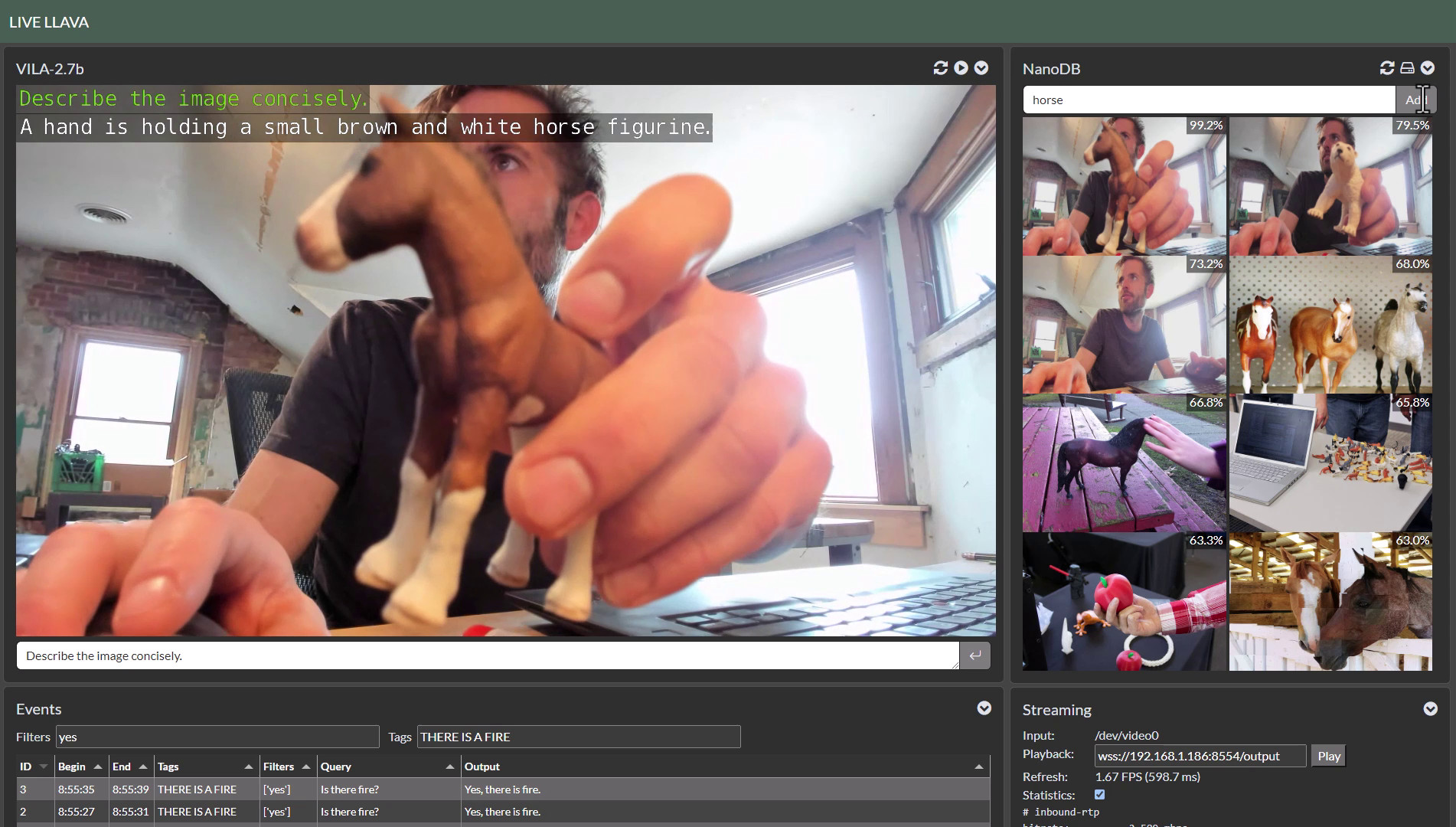

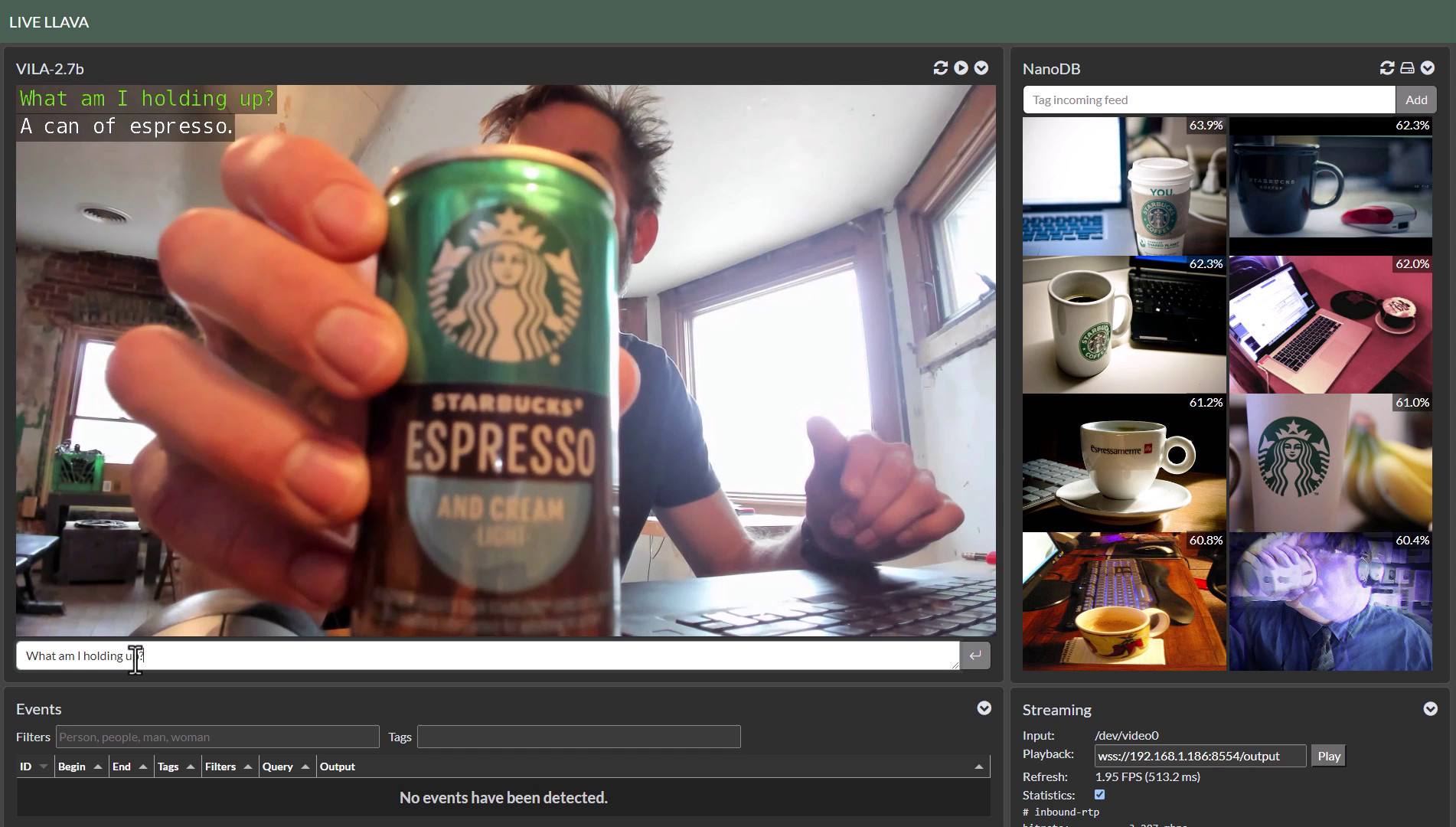

This measures the end-to-end pipeline performance for continuous streaming, as with Live Llava.

For more data and info about running these models, see the NanoVLM tutorial and local_llm documentation.

Vision Transformers (ViT)

ViT performance data from [1] [2] [3]

Stable Diffusion

Riva

For running Riva benchmarks, see ASR Performance and TTS Performance.

Vector Database

For running vector database benchmarks, see the NanoDB container documentation.

Please click the "Jetson Store" button to check availability on the next page.

| AI Perf | GPU | CPU | Memory | Storage |
|-|-|-|-|-|
| 275 TOPS | NVIDIA Ampere architecture with 2048 NVIDIA CUDA cores and 64 Tensor Cores | 12-core Arm Cortex-A78AE v8.2 64-bit CPU, 3MB L2 + 6MB L3 | 64GB 256-bit LPDDR5, 204.8 GB/s | 64GB eMMC 5.1 |
| 275 TOPS | NVIDIA Ampere architecture with 2048 NVIDIA CUDA cores and 64 Tensor Cores | 12-core Arm Cortex-A78AE v8.2 64-bit CPU, 3MB L2 + 6MB L3 | 32GB 256-bit LPDDR5, 204.8 GB/s | 64GB eMMC 5.1 |
| 40 TOPS | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores | 6-core Arm® Cortex®-A78AE v8.2 64-bit CPU, 1.5MB L2 + 4MB L3 | 8GB 128-bit LPDDR5, 68 GB/s | SD card slot and external NVMe via M.2 Key M |


Community Projects

Below, you'll find a collection of guides, tutorials, and articles contributed by the community showcasing the implementation of generative AI on the Jetson platform.

GitHub Japanese NMT Translation for Stable Diffusion (2-23-2024)

Toshihiko Aoki has created a prompt generator for stable-diffusion-webui that translates Japanese queries into English using a fine-tuned GPT-2 NMT model before feeding them into Stable Diffusion. Check out the full guide on GitHub under to-aoki/ja-tiny-sd-webui, including the training dataset and LoRA building!

GitHub JetBot Voice to Action Tools: Empowering Your ROS2 Robot with Voice Control (2-17-2024)

Jen Hung Ho created ROS2 nodes for ASR/TTS on Jetson Nano that can be used to control JetBot, including customizable voice commands and the execution of advanced actions. Check it out on GitHub under Jen-Hung-Ho/ros2_jetbot_tools and Jen-Hung-Ho/ros2_jetbot_voice and on the forums here.

Hackster ClearWater: Underwater Image Enhancement with Generative AI (2-16-2024)

Vy Pham has created a novel denoising pipeline using a custom-trained Transformer-based diffusion model and GAN upscaler for image enhancement, running on Jetson AGX Orin. It runs interactively in a Streamlit web UI for capturing photos and processing images and videos. Great work!

Hackster AI-Powered Application for the Blind and Visually Impaired (12-13-2023)

Nurgaliyev Shakhizat demonstrates a locally hosted Blind Assistant Device running on Jetson AGX Orin 64GB Developer Kit for real-time image-to-speech translation:

Find more resources about this project here: [Hackster] [GitHub]

Dave's Armoury Bringing GLaDOS to life with Robotics and AI (2-8-2024)

See how DIY robotics legend Dave Niewinski from davesarmoury.com brings GLaDOS to life using Jetson AGX Orin, running LLMs onboard alongside object and depth tracking, and Riva ASR/TTS with a custom-trained voice model for speech recognition and synthesis! The build uses a Unitree Z1 arm with 3D printing and a StereoLabs ZED2.

Find more resources about this project here: [Forums] [GitHub]

Hackster Seeed Studio's Local Voice Chatbot Puts a Speech-Recognizing LLaMa-2 LLM on Your Jetson (2-7-2024)

Seeed Studio has announced the launch of the Local Voice Chatbot, an NVIDIA Riva- and LLaMa-2-based large language model (LLM) chatbot with voice recognition capabilities, running entirely locally on NVIDIA Jetson devices, including the company's own reComputer range. Follow the step-by-step guide on the Seeed Studio wiki.

YouTube GenAI Nerds React - Insider Look at NVIDIA's Newest Generative AI (2-6-2024)

Watch this panel about the latest trends and tech in edge AI, featuring Kerry Shih from OStream, Jim Benson from JetsonHacks, and Dusty from NVIDIA.

NVIDIA Bringing Generative AI to Life with NVIDIA Jetson (11-7-2023)

Watch this webinar about deploying LLMs, VLMs, ViTs, and vector databases onboard Jetson Orin for building next-generation applications using Generative AI:

JetsonHacks Jetson AI Labs – Generative AI Playground (10-31-2023)

JetsonHacks publishes an insightful video that walks developers through the typical steps for running generative AI models on Jetson following this site's tutorials. The video shows the interaction with the LLaVA model.

Hackster Vision2Audio - Giving the blind an understanding through AI (10-15-2023)

Nurgaliyev Shakhizat demonstrates Vision2Audio running on Jetson AGX Orin 64GB Developer Kit to harness the power of LLaVA to help visually impaired people:

NVIDIA Generative AI Models at the Edge (10-19-2023)

Follow this walkthrough of the Jetson AI Lab tutorials along with coverage of the latest features and advances coming to JetPack 6 and beyond:

Technical Blog - https://developer.nvidia.com/blog/bringing-generative-ai-to-life-with-jetson/

Medium How to set up your Jetson device for LLM inference and fine-tuning (10-02-2023)

Michael Yuan's guide demonstrates how to set up the Jetson AGX Orin 64GB Developer Kit specifically for large language model (LLM) inference, highlighting the crucial role of GPUs and the cost-effectiveness of the Jetson AGX Orin for LLM tasks.

https://medium.com/@michaelyuan_88928/how-to-set-up-your-jetson-device-for-llm-inference-and-fine-tuning-682e36444d43

Hackster Getting Started with AI on Nvidia Jetson AGX Orin Dev Kit (09-16-2023)

Nurgaliyev Shakhizat demonstrates llamaspeak on Jetson AGX Orin 64GB Developer Kit in this Hackster post:

Hackster New AI Tool Is Generating a Lot of Buzz (09-13-2023)

Nick Bild provides an insightful introduction to the Jetson Generative AI Playground:

https://www.hackster.io/news/new-ai-tool-is-generating-a-lot-of-buzz-3cc5f23a3598

JetsonHacks Use These! Jetson Docker Containers Tutorial (09-04-2023)

JetsonHacks has an in-depth tutorial on how to use jetson-containers and even shows the text-generation-webui and stable-diffusion-webui containers in action!

Hackster LLaMa 2 LLMs w/ NVIDIA Jetson and textgeneration-web-ui (08-17-2023)

Paul DeCarlo demonstrates 13B and 70B parameter Llama 2 models running locally on Jetson AGX Orin 64GB Developer Kit in this Hackster post:

Hackster Running a ChatGPT-Like LLM-LLaMA2 on a Nvidia Jetson Cluster (08-14-2023)

Discover how to run a LLaMA-2 7B model on an NVIDIA Jetson cluster in this insightful tutorial by Nurgaliyev Shakhizat:

JetsonHacks Speech AI on NVIDIA Jetson Tutorial (08-07-2023)

JetsonHacks gives a nice introduction to the NVIDIA Riva SDK and demonstrates its automatic speech recognition (ASR) capability on Jetson Orin Nano Developer Kit.

Hackster LLM based Multimodal AI w/ Azure Open AI & NVIDIA Jetson (07-12-2023)

Learn how to harness the power of multimodal AI by running Microsoft JARVIS on a Jetson AGX Orin 64GB Developer Kit, enabling a wide range of AI tasks with ChatGPT-like capabilities, image generation, and more, in this comprehensive guide by Paul DeCarlo.

Hackster How to Run a ChatGPT-Like LLM on NVIDIA Jetson board (06-13-2023)

Nurgaliyev Shakhizat explores a voice AI assistant on Jetson using FastChat and VoskAPI.


Getting started


Hello AI World

Hello AI World is an in-depth tutorial series for DNN-based inference and training of image classification, object detection, semantic segmentation, and more. It is built on the jetson-inference library using TensorRT for optimized performance on Jetson.

It's highly recommended to familiarize yourself with the concepts of machine learning and computer vision before diving into the more advanced topics of generative AI here on the Jetson AI Lab. Many of these models will prove useful to have during your development.

HELLO AI WORLD >> https://github.com/dusty-nv/jetson-inference


Generative AI at the Edge

Bring generative AI to the world with NVIDIA® Jetson™

UP42 Python SDK

Access UP42's geospatial collections and processing workflows via Python.

UP42 in Python

Use UP42 via Python: order geospatial data, run analytic workflows, and generate insights.

Python ecosystem

Use UP42 together with your preferred Python libraries.

Visualizations

Interactive maps and visualizations. Ideal to use with Jupyter notebooks.


Jetson AI Lab Research Group

The Jetson AI Lab Research Group is a global collective for advancing open-source Edge ML, open to anyone to join and collaborate with others from the community and leverage each other's work. Our goal is to use advanced AI for good in real-world applications in accessible and responsible ways. By coordinating together as a group, we can keep up with the rapidly evolving pace of AI and more quickly arrive at deploying intelligent multimodal agents and autonomous robots into the field.

There are virtual meetings that anyone is welcome to join, offline discussion on the Jetson Projects forum, and guidelines for upstreaming open-source contributions.

Next Meeting

The first team meeting is on Wednesday, April 3rd at 9am PST - see the calendar invite below or click here to attend!

Topics of Interest

These are some initial research topics for us to discuss and investigate. This list will vary over time as experiments evolve and the SOTA progresses:

- Controller LLMs for dynamic pipeline code generation
- Fine-tuning LLM/VLM onboard Jetson AGX Orin 64GB
- HomeAssistant.io integration for smart home [1] [2]
- Continuous multi-image VLM streaming and change detection
- Recurrent LLM architectures (Mamba, RWKV, etc.) [1]
- Lightweight low-memory streaming ASR/TTS models
- Diffusion models for image processing and enhancement
- Time Series Forecasting with Transformers [1] [2]
- Guidance, grammars, and guardrails for constrained output
- Inline LLM function calling / plugins from API definitions
- ML DevOps, edge deployment, and orchestration
- Robotics, IoT, and cyberphysical systems integration

New topics can be raised to the group either during the meetings or on the forums (people are welcome to work on whatever they want, of course).

Contribution Guidelines

When experiments are successful, ideally the results will be packaged in such a way that they are easily reusable for others to integrate into their own projects:

- Open-source libraries & code on GitHub
- Models on HuggingFace Hub
- Containers provided by jetson-containers
- Documentation / tutorials on Jetson AI Lab
- Hackster.io for hardware-centric builds

Ongoing technical discussions can occur on the Jetson Projects forum (or GitHub Issues), with status updates given during the meetings.

Meeting Schedule

We'll aim to meet monthly or bi-weekly as a team in virtual meetings that anyone is welcome to join and speak during. We'll discuss the latest updates and experiments that we want to explore. Please remain courteous to others during the calls. We'll stick around afterwards for anyone who has questions or didn't get the chance to be heard.

Wednesday, April 3 at 9am PST (4/3/24)

- Microsoft Teams - Meeting Link
- Meeting ID: 223 573 467 074
- Passcode: 6ybvCg
- Outlook Invite: Jetson AI Lab Research Group (4324).ics
- Agenda:
  - Intros / GTC Recap
  - Home Assistant Integration (forum discussion)
  - Controller Agent LLM (forum discussion)
  - ML DevOps, Containers, Core Inferencing (forum discussion)
  - Open Q&A

The meetings will be recorded and posted so that anyone unable to attend live will be able to watch them afterwards.

Active Members

Below are some of the sustaining members of the group who have been working on generative AI in edge computing:



- Dustin Franklin, NVIDIA Principal Engineer | Pittsburgh, PA (jetson-inference, jetson-containers)
- Nurgaliyev Shakhizat Institute of Smart Systems and AI | Kazakhstan (Assistive Devices, Vision2Audio, HPEC Clusters)
- Kris Kersey, Kersey Fabrications Embedded Software Engineer | Atlanta, GA (The OASIS Project, AR/VR, 3D Fabrication)
- Johnny N\u00fa\u00f1ez Cano PhD Researcher in CV/AI | Barcelona, Spain (Recurrent LLMs, Pose & Behavior Analysis)
- Doruk S\u00f6nmez, Open Zeka Intelligent Video Analytics Engineer | Turkey (NVIDIA DLI Certified Instructor, IVA, VLM)
- Akash James, Spark Cognition AI Architect, UC Berkeley Researcher | Oakland (NVIDIA AI Ambassador, Personal Assistants)
- Mieszko Syty, MS/1 Design AI/ML Engineer | Warsaw, Poland (LLM, Home Assistants, ML DevOps)
- Jim Benson, JetsonHacks DIY Extraordinaire | Los Angeles, CA (AI in Education, RACECAR/J)
- Chitoku Yato, NVIDIA Jetson AI DevTech | Santa Clara, CA (JetBot, JetRacer, MinDisk, Containers)
- Dana Sheahen, NVIDIA DLI Curriculum Developer | Santa Clara, CA (AI in Education, Jetson AI Fundamentals)
- Sammy Ochoa, NVIDIA Jetson AI DevTech | Austin, TX (Metropolis Microservices)
- John Welsh, NVIDIA (NanoOWL, NanoSAM, JetBot, JetRacer, torch2trt, trt_pose, Knowledge Distillation)
- Dave Niewinski Dave's Armoury | Waterloo, Ontario (GLaDOS, Fetch, Offroad La-Z-Boy, KUKA Bot)
- Gary Hilgemann, REBOTNIX CEO & AI Roboticist | L\u00fcnen, Germany (GUSTAV, SPIKE, VisionTools, GenAI)
- Elaine Wu, Seeed Studio AI & Robotics Partnerships | Shenzhen, China (reComputer, YOLOv8, LocalJARVIS, Voice Bot)
- Patty Delafuente, NVIDIA Data Scientist & UMBC PhD Student | MD (AI in Education, DLI Robotics Teaching Kit)
- Song Han, MIT HAN Lab NVIDIA Research | Cambridge, MA (Efficient Large Models, AWQ, VILA)
- Bryan Hughes, Mimzy AI Founder, Entrepreneur | SF Bay Area (Multimodal Assistants, AI at the Edge)
- Tianqi Chen, CMU Catalyst OctoML, CTO | Seattle, WA (MLC, Apache TVM, XGBoost)
- Michael Gr\u00fcner, RidgeRun Team Lead / Architect | Costa Rica (Embedded Vision & AI, Multimedia)

Anyone is welcome to join this group after contributing; just open a PR against the site repo with your info \ud83d\ude0a

"},{"location":"tips_ram-optimization.html","title":"RAM Optimization","text":"Running an LLM requires a large amount of RAM.

This is especially true on Jetson Orin Nano, which has only 8GB of RAM, so it is crucial to leave as much RAM as possible available for models.

Here we share a couple of ways to optimize the system RAM usage.

"},{"location":"tips_ram-optimization.html#disabling-the-desktop-gui","title":"Disabling the Desktop GUI","text":"If you use your Jetson remotely through SSH, you can disable the Ubuntu desktop GUI. This will free up the extra memory that the window manager and desktop use (around 800MB for Unity/GNOME).

You can disable the desktop temporarily, run commands in the console, and then re-start the desktop when desired:

$ sudo init 3 # stop the desktop\n# log your user back into the console (Ctrl+Alt+F1, F2, etc.)\n$ sudo init 5 # restart the desktop\nIf you wish to make this persistent across reboots, you can use the following commands to change the boot-up behavior:

-

To disable desktop on boot

sudo systemctl set-default multi-user.target\n -

To enable desktop on boot

sudo systemctl set-default graphical.target\n
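To see how much memory a change like this actually frees, you can compare the available memory before and after making it. The following is a minimal sketch rather than part of the original tips: it assumes the standard Linux /proc/meminfo layout, and the function names parse_meminfo and available_mb are hypothetical.

```python
import os


def parse_meminfo(text):
    # Parse /proc/meminfo-style text into a dict of {field name: value in kB}.
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(':')
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def available_mb(text):
    # MemAvailable is reported in kB; convert it to MB.
    return parse_meminfo(text)['MemAvailable'] / 1024


if __name__ == '__main__' and os.path.exists('/proc/meminfo'):
    with open('/proc/meminfo') as f:
        print(f'{available_mb(f.read()):.0f} MB available')
```

Running it once with the desktop active and once after stopping it gives a rough figure for the memory recovered on your own board.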

sudo systemctl disable nvargus-daemon.service\nIf you're building containers or working with large models, it's advisable to mount swap (typically sized in proportion to the amount of memory on the board). If you have NVMe SSD storage available, it's preferred to allocate the swap file on the NVMe SSD.

Run these commands to disable ZRAM and create a swap file:

sudo systemctl disable nvzramconfig\nsudo fallocate -l 16G /ssd/16GB.swap\nsudo mkswap /ssd/16GB.swap\nsudo swapon /ssd/16GB.swap\nThen add the following line to the end of /etc/fstab to make the change persistent:

/ssd/16GB.swap none swap sw 0 0\nOnce you have set up your Jetson, either by flashing the latest Jetson Linux (L4T) BSP or by flashing the SD card with the whole JetPack image, and before you start testing the generative AI applications using jetson-containers, make sure you have plenty of storage space for all the containers and models you will download.

We are going to show how you can install an SSD on your Jetson and set it up for Docker.

"},{"location":"tips_ssd-docker.html#ssd","title":"SSD","text":""},{"location":"tips_ssd-docker.html#physical-installation","title":"Physical installation","text":"- Unplug power and any peripherals from the Jetson developer kit.

- Physically install an NVMe SSD card on the carrier board of your Jetson developer kit, making sure to properly seat the connector and secure with the screw.

- Reconnect any peripherals, and then reconnect the power supply to turn on the Jetson developer kit.

-

Once the system is up, verify that your Jetson identifies a new memory controller on the PCI bus:

lspci\nThe output should look like the following:

0007:01:00.0 Non-Volatile memory controller: Marvell Technology Group Ltd. Device 1322 (rev 02)\n

-

Run lsblk to find the device name.

lsblk\nThe output should look like the following:

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT\nloop0 7:0 0 16M 1 loop \nmmcblk1 179:0 0 59.5G 0 disk \n\u251c\u2500mmcblk1p1 179:1 0 58G 0 part /\n\u251c\u2500mmcblk1p2 179:2 0 128M 0 part \n\u251c\u2500mmcblk1p3 179:3 0 768K 0 part \n\u251c\u2500mmcblk1p4 179:4 0 31.6M 0 part \n\u251c\u2500mmcblk1p5 179:5 0 128M 0 part \n\u251c\u2500mmcblk1p6 179:6 0 768K 0 part \n\u251c\u2500mmcblk1p7 179:7 0 31.6M 0 part \n\u251c\u2500mmcblk1p8 179:8 0 80M 0 part \n\u251c\u2500mmcblk1p9 179:9 0 512K 0 part \n\u251c\u2500mmcblk1p10 179:10 0 64M 0 part \n\u251c\u2500mmcblk1p11 179:11 0 80M 0 part \n\u251c\u2500mmcblk1p12 179:12 0 512K 0 part \n\u251c\u2500mmcblk1p13 179:13 0 64M 0 part \n\u2514\u2500mmcblk1p14 179:14 0 879.5M 0 part \nzram0 251:0 0 1.8G 0 disk [SWAP]\nzram1 251:1 0 1.8G 0 disk [SWAP]\nzram2 251:2 0 1.8G 0 disk [SWAP]\nzram3 251:3 0 1.8G 0 disk [SWAP]\nnvme0n1 259:0 0 238.5G 0 disk \nIdentify the device corresponding to your SSD. In this case, it is

nvme0n1. -

Format the SSD, create a mount point, and mount it to the filesystem.

sudo mkfs.ext4 /dev/nvme0n1\nYou can choose any name for the mount point directory. We use /ssd here, but in jetson-containers' setup.md documentation, /mnt is used.

sudo mkdir /ssd\nsudo mount /dev/nvme0n1 /ssd\n -

In order to ensure that the mount persists after boot, add an entry to the fstab file:

First, identify the UUID for your SSD:

lsblk -f\nThen, add a new entry to the fstab file:

sudo vi /etc/fstab\nInsert the following line, replacing the UUID with the value found from lsblk -f:

UUID=************-****-****-****-******** /ssd/ ext4 defaults 0 2\n -

Finally, change the ownership of the /ssd directory.

sudo chown ${USER}:${USER} /ssd\n
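The fstab entry described in the steps above has six whitespace-separated fields: device, mount point, filesystem type, mount options, dump flag, and fsck pass order. As a minimal sketch (the helper names fstab_entry and has_mount are hypothetical, and nothing here writes to /etc/fstab), the line can be built from a UUID and checked against an existing file so that it is not added twice:

```python
def fstab_entry(uuid, mount_point='/ssd', fs_type='ext4'):
    # Fields: device, mount point, filesystem type, options, dump, fsck pass.
    return f'UUID={uuid} {mount_point} {fs_type} defaults 0 2'


def has_mount(fstab_text, mount_point):
    # True if a non-comment fstab line already uses this mount point.
    for line in fstab_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and not fields[0].startswith('#'):
            if fields[1].rstrip('/') == mount_point.rstrip('/'):
                return True
    return False


if __name__ == '__main__':
    # Placeholder UUID for illustration; use the value reported by lsblk -f.
    print(fstab_entry('00000000-0000-0000-0000-000000000000'))
```

Printing the line and pasting it into the editor by hand keeps the change reviewable, which matters because a malformed fstab entry can interfere with booting.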

-

Install the nvidia-container package.

Note: If you used an NVIDIA-supplied SD card image to flash your SD card, all necessary JetPack components (including nvidia-container) and Docker are already pre-installed, so this step can be skipped.

sudo apt update\nsudo apt install -y nvidia-container\nJetPack 6.0 DP users

If you flash Jetson Linux (L4T) R36.2 (JetPack 6.0 DP) on your Jetson using SDK Manager and install nvidia-container using apt, Docker is no longer installed automatically on JetPack 6.0.

Therefore, you need to run the following to manually install Docker and set it up.

sudo apt update\nsudo apt install -y nvidia-container curl\ncurl https://get.docker.com | sh && sudo systemctl --now enable docker\nsudo nvidia-ctk runtime configure --runtime=docker\n -

Restart the Docker service and add your user to the docker group, so that you don't need to run docker commands with sudo.

sudo systemctl restart docker\nsudo usermod -aG docker $USER\nnewgrp docker\n -

Add the default runtime in /etc/docker/daemon.json:

sudo vi /etc/docker/daemon.json\nInsert the \"default-runtime\": \"nvidia\" line as follows:

{\n\"runtimes\": {\n\"nvidia\": {\n\"path\": \"nvidia-container-runtime\",\n\"runtimeArgs\": []\n}\n},\n\"default-runtime\": \"nvidia\"\n}\n -

Restart Docker

sudo systemctl daemon-reload && sudo systemctl restart docker\n

Now that the SSD is installed and available on your device, you can use the extra storage capacity to hold the storage-demanding Docker directory.

-

Stop the Docker service.

sudo systemctl stop docker\n -

Move the existing Docker folder

sudo du -csh /var/lib/docker/ && \\\nsudo mkdir /ssd/docker && \\\nsudo rsync -axPS /var/lib/docker/ /ssd/docker/ && \\\nsudo du -csh /ssd/docker/ -

Edit /etc/docker/daemon.json:

sudo vi /etc/docker/daemon.json\nInsert the \"data-root\" line as follows:

{\n\"runtimes\": {\n\"nvidia\": {\n\"path\": \"nvidia-container-runtime\",\n\"runtimeArgs\": []\n}\n},\n\"default-runtime\": \"nvidia\",\n\"data-root\": \"/ssd/docker\"\n}\n -

Rename the old Docker data directory

sudo mv /var/lib/docker /var/lib/docker.old\n -

Restart the Docker daemon

sudo systemctl daemon-reload && \\\nsudo systemctl restart docker && \\\nsudo journalctl -u docker\n
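The daemon.json edits in the steps above can also be expressed as a small merge over the existing settings, which avoids dropping keys that are already present in the file. This is only a sketch under stated assumptions: the function name with_docker_settings is hypothetical, and the script prints the result for review instead of writing /etc/docker/daemon.json.

```python
import json


def with_docker_settings(config, data_root='/ssd/docker'):
    # Return a copy of a daemon.json dict with the NVIDIA runtime registered,
    # set as the default runtime, and the data root pointing at the SSD.
    updated = dict(config)
    runtimes = dict(updated.get('runtimes', {}))
    runtimes.setdefault('nvidia', {'path': 'nvidia-container-runtime', 'runtimeArgs': []})
    updated['runtimes'] = runtimes
    updated['default-runtime'] = 'nvidia'
    updated['data-root'] = data_root
    return updated


if __name__ == '__main__':
    # Print the merged configuration; copy it into the file manually if it looks right.
    print(json.dumps(with_docker_settings({}), indent=4))
```

Starting from an empty dict yields the same three keys as the file shown in the steps above; passing in the parsed contents of an existing daemon.json keeps any other settings untouched.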

-

[Terminal 1] First, open a terminal to monitor the disk usage while pulling a Docker image.

watch -n1 df -

[Terminal 2] Next, open a new terminal and start Docker pull.

docker pull nvcr.io/nvidia/l4t-base:r35.2.1\n -

[Terminal 1] Observe that the disk usage on /ssd goes up as the container image is downloaded and extracted.

~$ docker image ls\nREPOSITORY TAG IMAGE ID CREATED SIZE\nnvcr.io/nvidia/l4t-base r35.2.1 dc07eb476a1d 7 months ago 713MB\n

Reboot your Jetson, and verify that you observe the following:

~$ sudo blkid | grep nvme\n/dev/nvme0n1: UUID=\"9fc06de1-7cf3-43e2-928a-53a9c03fc5d8\" TYPE=\"ext4\"\n\n~$ df -h\nFilesystem Size Used Avail Use% Mounted on\n/dev/mmcblk1p1 116G 18G 94G 16% /\nnone 3.5G 0 3.5G 0% /dev\ntmpfs 3.6G 108K 3.6G 1% /dev/shm\ntmpfs 734M 35M 699M 5% /run\ntmpfs 5.0M 4.0K 5.0M 1% /run/lock\ntmpfs 3.6G 0 3.6G 0% /sys/fs/cgroup\ntmpfs 734M 88K 734M 1% /run/user/1000\n/dev/nvme0n1 458G 824M 434G 1% /ssd\n\n~$ docker info | grep Root\n Docker Root Dir: /ssd/docker\n\n~$ sudo ls -l /ssd/docker/\ntotal 44\ndrwx--x--x 4 root root 4096 Mar 22 11:44 buildkit\ndrwx--x--- 2 root root 4096 Mar 22 11:44 containers\ndrwx------ 3 root root 4096 Mar 22 11:44 image\ndrwxr-x--- 3 root root 4096 Mar 22 11:44 network\ndrwx--x--- 13 root root 4096 Mar 22 16:20 overlay2\ndrwx------ 4 root root 4096 Mar 22 11:44 plugins\ndrwx------ 2 root root 4096 Mar 22 16:19 runtimes\ndrwx------ 2 root root 4096 Mar 22 11:44 swarm\ndrwx------ 2 root root 4096 Mar 22 16:20 tmp\ndrwx------ 2 root root 4096 Mar 22 11:44 trust\ndrwx-----x 2 root root 4096 Mar 22 16:19 volumes\n\n~$ sudo du -chs /ssd/docker/\n752M /ssd/docker/\n752M total\n\n~$ docker info | grep -e \"Runtime\" -e \"Root\"\nRuntimes: io.containerd.runtime.v1.linux nvidia runc io.containerd.runc.v2\n Default Runtime: nvidia\n Docker Root Dir: /ssd/docker\nYour Jetson is now set up with the SSD!

"},{"location":"try.html","title":"Try","text":"Jump to NVIDIA Jetson Store.

"},{"location":"tutorial-intro.html","title":"Tutorial - Introduction","text":""},{"location":"tutorial-intro.html#overview","title":"Overview","text":"Our tutorials are divided into categories roughly based on model modality, the type of data to be processed or generated.

"},{"location":"tutorial-intro.html#text-llm","title":"Text (LLM)","text":"- text-generation-webui: Interact with a local AI assistant by running an LLM with oobabooga's text-generation-webui
- llamaspeak: Talk live with Llama using Riva ASR/TTS, and chat about images with Llava!
- Small LLM (SLM): Deploy Small Language Models (SLM) with reduced memory usage and higher throughput.
- API Examples: Learn how to write Python code for doing LLM inference using popular APIs."},{"location":"tutorial-intro.html#text-vision-vlm","title":"Text + Vision (VLM)","text":"Give your locally running LLM access to vision!

- Mini-GPT4: Mini-GPT4, an open-source model that demonstrates vision-language capabilities.
- LLaVA: Large Language and Vision Assistant, a multimodal model that combines a vision encoder and LLM for visual and language understanding.
- Live LLaVA: Run multimodal models interactively on live video streams over a repeating set of prompts.
- NanoVLM: Use mini vision/language models and the optimized multimodal pipeline for live streaming."},{"location":"tutorial-intro.html#image-generation","title":"Image Generation","text":"- Stable Diffusion: Run AUTOMATIC1111's stable-diffusion-webui to generate images from prompts
- Stable Diffusion XL: A newer ensemble pipeline consisting of a base model and refiner that results in significantly enhanced and detailed image generation capabilities."},{"location":"tutorial-intro.html#vision-transformers-vit","title":"Vision Transformers (ViT)","text":"- EfficientViT: MIT Han Lab's EfficientViT, Multi-Scale Linear Attention for High-Resolution Dense Prediction
- NanoOWL: OWL-ViT optimized to run real-time on Jetson with NVIDIA TensorRT
- NanoSAM: NanoSAM, SAM model variant capable of running in real-time on Jetson
- SAM: Meta's SAM, Segment Anything model
- TAM: TAM, Track-Anything model, is an interactive tool for video object tracking and segmentation"},{"location":"tutorial-intro.html#vector-database","title":"Vector Database","text":"- NanoDB: Interactive demo to witness the impact of Vector Database that handles multimodal data"},{"location":"tutorial-intro.html#audio","title":"Audio","text":"- AudioCraft: Meta's AudioCraft, to produce high-quality audio and music
- Whisper: OpenAI's Whisper, pre-trained model for automatic speech recognition (ASR)"},{"location":"tutorial-intro.html#metropolis-microservices","title":"Metropolis Microservices","text":"- First Steps: Get Metropolis Microservices up & running on Jetson with NVStreamer and AI NVR capabilities."},{"location":"tutorial-intro.html#about-nvidia-jetson","title":"About NVIDIA Jetson","text":"Note

We are mainly targeting Jetson Orin generation devices for deploying the latest LLMs and generative AI models.

| | Jetson AGX Orin 64GB Developer Kit | Jetson AGX Orin Developer Kit | Jetson Orin Nano Developer Kit |
|-|-|-|-|
| GPU | 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores | 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores |
| RAM (CPU+GPU) | 64GB | 32GB | 8GB |
| Storage | 64GB eMMC (+ NVMe SSD) | 64GB eMMC (+ NVMe SSD) | microSD card (+ NVMe SSD) |"},{"location":"tutorial_api-examples.html","title":"Tutorial - API Examples","text":"It's good to know the code for generating text with LLM inference, and ancillary things like tokenization, chat templates, and prompting. On this page we give Python examples of running various LLM APIs, and their benchmarks.

What you need

-

One of the following Jetson devices:

Jetson AGX Orin (64GB) Jetson AGX Orin (32GB) Jetson Orin NX (16GB) Jetson Orin Nano (8GB)\u26a0\ufe0f

-

Running one of the following versions of JetPack:

JetPack 5 (L4T r35.x) JetPack 6 (L4T r36.x)

-

Sufficient storage space (preferably with NVMe SSD).

- 22GB for l4t-text-generation container image
- Space for models (>10GB)

The HuggingFace Transformers API is the de-facto API that models are released for, often serving as the reference implementation. It's not terribly fast, but it does have broad model support, and also supports quantization (AutoGPTQ, AWQ). This uses streaming:

from transformers import AutoModelForCausalLM, AutoTokenizer, TextIteratorStreamer\nfrom threading import Thread\n\nmodel_name='meta-llama/Llama-2-7b-chat-hf'\nmodel = AutoModelForCausalLM.from_pretrained(model_name, device_map='cuda')\n\ntokenizer = AutoTokenizer.from_pretrained(model_name)\nstreamer = TextIteratorStreamer(tokenizer)\n\nprompt = [{'role': 'user', 'content': 'Can I get a recipe for French Onion soup?'}]\ninputs = tokenizer.apply_chat_template(\n prompt,\n add_generation_prompt=True,\n return_tensors='pt'\n).to(model.device)\n\nThread(target=lambda: model.generate(inputs, max_new_tokens=256, streamer=streamer)).start()\n\nfor text in streamer:\n print(text, end='', flush=True)\nTo run this (it can be found here), you can mount a directory containing the script or your jetson-containers directory:

./run.sh --volume $PWD/packages/llm:/mount --workdir /mount \\\n$(./autotag l4t-text-generation) \\\npython3 transformers/test.py\nWe use the l4t-text-generation container because it includes the quantization libraries in addition to Transformers, for running the quantized versions of the models like TheBloke/Llama-2-7B-Chat-GPTQ

The huggingface-benchmark.py script will benchmark the models:

./run.sh --volume $PWD/packages/llm/transformers:/mount --workdir /mount \\\n$(./autotag l4t-text-generation) \\\npython3 huggingface-benchmark.py --model meta-llama/Llama-2-7b-chat-hf\n* meta-llama/Llama-2-7b-chat-hf AVG = 20.7077 seconds, 6.2 tokens/sec memory=10173.45 MB\n* TheBloke/Llama-2-7B-Chat-GPTQ AVG = 12.3922 seconds, 10.3 tokens/sec memory=7023.36 MB\n* TheBloke/Llama-2-7B-Chat-AWQ AVG = 11.4667 seconds, 11.2 tokens/sec memory=4662.34 MB\nThe local_llm container uses the optimized MLC/TVM library for inference, like on the Benchmarks page:

from local_llm import LocalLM, ChatHistory, ChatTemplates\nfrom termcolor import cprint\n\n# load model\nmodel = LocalLM.from_pretrained(\n model='meta-llama/Llama-2-7b-chat-hf', \n quant='q4f16_ft', \n api='mlc'\n)\n\n# create the chat history\nchat_history = ChatHistory(model, system_prompt=\"You are a helpful and friendly AI assistant.\")\n\nwhile True:\n # enter the user query from terminal\n print('>> ', end='', flush=True)\n prompt = input().strip()\n\n # add user prompt and generate chat tokens/embeddings\n chat_history.append(role='user', msg=prompt)\n embedding, position = chat_history.embed_chat()\n\n # generate bot reply\n reply = model.generate(\n embedding, \n streaming=True, \n kv_cache=chat_history.kv_cache,\n stop_tokens=chat_history.template.stop,\n max_new_tokens=256,\n )\n\n # append the output stream to the chat history\n bot_reply = chat_history.append(role='bot', text='')\n\n for token in reply:\n bot_reply.text += token\n cprint(token, color='blue', end='', flush=True)\n\n print('\\n')\n\n # save the inter-request KV cache \n chat_history.kv_cache = reply.kv_cache\nThis example keeps an interactive chat running with text being entered from the terminal. You can start it like this:

./run.sh $(./autotag local_llm) \\\n python3 -m local_llm.chat.example\nOr for easy editing from the host device, copy the source into your own script and mount it into the container with the --volume flag.

Let's run Meta's AudioCraft, to produce high-quality audio and music on Jetson!

What you need

-

One of the following Jetson devices:

Jetson AGX Orin (64GB) Jetson AGX Orin (32GB) Jetson Orin Nano (8GB)

-

Running one of the following versions of JetPack:

JetPack 5 (L4T r35.x)

-

Sufficient storage space (preferably with NVMe SSD).

- 10.7 GB for audiocraft container image
- Space for checkpoints

-

Clone and set up jetson-containers:

git clone https://github.com/dusty-nv/jetson-containers\ncd jetson-containers\nsudo apt update; sudo apt install -y python3-pip\npip3 install -r requirements.txt\n

Use the run.sh and autotag scripts to automatically pull or build a compatible container image.

cd jetson-containers\n./run.sh $(./autotag audiocraft)\nThe container has a default run command (CMD) that will automatically start the Jupyter Lab server.

Open your browser and access http://<IP_ADDRESS>:8888.

The default password for Jupyter Lab is nvidia.

AudioCraft repo comes with demo Jupyter notebooks.

In the Jupyter Lab navigation pane on the left, double-click the demos folder.

For \"Text-conditional Generation\", you should get something like this.


Info

You may encounter an error message like the following when executing the first cell, but you can keep going.

A matching Triton is not available, some optimizations will not be enabled.\nError caught was: No module named 'triton'\nWarning

When running the 5th cell of audiogen_demo.ipynb, you may run into a \"Failed to load audio\" RuntimeError.

For \"Text-conditional Generation\", you should get something like this.


Warning

When running the 5th cell of musicgen_demo.ipynb, you may run into a \"Failed to load audio\" RuntimeError.

See \"Jetson Introduction to Knowledge Distillation\" repo's README.md.

https://github.com/NVIDIA-AI-IOT/jetson-intro-to-distillation

"},{"location":"tutorial_live-llava.html","title":"Tutorial - Live LLaVA","text":"Recommended

Follow the chat-based LLaVA and NanoVLM tutorials and see the local_llm documentation to familiarize yourself with VLMs and test the models first.

This multimodal agent runs a vision-language model on a live camera feed or video stream, repeatedly applying the same prompts to it:

It uses models like LLaVA or VILA (based on Llama and CLIP) and has been quantized with 4-bit precision to be deployed on Jetson Orin. This runs an optimized multimodal pipeline from the local_llm package, and acts as a building block for creating event-driven streaming applications that trigger user-promptable alerts and actions with the flexibility of VLMs:

The interactive web UI supports event filters, alerts, and multimodal vector DB integration.

"},{"location":"tutorial_live-llava.html#running-the-live-llava-demo","title":"Running the Live Llava Demo","text":"What you need

-

One of the following Jetson devices:

Jetson AGX Orin (64GB) Jetson AGX Orin (32GB) Jetson Orin NX (16GB) Jetson Orin Nano (8GB)\u26a0\ufe0f

-

Running one of the following versions of JetPack:

JetPack 6 (L4T r36.x)

-

Sufficient storage space (preferably with NVMe SSD).

- 22GB for local_llm container image
- Space for models (>10GB)

-

Follow the chat-based LLaVA and NanoVLM tutorials first and see the local_llm documentation.

-

Supported VLM models in local_llm:

- liuhaotian/llava-v1.5-7b, liuhaotian/llava-v1.5-13b, liuhaotian/llava-v1.6-vicuna-7b, liuhaotian/llava-v1.6-vicuna-13b
- Efficient-Large-Model/VILA-2.7b, Efficient-Large-Model/VILA-7b, Efficient-Large-Model/VILA-13b
- NousResearch/Obsidian-3B-V0.5
- VILA-2.7b, VILA-7b, Llava-7b, and Obsidian-3B can run on Orin Nano 8GB

The VideoQuery agent processes an incoming camera or video feed on prompts in a closed loop with the VLM. Navigate your browser to https://<IP_ADDRESS>:8050 after launching it, proceed past the SSL warning, and see this demo walkthrough video on using the web UI.

./run.sh $(./autotag local_llm) \\\npython3 -m local_llm.agents.video_query --api=mlc \\\n--model Efficient-Large-Model/VILA-2.7b \\\n--max-context-len 768 \\\n--max-new-tokens 32 \\\n--video-input /dev/video0 \\\n--video-output webrtc://@:8554/output\n

This uses jetson_utils for video I/O, and for options related to protocols and file formats, see Camera Streaming and Multimedia. In the example above, it captures a V4L2 USB webcam connected to the Jetson (under the device /dev/video0) and outputs a WebRTC stream.

The example above was running on a live camera, but you can also read and write a video file or network stream by substituting the path or URL to the --video-input and --video-output command-line arguments like this:

./run.sh \\\n-v /path/to/your/videos:/mount \\\n$(./autotag local_llm) \\\npython3 -m local_llm.agents.video_query --api=mlc \\\n--model Efficient-Large-Model/VILA-2.7b \\\n--max-new-tokens 32 \\\n--video-input /mount/my_video.mp4 \\\n--video-output /mount/output.mp4 \\\n--prompt \"What does the weather look like?\"\nThis example processes a pre-recorded video (in MP4, MKV, AVI, FLV formats with H.264/H.265 encoding), but it can also input/output live network streams like RTP, RTSP, and WebRTC using Jetson's hardware-accelerated video codecs.

"},{"location":"tutorial_live-llava.html#nanodb-integration","title":"NanoDB Integration","text":"If you launch the VideoQuery agent with the --nanodb flag along with a path to your NanoDB database, it will perform reverse-image search on the incoming feed against the database by re-using the CLIP embeddings generated by the VLM.

To enable this mode, first follow the NanoDB tutorial to download, index, and test the database. Then launch VideoQuery like this:

./run.sh $(./autotag local_llm) \\\npython3 -m local_llm.agents.video_query --api=mlc \\\n--model Efficient-Large-Model/VILA-2.7b \\\n--max-context-len 768 \\\n--max-new-tokens 32 \\\n--video-input /dev/video0 \\\n--video-output webrtc://@:8554/output \\\n--nanodb /data/nanodb/coco/2017\nYou can also tag incoming images and add them to the database using the panel in the web UI.

"},{"location":"tutorial_llamaspeak.html","title":"Tutorial - llamaspeak","text":"Talk live with Llama using Riva ASR/TTS, and chat about images with Llava!

- The local_llm package provides the optimized LLMs, llamaspeak, and speech integration.
- It's recommended to run JetPack 6.0 to be able to run the latest containers for this.

llamaspeak is an optimized web UI and voice agent that has multimodal support for chatting about images with vision-language models:

Multimodal Voice Chat with LLaVA-1.5 13B on NVIDIA Jetson AGX Orin (container: local_llm)

See the Voice Chat section of the local_llm documentation to run llamaspeak.

LLaVA is a popular multimodal vision/language model that you can run locally on Jetson to answer questions about image prompts and queries. Llava uses the CLIP vision encoder to transform images into the same embedding space as its LLM (which is the same as Llama architecture). Below we cover different methods to run Llava on Jetson, with increasingly optimized performance:

- Chat with Llava using text-generation-webui
- Run from the terminal with llava.serve.cli
- Quantized GGUF models with llama.cpp
- Optimized Multimodal Pipeline with local_llm

| Method | Quantization | Tokens/sec | Memory |
|-|-|-|-|
| text-generation-webui | 4-bit (GPTQ) | 2.3 | 9.7 GB |
| llava.serve.cli | FP16 (None) | 4.2 | 27.7 GB |
| llama.cpp | 4-bit (Q4_K) | 10.1 | 9.2 GB |
| local_llm | 4-bit (MLC) | 21.1 | 8.7 GB |

In addition to Llava, the local_llm pipeline supports VILA and mini vision models that run on Orin Nano as well.

text-generation-webui","text":"What you need

-

One of the following Jetson devices:

Jetson AGX Orin (64GB) Jetson AGX Orin (32GB) Jetson Orin NX (16GB)

-

Running one of the following versions of JetPack:

JetPack 5 (L4T r35.x) JetPack 6 (L4T r36.x)

-

Sufficient storage space (preferably with NVMe SSD).

- 6.2GB for text-generation-webui container image
- Space for models
  - CLIP model: 1.7GB
  - Llava-v1.5-13B-GPTQ model: 7.25GB

-

Clone and set up jetson-containers:

git clone https://github.com/dusty-nv/jetson-containers\ncd jetson-containers\nsudo apt update; sudo apt install -y python3-pip\npip3 install -r requirements.txt\n

./run.sh --workdir=/opt/text-generation-webui $(./autotag text-generation-webui) \\\n python3 download-model.py --output=/data/models/text-generation-webui \\\n TheBloke/llava-v1.5-13B-GPTQ\n./run.sh --workdir=/opt/text-generation-webui $(./autotag text-generation-webui) \\\n python3 server.py --listen \\\n --model-dir /data/models/text-generation-webui \\\n --model TheBloke_llava-v1.5-13B-GPTQ \\\n --multimodal-pipeline llava-v1.5-13b \\\n --loader autogptq \\\n --disable_exllama \\\n --verbose\nGo to the Chat tab, drag and drop an image into the Drop Image Here area, type your question in the text area, and hit Generate:

"},{"location":"tutorial_llava.html#result","title":"Result","text":""},{"location":"tutorial_llava.html#2-run-from-the-terminal-with-llavaservecli","title":"2. Run from the terminal with llava.serve.cli","text":"What you need

-

One of the following Jetson devices:

Jetson AGX Orin 64GB Jetson AGX Orin (32GB)

-

Running one of the following versions of JetPack:

JetPack 5 (L4T r35.x) JetPack 6 (L4T r36.x)

-

Sufficient storage space (preferably with NVMe SSD).

- 6.1GB for llava container
- 14GB for Llava-7B (or 26GB for Llava-13B)

This example uses the upstream Llava repo to run the original, unquantized Llava models from the command-line. It uses more memory due to using FP16 precision, and is provided mostly as a reference for debugging. See the Llava container readme for more info.

"},{"location":"tutorial_llava.html#llava-v15-7b","title":"llava-v1.5-7b","text":"./run.sh $(./autotag llava) \\\n python3 -m llava.serve.cli \\\n --model-path liuhaotian/llava-v1.5-7b \\\n --image-file /data/images/hoover.jpg\n./run.sh $(./autotag llava) \\\npython3 -m llava.serve.cli \\\n--model-path liuhaotian/llava-v1.5-13b \\\n--image-file /data/images/hoover.jpg\nUnquantized 13B may run only on Jetson AGX Orin 64GB due to memory requirements.

"},{"location":"tutorial_llava.html#3-quantized-gguf-models-with-llamacpp","title":"3. Quantized GGUF models with llama.cpp","text":"What you need

-

One of the following Jetson devices:

Jetson AGX Orin (64GB) Jetson AGX Orin (32GB) Jetson Orin NX (16GB)

-

Running one of the following versions of JetPack:

JetPack 5 (L4T r35.x) JetPack 6 (L4T r36.x)

llama.cpp is one of the faster LLM APIs, and can apply a variety of quantization methods to Llava to reduce its memory usage and runtime. Despite its name, it uses CUDA. There are pre-quantized versions of Llava-1.5 available in GGUF format for 4-bit and 5-bit:

- mys/ggml_llava-v1.5-7b

- mys/ggml_llava-v1.5-13b

./run.sh --workdir=/opt/llama.cpp/bin $(./autotag llama_cpp:gguf) \\\n/bin/bash -c './llava-cli \\\n --model $(huggingface-downloader mys/ggml_llava-v1.5-13b/ggml-model-q4_k.gguf) \\\n --mmproj $(huggingface-downloader mys/ggml_llava-v1.5-13b/mmproj-model-f16.gguf) \\\n --n-gpu-layers 999 \\\n --image /data/images/hoover.jpg \\\n --prompt \"What does the sign say\"'\n
| Quantization | Bits | Response | Tokens/sec | Memory |
|-|-|-|-|-|
| Q4_K | 4 | The sign says \"Hoover Dam, Exit 9.\" | 10.17 | 9.2 GB |
| Q5_K | 5 | The sign says \"Hoover Dam exit 9.\" | 9.73 | 10.4 GB |

A lower temperature like 0.1 is recommended for better quality (--temp 0.1), and if you omit --prompt it will describe the image:

./run.sh --workdir=/opt/llama.cpp/bin $(./autotag llama_cpp:gguf) \\\n/bin/bash -c './llava-cli \\\n --model $(huggingface-downloader mys/ggml_llava-v1.5-13b/ggml-model-q4_k.gguf) \\\n --mmproj $(huggingface-downloader mys/ggml_llava-v1.5-13b/mmproj-model-f16.gguf) \\\n --n-gpu-layers 999 \\\n --image /data/images/lake.jpg'\n\nIn this image, a small wooden pier extends out into a calm lake, surrounded by tall trees and mountains. The pier seems to be the only access point to the lake. The serene scene includes a few boats scattered across the water, with one near the pier and the others further away. The overall atmosphere suggests a peaceful and tranquil setting, perfect for relaxation and enjoying nature.\nYou can put your own images in the mounted jetson-containers/data directory. The C++ code for llava-cli can be found here. The llama-cpp-python bindings also support Llava, however they are significantly slower from Python for some reason (potentially pre-processing)

local_llm","text":"What's Next

This section got too long and was moved to the NanoVLM page - check it out there for performance optimizations, mini VLMs, and live streaming!

"},{"location":"tutorial_minigpt4.html","title":"Tutorial - MiniGPT-4","text":"Give your locally running LLM access to vision by running MiniGPT-4 on Jetson!

What you need

-

One of the following Jetson devices:

Jetson AGX Orin (64GB) Jetson AGX Orin (32GB) Jetson Orin NX (16GB) Jetson Orin Nano (8GB)

-

Running one of the following versions of JetPack:

JetPack 5 (L4T r35.x) JetPack 6 (L4T r36.x)

-

Sufficient storage space (preferably with NVMe SSD).

- 5.8GB for container image
- Space for pre-quantized MiniGPT-4 model

-

Clone and set up jetson-containers:

git clone https://github.com/dusty-nv/jetson-containers\ncd jetson-containers\nsudo apt update; sudo apt install -y python3-pip\npip3 install -r requirements.txt\n

minigpt4 container with models","text":"To start the MiniGPT4 container and webserver with the recommended models, run this command:

cd jetson-containers\n./run.sh $(./autotag minigpt4) /bin/bash -c 'cd /opt/minigpt4.cpp/minigpt4 && python3 webui.py \\\n $(huggingface-downloader --type=dataset maknee/minigpt4-13b-ggml/minigpt4-13B-f16.bin) \\\n $(huggingface-downloader --type=dataset maknee/ggml-vicuna-v0-quantized/ggml-vicuna-13B-v0-q5_k.bin)'\nThen, open your web browser and access http://<IP_ADDRESS>:7860.

# First steps with Metropolis Microservices for Jetson

NVIDIA Metropolis Microservices for Jetson simplifies the development of vision AI applications, offering a suite of customizable, cloud-native tools. Before diving into this tutorial, ensure you've filled out the Metropolis Microservices for Jetson Early Access form to gain the necessary access to launch the services. This step is crucial as it enables you to utilize all the features and capabilities discussed in this guide.

Perfect for both newcomers and experts, this tutorial provides straightforward steps to kick-start your edge AI projects. Whether you're a student or an ecosystem partner working on a use case, this guide offers a straightforward start for every skill level.

"},{"location":"tutorial_mmj.html#0-install-nvidia-jetson-services","title":"0. Install NVIDIA Jetson Services:","text":"Ok, let's start by installing NVIDIA Jetson Services:

sudo apt install nvidia-jetson-services\nLet's add some performance hacks that will be needed to run the demo faster and without streaming artifacts:

-

If your Orin is not already running at maximum performance, use these two commands:

sudo nvpmodel -m 0 \nsudo jetson_clocks\n -

A reboot is needed after these two commands if your Jetson wasn't already in high-performance mode. The following settings are optional, but they tune your network buffers for smoother streaming by increasing how much data can be sent and received:

sudo sysctl -w net.core.rmem_default=2129920\nsudo sysctl -w net.core.rmem_max=10000000\nsudo sysctl -w net.core.wmem_max=2000000\n
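Values set with sysctl -w last only until the next reboot. As a minimal sketch (not from the original tutorial), the same three settings could be rendered as sysctl.conf-style lines for a drop-in file under /etc/sysctl.d/; the names STREAMING_BUFFERS and sysctl_conf_lines, and the file name mentioned in the note below, are hypothetical.

```python
# The three buffer sizes used in the commands above.
STREAMING_BUFFERS = {
    'net.core.rmem_default': 2129920,
    'net.core.rmem_max': 10000000,
    'net.core.wmem_max': 2000000,
}


def sysctl_conf_lines(settings):
    # Render each setting as a 'key = value' line, as used in sysctl.conf files.
    return [f'{key} = {value}' for key, value in settings.items()]


if __name__ == '__main__':
    for line in sysctl_conf_lines(STREAMING_BUFFERS):
        print(line)
```

Saving the printed lines to a file such as /etc/sysctl.d/99-streaming.conf and running sudo sysctl --system should apply them now and on later boots; treat this as an optional convenience, since the tutorial itself only sets the values for the current session.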

Download the NGC CLI for ARM64 from the NGC CLI site:

unzip ngccli_arm64.zip\nchmod u+x ngc-cli/ngc\necho \"export PATH=\\\"\\$PATH:$(pwd)/ngc-cli\\\"\" >> ~/.bash_profile && source ~/.bash_profile\nngc config set\nYou should then paste the API key and use the organization name you are using. You can also press [Enter] to select the default values for the remaining options. After this, you should get the message:

Successfully saved NGC configuration to /home/jetson/.ngc/config\nThen, log in with the same API key:

sudo docker login nvcr.io -u \"\\$oauthtoken\" -p <NGC-API-KEY>\nNow launch the Redis and Ingress services, as we need them for this tutorial.

sudo systemctl start jetson-redis\nsudo systemctl start jetson-ingress\nFirst, we need to install NVStreamer, an app that streams the videos that MMJ will run AI on. Follow this NVStreamer link (in the top-left, click Download files.zip):

unzip files.zip\nrm files.zip\ntar -xvf nvstreamer.tar.gz\ncd nvstreamer\nsudo docker compose -f compose_nvstreamer.yaml up -d --force-recreate\nAI NVR (NGC) Link (Top-left -> Download files.zip)

unzip files.zip\nrm files.zip\ntar -xvf ai_nvr.tar.gz\nsudo cp ai_nvr/config/ai-nvr-nginx.conf /opt/nvidia/jetson/services/ingress/config/\ncd ai_nvr\nsudo docker compose -f compose_agx.yaml up -d --force-recreate\nDownload them from here.

unzip files.zip\n- The NVStreamer Streamer Dashboard, running at: http://localhost:31000

- The NVStreamer Camera Management Dashboard, running at: http://localhost:30080/vst

First, we need to upload the file in the Streamer interface, which looks like this:

There, go to File Upload, and drag and drop the file into the square upload area.

After uploading it, go to the Dashboard option in the left menu and copy the RTSP URL of the video you just uploaded; you will need it for the Camera Management Dashboard.

Now jump to the Camera Management Dashboard (http://localhost:30080/vst), which looks like this:

Go to the Camera Management option in the menu, use the Add device manually option, and paste the RTSP URL. Enter the name of your video in the Name and Location text boxes so that it is displayed on top of the stream.

Finally, click the Live Streams option in the left menu, and you should be able to watch your video stream.

"},{"location":"tutorial_mmj.html#5-watch-rtsp-ai-processed-streaming-from-vlc","title":"5. Watch RTSP AI processed streaming from VLC","text":"Open VLC on another computer (localhost doesn't work here) and point it to your Jetson Orin's IP address. The computer must be on the same network as the Jetson, with no firewall blocking access.

The easiest way to get the Jetson's IP address is to run:

ifconfig\nThen go to rtsp://[JETSON_IP]:8555/ds-test using VLC like this:
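As an alternative to reading the address out of the `ifconfig` output by hand, the stream URL can be assembled in the shell. This is a minimal sketch: the helper names `build_rtsp_url` and `first_ip` are hypothetical, and it assumes the first address reported by `hostname -I` is the one reachable from the other computer, which may not hold on a Jetson with several network interfaces.

```shell
#!/usr/bin/env bash
# Build the RTSP URL used in this tutorial for a given Jetson IP address.
# build_rtsp_url and first_ip are hypothetical helper names for this example.
build_rtsp_url() {
  local ip="$1"
  printf 'rtsp://%s:8555/ds-test\n' "$ip"
}

# First address reported by `hostname -I` (Linux); prints nothing if unavailable.
first_ip() {
  hostname -I 2>/dev/null | awk '{print $1}'
}

# Example with a placeholder address (192.0.2.10 is reserved for documentation).
build_rtsp_url "192.0.2.10"

# On the Jetson itself you could instead run:
# build_rtsp_url "$(first_ip)"
```

Paste the printed URL into VLC's network stream dialog on the other computer.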

"},{"location":"tutorial_mmj.html#6-android-app","title":"6. Android app","text":"There is an Android app that allows you to track events and create areas of interest to monitor, you can find it on Google Play as AI NVR.

Here is a quick walkthrough where you can see how to:

- Add the IP address of the Jetson

- Track current events

- Add new areas of interest

- Add tripwire to track the flux and direction of events

We saw in the previous LLaVA tutorial how to run vision-language models through tools like text-generation-webui and llama.cpp. In a similar vein to the SLM page, here we'll explore optimizing VLMs for reduced memory usage and higher performance that reaches interactive levels (like in Live Llava). These optimizations help the models fit on Orin Nano and increase the framerate.

There are 3 model families currently supported: Llava, VILA, and Obsidian (mini VLM).

"},{"location":"tutorial_nano-vlm.html#vlm-benchmarks","title":"VLM Benchmarks","text":"The FPS figures measure the end-to-end pipeline performance for continuous streaming like with Live Llava (on a yes/no question).

- These models all use CLIP ViT-L/14@336px for the vision encoder.

- Jetson Orin Nano 8GB runs out of memory trying to run Llava-13B.

What you need

- One of the following Jetson devices: Jetson AGX Orin (64GB), Jetson AGX Orin (32GB), Jetson Orin NX (16GB), Jetson Orin Nano (8GB) ⚠️

- Running one of the following versions of JetPack: JetPack 6 (L4T r36.x)

- Sufficient storage space (preferably with NVMe SSD):

  - 22GB for the local_llm container image

  - Space for models (>10GB)

- Supported VLM models in local_llm:

  - liuhaotian/llava-v1.5-7b, liuhaotian/llava-v1.5-13b, liuhaotian/llava-v1.6-vicuna-7b, liuhaotian/llava-v1.6-vicuna-13b

  - Efficient-Large-Model/VILA-2.7b, Efficient-Large-Model/VILA-7b, Efficient-Large-Model/VILA-13b

  - NousResearch/Obsidian-3B-V0.5

  - VILA-2.7b, VILA-7b, Llava-7b, and Obsidian-3B can run on Orin Nano 8GB

The optimized local_llm container using MLC/TVM for quantization and inference provides the highest performance. It efficiently manages the CLIP embeddings and KV cache. You can find the Python code for the chat program used in this example here.

./run.sh $(./autotag local_llm) \\\npython3 -m local_llm --api=mlc \\\n--model liuhaotian/llava-v1.6-vicuna-7b \\\n--max-context-len 768 \\\n--max-new-tokens 128\nThis starts an interactive console-based chat with Llava, and on the first run the model will automatically be downloaded from HuggingFace and quantized using MLC and W4A16 precision (which can take some time). See here for command-line options.

You'll end up at a >> PROMPT: prompt, at which you can enter the path or URL of an image file, followed by your question about the image. You can follow up with multiple questions about the same image. Llava does not understand multiple images in the same chat, so when changing images, first reset the chat history by entering clear or reset as the prompt. VILA supports multiple images (an area of active research).

During testing, you can specify prompts on the command-line that will run sequentially:

./run.sh $(./autotag local_llm) \\\n python3 -m local_llm --api=mlc \\\n --model liuhaotian/llava-v1.6-vicuna-7b \\\n --max-context-len 768 \\\n --max-new-tokens 128 \\\n --prompt '/data/images/hoover.jpg' \\\n --prompt 'what does the road sign say?' \\\n --prompt 'what kind of environment is it?' \\\n --prompt 'reset' \\\n --prompt '/data/images/lake.jpg' \\\n --prompt 'please describe the scene.' \\\n --prompt 'are there any hazards to be aware of?'\nYou can also use --prompt /data/prompts/images.json to run the test sequence, the results of which are in the table below.

- The model responses are with 4-bit quantization enabled, and are truncated to 128 tokens for brevity.

- These chat questions and images are from /data/prompts/images.json (found in jetson-containers).

When prompted, these models can also output in constrained JSON formats (which the LLaVA authors cover in their LLaVA-1.5 paper), and can be used to programmatically query information about the image:

./run.sh $(./autotag local_llm) \\\n python3 -m local_llm --api=mlc \\\n --model liuhaotian/llava-v1.5-13b \\\n --prompt '/data/images/hoover.jpg' \\\n --prompt 'extract any text from the image as json'\n\n{\n \"sign\": \"Hoover Dam\",\n \"exit\": \"2\",\n \"distance\": \"1 1/2 mile\"\n}\nTo use local_llm with a web UI instead, see the Voice Chat section of the documentation:

"},{"location":"tutorial_nano-vlm.html#live-streaming","title":"Live Streaming","text":"These models can also be used with the Live Llava agent for continuous streaming - just substitute the desired model name below:

./run.sh $(./autotag local_llm) \\\npython3 -m local_llm.agents.video_query --api=mlc \\\n--model Efficient-Large-Model/VILA-2.7b \\\n--max-context-len 768 \\\n--max-new-tokens 32 \\\n--video-input /dev/video0 \\\n--video-output webrtc://@:8554/output\nLet's run NanoDB's interactive demo to see the impact of a vector database that handles multimodal data.

What you need

- One of the following Jetson devices: Jetson AGX Orin (64GB), Jetson AGX Orin (32GB), Jetson Orin NX (16GB), Jetson Orin Nano (8GB)

- Running one of the following versions of JetPack: JetPack 5 (L4T r35.x), JetPack 6 (L4T r36.x)

- Sufficient storage space (preferably with NVMe SSD):

  - 16GB for container image

  - 40GB for MS COCO dataset

- Clone and set up jetson-containers:

git clone https://github.com/dusty-nv/jetson-containers\ncd jetson-containers\nsudo apt update; sudo apt install -y python3-pip\npip3 install -r requirements.txt\n

As an example, let's use the MS COCO dataset:

cd jetson-containers\nmkdir -p data/datasets/coco/2017\ncd data/datasets/coco/2017\n\nwget http://images.cocodataset.org/zips/train2017.zip\nwget http://images.cocodataset.org/zips/val2017.zip\nwget http://images.cocodataset.org/zips/unlabeled2017.zip\n\nunzip train2017.zip\nunzip val2017.zip\nunzip unlabeled2017.zip\nYou can download a pre-indexed NanoDB that was already prepared over the COCO dataset from here:

cd jetson-containers/data\nwget https://nvidia.box.com/shared/static/icw8qhgioyj4qsk832r4nj2p9olsxoci.gz -O nanodb_coco_2017.tar.gz\ntar -xzvf nanodb_coco_2017.tar.gz\nThis allows you to skip the indexing process in the next step, and jump to starting the Web UI.

"},{"location":"tutorial_nanodb.html#indexing-data","title":"Indexing Data","text":"If you didn't download the NanoDB index for COCO from above, we need to build the index by scanning your dataset directory:

./run.sh $(./autotag nanodb) \\\n python3 -m nanodb \\\n --scan /data/datasets/coco/2017 \\\n --path /data/nanodb/coco/2017 \\\n --autosave --validate \nThis will take a few hours on AGX Orin. Once the database has loaded and completed any start-up operations, it will drop down to a > prompt from which you can run search queries. You can quickly verify that it works by typing a query at this prompt:

> a girl riding a horse\n\n* index=80110 /data/datasets/coco/2017/train2017/000000393735.jpg similarity=0.29991915822029114\n* index=158747 /data/datasets/coco/2017/unlabeled2017/000000189708.jpg similarity=0.29254037141799927\n* index=123846 /data/datasets/coco/2017/unlabeled2017/000000026239.jpg similarity=0.292171448469162\n* index=127338 /data/datasets/coco/2017/unlabeled2017/000000042508.jpg similarity=0.29118549823760986\n* index=77416 /data/datasets/coco/2017/train2017/000000380634.jpg similarity=0.28964102268218994\n* index=51992 /data/datasets/coco/2017/train2017/000000256290.jpg similarity=0.28929752111434937\n* index=228640 /data/datasets/coco/2017/unlabeled2017/000000520381.jpg similarity=0.28642547130584717\n* index=104819 /data/datasets/coco/2017/train2017/000000515895.jpg similarity=0.285491943359375\nYou can press Ctrl+C to exit. For more info about the various options available, see the NanoDB container documentation.

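If you capture the search output to a file or pipe, the result lines can be reduced to a similarity and path per row. This is a minimal sketch that assumes the output keeps the `* index=N PATH similarity=S` layout shown in the session above and that paths contain no spaces. The helper name `parse_nanodb_results` is hypothetical and not part of NanoDB.

```shell
#!/usr/bin/env bash
# Extract "similarity<TAB>path" from NanoDB result lines read on stdin.
# Lines that do not match the "* index=N PATH similarity=S" layout are skipped.
# parse_nanodb_results is a hypothetical helper name for this example.
parse_nanodb_results() {
  awk '$1 == "*" && $2 ~ /^index=/ && $4 ~ /^similarity=/ {
    sub(/^similarity=/, "", $4)      # keep only the numeric part
    printf "%s\t%s\n", $4, $3        # similarity first, then the image path
  }'
}

# Example using the query line and two result lines copied from the session above.
parse_nanodb_results <<'EOF'
> a girl riding a horse

* index=80110 /data/datasets/coco/2017/train2017/000000393735.jpg similarity=0.29991915822029114
* index=158747 /data/datasets/coco/2017/unlabeled2017/000000189708.jpg similarity=0.29254037141799927
EOF
```

Putting the similarity in the first column makes the rows easy to sort or threshold with standard tools.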
"},{"location":"tutorial_nanodb.html#interactive-web-ui","title":"Interactive Web UI","text":"Spin up the Gradio server:

./run.sh $(./autotag nanodb) \\\n python3 -m nanodb \\\n --path /data/nanodb/coco/2017 \\\n --server --port=7860\nThen navigate your browser to http://<IP_ADDRESS>:7860, and you can enter text search queries as well as drag/upload images:

To use the dark theme, navigate to http://<IP_ADDRESS>:7860/?__theme=dark instead"},{"location":"tutorial_slm.html","title":"Tutorial - Small Language Models (SLM)","text":"

Small Language Models (SLMs) represent a growing class of language models that have <7B parameters - for example StableLM, Phi-2, and Gemma-2B. Their smaller memory footprint and faster performance make them good candidates for deploying on Jetson Orin Nano. Some are very capable with abilities at a similar level as the larger models, having been trained on high-quality curated datasets.

This tutorial shows how to run optimized SLMs with quantization using the local_llm container and MLC/TVM backend. You can run these models through tools like text-generation-webui and llama.cpp as well, just not as fast - and since the focus of SLMs is reduced computational and memory requirements, here we'll use the most optimized path available. Those shown below have been profiled.