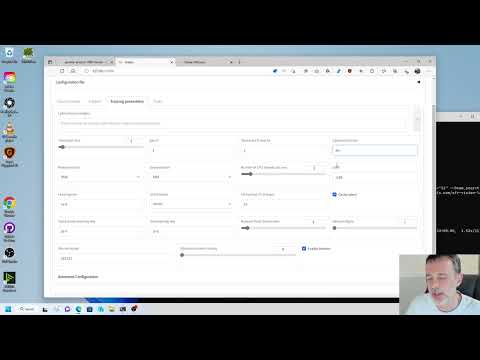

This repository provides a Windows-focused Gradio GUI for Kohya's Stable Diffusion trainers. The GUI allows you to set the training parameters and generate and run the required CLI commands to train the model.

If you run Linux and would like to use the GUI, there is now a port of it as a Docker container. You can find the project here.

- Tutorials

- Required Dependencies

- Installation

- Upgrading

- Launching the GUI

- Dreambooth

- Finetune

- Train Network

- LoRA

- Troubleshooting

- Change History

How to Create a LoRA Part 1: Dataset Preparation:

How to Create a LoRA Part 2: Training the Model:

- Install Python 3.10

- Make sure to tick the box to add Python to the PATH environment variable

- Install Git

- Install the Microsoft Visual C++ Redistributable for Visual Studio 2015, 2017, 2019, and 2022

Give unrestricted script access to PowerShell so venv can work:

- Run PowerShell as an administrator

- Run the following command and answer 'A':

Set-ExecutionPolicy Unrestricted

- Close PowerShell

Open a regular user PowerShell terminal and run the following commands:

git clone https://github.com/bmaltais/kohya_ss.git

cd kohya_ss

python -m venv venv

.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

pip install --use-pep517 --upgrade -r requirements.txt

pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\

cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py

cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config

The following CUDNN 8.6 step is optional but can improve the learning speed for NVIDIA 30X0/40X0 owners. It allows for a larger training batch size and faster training speed.

Due to the file size, I can't host the DLLs needed for CUDNN 8.6 on GitHub. I strongly advise you to download them for a speed boost in sample generation (almost 50% on a 4090 GPU). You can download them here.

To install, simply unzip the directory and place the cudnn_windows folder in the root of this repo.

Run the following commands to install:

.\venv\Scripts\activate

python .\tools\cudann_1.8_install.py

When a new release comes out, you can upgrade your repo with the following commands in the root directory:

git pull

.\venv\Scripts\activate

pip install --use-pep517 --upgrade -r requirements.txt

Once the commands have completed successfully, you should be ready to use the new version.

To run the GUI, simply use this command:

.\gui.ps1

or you can also do:

.\venv\Scripts\activate

python.exe .\kohya_gui.py

You can find the Dreambooth-specific documentation here: Dreambooth README

You can find the finetune-specific documentation here: Finetune README

You can find the train network-specific documentation here: Train network README

Training a LoRA currently uses the train_network.py code. You can create a LoRA network by using the all-in-one gui.cmd or by running the dedicated LoRA training GUI with:

.\venv\Scripts\activate

python lora_gui.py

Once you have created the LoRA network, you can generate images via AUTOMATIC1111's web UI by installing this extension.

- X error relating to page file: Increase the page file size limit in Windows.

- Re-install Python 3.10 on your system.

This is usually related to an installation issue. Make sure you do not have any Python modules installed locally that could conflict with the ones installed in the venv:

- Open a new PowerShell terminal and make sure no venv is active.

- Run the following commands:

pip freeze > uninstall.txt

pip uninstall -r uninstall.txt

This will store a backup file listing your currently installed local pip packages and then uninstall them. Then, redo the installation instructions within the kohya_ss venv.

- 2023/02/23 (v20.8.1):

- Fix a training instability issue in train_network.py.

  - fp16 training is probably not affected by this issue.

  - Training with float for SD2.x models will work now. Also, training with bf16 might be improved.

  - This issue seems to have occurred in PR#190.

- Add some metadata to LoRA model. Thanks to space-nuko!

- Raise an error if optimizer options conflict (e.g. --optimizer_type and --use_8bit_adam.)

- Support ControlNet in gen_img_diffusers.py (no documentation yet.)

- 2023/02/22 (v20.8.0):

- Add GUI support for optimizers: AdamW, AdamW8bit, Lion, SGDNesterov, SGDNesterov8bit, DAdaptation, AdaFactor

- Add GUI support for --noise_offset

- Refactor optimizer options. Thanks to mgz-dev!

  - Add --optimizer_type option for each training script. Please see help. Japanese documentation is here.

  - --use_8bit_adam and --use_lion_optimizer options also work and will override the options above for backward compatibility.

- Add SGDNesterov and its 8bit variant.

- Add D-Adaptation optimizer. Thanks to BootsofLagrangian and all!

  - Please install the D-Adaptation optimizer with pip install dadaptation (it is not in requirements.txt currently.)

  - Please see kohya-ss/sd-scripts#181 for details.

- Add AdaFactor optimizer. Thanks to Toshiaki!

- Extra lr scheduler settings (num_cycles etc.) are working in training scripts other than train_network.py.

- Add --max_grad_norm option for each training script for gradient clipping. 0.0 disables clipping.

- Symbolic links can be loaded in each training script. Thanks to TkskKurumi!
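The --max_grad_norm entry refers to gradient clipping. Here is a minimal sketch of the usual global-norm technique, which is the same idea as PyTorch's torch.nn.utils.clip_grad_norm_. Whether the training scripts use exactly this variant is an assumption. clip_grad_norm is a hypothetical helper that works on a flat list of gradient values:

```python
import math
from typing import List


def clip_grad_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale gradients so their global L2 norm is at most max_norm.

    A max_norm of 0.0 disables clipping, mirroring the option's documented behavior.
    """
    if max_norm == 0.0:
        return list(grads)  # clipping disabled
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already within the bound, leave untouched
    scale = max_norm / total_norm  # shrink all components by the same factor
    return [g * scale for g in grads]


print(clip_grad_norm([3.0, 4.0], 1.0))  # norm 5.0 is rescaled to norm 1.0
```

Clipping rescales every component by the same factor, so the update direction is preserved and only its length is capped.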

- 2023/02/19 (v20.7.4):

- Add --use_lion_optimizer to each training script to use the Lion optimizer.

  - Please install the Lion optimizer with pip install lion-pytorch (it is not in requirements.txt currently.)

- Add --lowram option to train_network.py. Load models to VRAM instead of RAM (for machines which have more VRAM than RAM, such as Colab and Kaggle). Thanks to Isotr0py!

  - Default behavior (without lowram) has reverted to the same as before 14 Feb.

- Fixed git commit hash to be set correctly regardless of the working directory. Thanks to vladmandic!
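For reference, the Lion optimizer enabled by --use_lion_optimizer moves each weight by the sign of an interpolated momentum. As a result, every coordinate moves by exactly lr per step, plus weight decay. The following is a minimal scalar sketch of that published update rule. It assumes the default betas of 0.9/0.99 used by the lion-pytorch package. lion_step is a hypothetical helper, not that package's API:

```python
import math
from typing import List, Tuple


def sign(x: float) -> float:
    """Return 1.0, -1.0 or 0.0."""
    return math.copysign(1.0, x) if x != 0.0 else 0.0


def lion_step(params: List[float], grads: List[float], momenta: List[float],
              lr: float = 1e-4, beta1: float = 0.9, beta2: float = 0.99,
              weight_decay: float = 0.0) -> Tuple[List[float], List[float]]:
    """One Lion update over flat lists of scalars; returns (new_params, new_momenta)."""
    new_params, new_momenta = [], []
    for p, g, m in zip(params, grads, momenta):
        p = p * (1.0 - lr * weight_decay)             # decoupled weight decay
        update = sign(beta1 * m + (1.0 - beta1) * g)  # interpolate, then keep only the sign
        p = p - lr * update                           # fixed-size step per coordinate
        m = beta2 * m + (1.0 - beta2) * g             # momentum is tracked with beta2
        new_params.append(p)
        new_momenta.append(m)
    return new_params, new_momenta
```

Because the step size does not shrink for small gradients, Lion is usually run with a smaller learning rate than AdamW.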

- 2023/02/15 (v20.7.3):

- Update upgrade.ps1 script

- Integrate new kohya sd-script

- Noise offset is recorded to the metadata. Thanks to space-nuko!

- Show the moving average loss to prevent loss jumping in train_network.py and train_db.py. Thanks to shirayu!

- Add support for multi-GPU training for train_network.py. Thanks to Isotr0py!

- Add --verbose option for resize_lora.py. For details, see this PR. Thanks to mgz-dev!

- Git commit hash is added to the metadata for LoRA. Thanks to space-nuko!

- Add --noise_offset option for each training script.

  - Implementation of https://www.crosslabs.org//blog/diffusion-with-offset-noise

  - This option may improve the ability to generate darker/lighter images. May work with LoRA.
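The offset noise idea from the linked post has two parts. It starts with ordinary per-pixel Gaussian noise. It then adds one random constant per sample and channel, scaled by --noise_offset. Below is a pure-Python sketch of that idea over a nested [batch][channel][height][width] list. It assumes the scripts follow the blog's formulation. offset_noise is a hypothetical helper, and the real scripts operate on PyTorch tensors rather than lists:

```python
import random
from typing import List

Tensor4D = List[List[List[List[float]]]]  # [batch][channel][height][width]


def offset_noise(batch: int, channels: int, height: int, width: int,
                 noise_offset: float, rng: random.Random) -> Tensor4D:
    """Per-pixel N(0, 1) noise plus noise_offset * N(0, 1) shared per (sample, channel)."""
    noise: Tensor4D = []
    for _ in range(batch):
        sample = []
        for _ in range(channels):
            # One shift for the whole channel: it moves the channel's mean,
            # which independent per-pixel noise barely does on a large latent.
            shift = noise_offset * rng.gauss(0.0, 1.0)
            sample.append([[rng.gauss(0.0, 1.0) + shift for _ in range(width)]
                           for _ in range(height)])
        noise.append(sample)
    return noise


latent_noise = offset_noise(1, 4, 8, 8, 0.1, random.Random(0))
```

The shared per-channel shift lets the model learn to change an image's overall brightness. That is plausibly why the option may help with very dark or very light images.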

- 2023/02/11 (v20.7.2):

- lora_interrogator.py is added in the networks folder. See python networks\lora_interrogator.py -h for usage.

  - For LoRAs where the activation word is unknown, this script compares the output of the Text Encoder with the LoRA applied to its output without it, to find out which tokens are affected by the LoRA. Hopefully you can figure out the activation word. It does not seem to work for LoRAs trained with captions.

  - Batch size can be large (like 64 or 128).

- train_textual_inversion.py now supports multiple init words.

- The following feature is reverted to the same behavior as before. Sorry for the confusion:

  - Now the number of data in each batch is limited to the number of actual images (not duplicated). Because a certain bucket may contain a smaller number of actual images, the batch may contain the same (duplicated) images.

- Add new tool to sort, group and average crop images in a dataset

- 2023/02/09 (v20.7.1):

- Caption dropout is supported in train_db.py, fine_tune.py and train_network.py. Thanks to forestsource!

  - --caption_dropout_rate option specifies the dropout rate for captions (0~1.0, 0.1 means 10% chance for dropout). If dropout occurs, the image is trained with the empty caption. Default is 0 (no dropout).

  - --caption_dropout_every_n_epochs option specifies how often (in epochs) to drop captions. If 3 is specified, in epochs 3, 6, 9 ..., images are trained with all captions empty. Default is None (no dropout).

  - --caption_tag_dropout_rate option specifies the dropout rate for tags (comma separated tokens) (0~1.0, 0.1 means 10% chance for dropout). If dropout occurs, the tag is removed from the caption. If the --keep_tokens option is set, these tokens (tags) are not dropped. Default is 0 (no dropout).

- The bulk image downsampling script is added. Documentation is here (in Japanese). Thanks to bmaltais!

- Typo check is added. Thanks to shirayu!

- Add option to autolaunch the GUI in a browser and set the server_port. Use either gui.ps1 --inbrowser --server_port 3456 or gui.cmd -inbrowser -server_port 3456
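The three caption dropout options can combine for a single caption roughly as shown below. apply_caption_dropout is a hypothetical helper. Two details are assumptions rather than documented behavior of the scripts: the order in which the checks are applied, and counting epochs from 1.

```python
import random
from typing import Optional


def apply_caption_dropout(caption: str, epoch: int, rng: random.Random,
                          caption_dropout_rate: float = 0.0,
                          caption_dropout_every_n_epochs: Optional[int] = None,
                          caption_tag_dropout_rate: float = 0.0,
                          keep_tokens: int = 0) -> str:
    """Return the caption to train on for one image in one epoch (hypothetical helper)."""
    # Every n-th epoch (3, 6, 9, ... for n=3), all captions are emptied.
    if caption_dropout_every_n_epochs and epoch % caption_dropout_every_n_epochs == 0:
        return ""
    # Otherwise the whole caption is dropped with probability caption_dropout_rate.
    if caption_dropout_rate > 0.0 and rng.random() < caption_dropout_rate:
        return ""
    # Per-tag dropout on comma-separated tags; the first keep_tokens tags are never dropped.
    tags = [t.strip() for t in caption.split(",")]
    kept = tags[:keep_tokens] + [
        t for t in tags[keep_tokens:] if rng.random() >= caption_tag_dropout_rate
    ]
    return ", ".join(kept)


rng = random.Random(0)
print(apply_caption_dropout("sks dog, grass, sky", 1, rng,
                            caption_tag_dropout_rate=0.1, keep_tokens=1))
```

With keep_tokens=1, the leading "sks dog" tag always survives, so the trigger word is never lost to tag dropout.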

- 2023/02/06 (v20.7.0):

- --bucket_reso_steps and --bucket_no_upscale options are added to training scripts (fine tuning, DreamBooth, LoRA and Textual Inversion) and prepare_buckets_latents.py.

- --bucket_reso_steps takes the steps for buckets in aspect ratio bucketing. Default is 64, same as before.

  - Any value greater than or equal to 1 can be specified; 64 is highly recommended, and a value divisible by 8 is recommended.

  - If less than 64 is specified, padding will occur within U-Net. The result is unknown.

  - If you specify a value that is not divisible by 8, it will be truncated to a multiple of 8 inside the VAE, because the size of the latent is 1/8 of the image size.

- If the --bucket_no_upscale option is specified, images smaller than the bucket size will be processed without upscaling.

  - Internally, a bucket smaller than the image size is created (for example, if the image is 300x300 and bucket_reso_steps=64, the bucket is 256x256). The image will be trimmed.

  - Implementation of #130.

  - Images with an area larger than the maximum size specified by --resolution are downsampled to the max bucket size.

- Now the number of data in each batch is limited to the number of actual images (not duplicated). Because a certain bucket may contain a smaller number of actual images, the batch may contain the same (duplicated) images.

- --random_crop now also works with buckets enabled.

  - Instead of always cropping the center of the image, the image is shifted left, right, up, and down to be used as the training data. This is expected to train to the edges of the image.

  - Implementation of discussion #34.
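The 300x300 example can be reproduced with a rough sketch of how a --bucket_no_upscale bucket might be chosen. First, an over-sized image is scaled down to the maximum area. Then each side is rounded down to a multiple of bucket_reso_steps. no_upscale_bucket is a hypothetical helper, and the real implementation may round differently. The sketch also assumes each side of the image is at least bucket_reso_steps pixels.

```python
import math
from typing import Tuple


def no_upscale_bucket(width: int, height: int, max_area: int,
                      reso_steps: int = 64) -> Tuple[int, int]:
    """Bucket (width, height) for an image when upscaling is disabled (hypothetical)."""
    if width * height > max_area:
        # Too large: shrink while keeping the aspect ratio so the area fits max_area.
        scale = math.sqrt(max_area / (width * height))
        width, height = int(width * scale), int(height * scale)
    # Never upscale: round each side down to a multiple of reso_steps.
    # The image is then trimmed to this bucket size.
    bucket_w = width // reso_steps * reso_steps
    bucket_h = height // reso_steps * reso_steps
    return bucket_w, bucket_h


print(no_upscale_bucket(300, 300, 512 * 512))  # bucket for a 300x300 image
```

Rounding down rather than up is what guarantees the image is only ever trimmed, never enlarged.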

- 2023/02/04 (v20.6.1):

- Add new LoRA resize GUI

- --persistent_data_loader_workers option is added to fine_tune.py, train_db.py and train_network.py. This option may significantly reduce the waiting time between epochs. Thanks to hitomi!

- --debug_dataset option is now working on non-Windows environments. Thanks to tsukimiya!

- networks/resize_lora.py script is added. This can approximate a higher-rank (dim) LoRA model by a lower-rank LoRA model, e.g. 128 to 4. Thanks to mgz-dev!

  - --help option shows usage.

  - Currently the metadata is not copied. This will be fixed in the near future.

- 2023/02/03 (v20.6.0):

- Increase max LoRA rank (dim) size to 1024.

- Update finetune preprocessing scripts.

  - .bmp and .jpeg are supported. Thanks to breakcore2 and p1atdev!

  - The default weights of tag_images_by_wd14_tagger.py are now SmilingWolf/wd-v1-4-convnext-tagger-v2. You can specify another model id from SmilingWolf with the --repo_id option. Thanks to SmilingWolf for the great work.

    - To change the weights, remove the wd14_tagger_model folder and run the script again.

  - --max_data_loader_n_workers option is added to each script. This option uses the DataLoader for data loading to speed up loading, 20%~30% faster.

    - Please specify 2 or 4, depending on the number of CPU cores.

  - --recursive option is added to merge_dd_tags_to_metadata.py and merge_captions_to_metadata.py, only works with --full_path.

  - make_captions_by_git.py is added. It uses GIT microsoft/git-large-textcaps for captioning.

    - requirements.txt is updated. If you use this script, please update the libraries.

    - Usage is almost the same as make_captions.py, but batch size should be smaller.

    - --remove_words option removes as much text as possible (such as the word "XXXX" on it).

  - --skip_existing option is added to prepare_buckets_latents.py. Images with existing npz files are ignored by this option.

  - clean_captions_and_tags.py is updated to remove duplicated or conflicting tags, e.g. shirt is removed when white shirt exists. If black hair is with red hair, both are removed.

- Tag frequency is added to the metadata in train_network.py. Thanks to space-nuko!

  - All tags and the number of occurrences of each tag are recorded. If you do not want it, disable metadata storing with the --no_metadata option.
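The tag cleanup rules in the clean_captions_and_tags.py entry can be sketched as follows. The rules are: remove duplicates, drop a generic tag when a more specific one ends with it, and drop all hair colors when they conflict. clean_tags is hypothetical, and the suffix rule is a generalization made here for illustration. The actual script may use its own hand-written list of rules.

```python
from typing import List, Set


def clean_tags(tags: List[str], hair_colors: Set[str]) -> List[str]:
    """Remove duplicate, redundant and conflicting tags (hypothetical rules)."""
    # Drop exact duplicates, keeping the first occurrence and the original order.
    unique: List[str] = []
    for tag in tags:
        if tag not in unique:
            unique.append(tag)
    # A generic tag is redundant when a more specific tag ends with it,
    # e.g. "shirt" is covered by "white shirt".
    result = [t for t in unique
              if not any(other != t and other.endswith(" " + t) for other in unique)]
    # Conflicting hair colors: if more than one is present, drop all of them.
    if sum(t in hair_colors for t in result) > 1:
        result = [t for t in result if t not in hair_colors]
    return result


colors = {"black hair", "red hair", "blonde hair"}
print(clean_tags(["1girl", "shirt", "white shirt", "black hair", "red hair"], colors))
```

Dropping both conflicting colors, rather than guessing which is correct, avoids training on a tag that contradicts the image.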

- 2023/01/30 (v20.5.2):

- Add --lr_scheduler_num_cycles and --lr_scheduler_power options for train_network.py for cosine_with_restarts and polynomial learning rate schedulers. Thanks to mgz-dev!

- Fixed U-Net sample_size parameter to 64 when converting from SD to Diffusers format, in convert_diffusers20_original_sd.py
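The cosine_with_restarts schedule controlled by --lr_scheduler_num_cycles is commonly defined as a cosine decay that restarts num_cycles times over training. The hard-restart scheduler helpers in diffusers and transformers use this definition. The sketch below computes that multiplier. It assumes train_network.py passes --lr_scheduler_num_cycles through to such a helper unchanged.

```python
import math


def cosine_with_restarts_factor(step: int, total_steps: int, num_cycles: int = 1,
                                warmup_steps: int = 0) -> float:
    """Learning-rate multiplier for a cosine schedule with hard restarts."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)  # linear warmup from 0 to 1
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    if progress >= 1.0:
        return 0.0
    # Each of the num_cycles cycles decays from 1 toward 0, then jumps back to 1.
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * ((num_cycles * progress) % 1.0))))


base_lr = 1e-4
lr_at_250 = base_lr * cosine_with_restarts_factor(250, 1000, num_cycles=2)
```

With num_cycles=2 and 1000 steps, the factor falls from 1.0 toward 0.0 over steps 0 to 499. It then restarts at 1.0 at step 500.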

- 2023/01/27 (v20.5.1):

- 2023/01/26 (v20.5.0):

- Add new Dreambooth TI tab for training of Textual Inversion embeddings

- Add Textual Inversion training. Documentation is here (in Japanese.)

- 2023/01/22 (v20.4.1):

- Add new tool to verify LoRA weights produced by the trainer. Can be found under "Dreambooth LoRA/Tools/Verify LoRA"

- 2023/01/22 (v20.4.0):

- Add support for network_alpha under the Training tab and support for --training_comment under the Folders tab.

- Add --network_alpha option to specify the alpha value to prevent underflows for stable training. Thanks to CCRcmcpe!

  - Details of the issue are described here.

  - The default value is 1, which scales by 1 / rank (or dimension). Set the same value as network_dim for the same behavior as the old version.

  - LoRA with a large dimension (rank) seems to require a higher learning rate with alpha=1 (e.g. 1e-3 for 128-dim, still investigating).

- For generating images in the Web UI, the latest version of the extension sd-webui-additional-networks (v0.3.0 or later) is required for models trained with this release or later.

- Add logging for the learning rate for U-Net and Text Encoder independently, and for running average epoch loss. Thanks to mgz-dev!

- Add more metadata such as dataset/reg image dirs, session ID, output name etc... See this pull request for details. Thanks to space-nuko!

  - Now the metadata includes the folder name (the basename of the folder containing image files, not the full path). If you do not want it, disable metadata storing with the --no_metadata option.

- Add --training_comment option. You can specify an arbitrary string and refer to it by the extension.
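The alpha scaling can be illustrated with a small LoRA forward pass. The low-rank update up(down(x)) is multiplied by network_alpha / rank before being added to the frozen layer's output. Below is a pure-Python sketch. lora_linear and matvec are hypothetical helpers, and the actual network code uses PyTorch modules.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_linear(x: Vector, weight: Matrix, lora_down: Matrix, lora_up: Matrix,
                network_alpha: float) -> Vector:
    """y = W x + (alpha / rank) * up(down(x)); rank is the number of rows of lora_down."""
    rank = len(lora_down)
    scale = network_alpha / rank
    base = matvec(weight, x)                        # frozen original layer
    delta = matvec(lora_up, matvec(lora_down, x))   # low-rank trainable update
    return [b + scale * d for b, d in zip(base, delta)]


identity = [[1.0, 0.0], [0.0, 1.0]]
print(lora_linear([1.0, 2.0], identity, identity, identity, network_alpha=1.0))
```

With rank 2, alpha=1 halves the update (scale 0.5). Setting alpha=2, equal to the rank, gives scale 1.0, which matches the pre-alpha behavior. With rank 128 and alpha=1, the scale is 1/128. That is consistent with the observation that large-rank LoRAs seem to need a higher learning rate at alpha=1.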

It seems that the Stable Diffusion web UI now supports image generation using LoRA models trained with this repository.

Note: At this time, it appears that models trained with version 0.4.0 are not supported. If you want to use the generation function of the web UI, please continue to use version 0.3.2. Also, it seems that LoRA models for SD2.x are not supported.

- 2023/01/16 (v20.3.0):

- Fix an issue where some LoRA modules were not trained when gradient_checkpointing is enabled.

- Add --save_last_n_epochs_state option. You can specify how many state folders to keep, apart from how many models to keep. Thanks to shirayu!

- Fix Text Encoder training stopping at max_train_steps even if max_train_epochs is set in train_db.py.

- Added script to check LoRA weights. You can check weights with python networks\check_lora_weights.py <model file>. If some modules are not trained, the value is 0.0, as in the example below. lora_te_text_model_encoder_layers_11_* is not trained with clip_skip=2, so 0.0 is okay for these modules.

- Example result of check_lora_weights.py, where the Text Encoder and a part of the U-Net are not trained:

number of LoRA-up modules: 264

lora_te_text_model_encoder_layers_0_mlp_fc1.lora_up.weight,0.0

lora_te_text_model_encoder_layers_0_mlp_fc2.lora_up.weight,0.0

lora_te_text_model_encoder_layers_0_self_attn_k_proj.lora_up.weight,0.0

:

lora_unet_down_blocks_2_attentions_1_transformer_blocks_0_ff_net_0_proj.lora_up.weight,0.0

lora_unet_down_blocks_2_attentions_1_transformer_blocks_0_ff_net_2.lora_up.weight,0.0

lora_unet_mid_block_attentions_0_proj_in.lora_up.weight,0.003503334941342473

lora_unet_mid_block_attentions_0_proj_out.lora_up.weight,0.004308608360588551

:

- Example result where all modules are trained:

number of LoRA-up modules: 264

lora_te_text_model_encoder_layers_0_mlp_fc1.lora_up.weight,0.0028684409335255623

lora_te_text_model_encoder_layers_0_mlp_fc2.lora_up.weight,0.0029794853180646896

lora_te_text_model_encoder_layers_0_self_attn_k_proj.lora_up.weight,0.002507600700482726

lora_te_text_model_encoder_layers_0_self_attn_out_proj.lora_up.weight,0.002639499492943287

:
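A long check_lora_weights.py report can be scanned for untrained modules by parsing the name,value lines. The helper below is a hypothetical convenience. It assumes the report has been saved to text first, for example with python networks\check_lora_weights.py <model file> > weights.txt. It relies only on the output format shown in the examples. The optional ignore prefixes let you skip modules that are expected to be 0.0, such as layers_11 with clip_skip=2.

```python
from typing import Iterable, List


def untrained_modules(report: str, ignore: Iterable[str] = ()) -> List[str]:
    """Module names whose reported value is exactly 0.0, minus names matching `ignore` prefixes."""
    prefixes = tuple(ignore)
    names = []
    for line in report.splitlines():
        name, sep, value = line.rpartition(",")
        if not sep:
            continue  # header or elision lines such as "number of LoRA-up modules: 264" or ":"
        try:
            is_zero = float(value) == 0.0
        except ValueError:
            continue  # not a "name,value" line
        if is_zero and not name.startswith(prefixes):
            names.append(name)
    return names


# with open("weights.txt") as f:
#     print(untrained_modules(f.read(), ignore=("lora_te_text_model_encoder_layers_11_",)))
```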

- 2023/01/16 (v20.2.1):

- Merging the latest code update from kohya

- Added --max_train_epochs and --max_data_loader_n_workers options for each training script.

  - If you specify the number of training epochs with --max_train_epochs, the number of steps is calculated from the number of epochs automatically.

  - You can set the number of workers for DataLoader with --max_data_loader_n_workers; the default is 8. A lower number may reduce main memory usage and the time between epochs, but may cause slower data loading (training).

- Fix a failure when loading some VAEs or .safetensors files as the VAE with the --vae option. Thanks to Fannovel16!

- Add negative prompt scaling for gen_img_diffusers.py. You can set another conditioning scale for the negative prompt with the --negative_scale option, and the --nl option for the prompt. Thanks to laksjdjf!

- Refactoring of GUI code and fixing mismatch... and possibly introducing bugs...
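A rough estimate of the step count derived from --max_train_epochs follows. It assumes one optimizer step per batch, divided by the gradient accumulation steps, with a partial last batch counted as a full one. steps_from_epochs is a hypothetical helper. With aspect-ratio bucketing, each bucket may be batched separately, so the real number of batches per epoch can be somewhat higher.

```python
import math


def steps_from_epochs(max_train_epochs: int, samples_per_epoch: int,
                      batch_size: int, gradient_accumulation_steps: int = 1) -> int:
    """Approximate total optimizer steps for a given number of epochs (hypothetical helper)."""
    batches_per_epoch = math.ceil(samples_per_epoch / batch_size)  # last batch may be partial
    steps_per_epoch = math.ceil(batches_per_epoch / gradient_accumulation_steps)
    return max_train_epochs * steps_per_epoch


print(steps_from_epochs(10, 100, 4))  # 10 epochs of 25 batches each
```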

- 2023/01/11 (v20.2.0):

- Add support for max token length

- 2023/01/10 (v20.1.1):

- Fix issue with LoRA config loading

- 2023/01/10 (v20.1):

- Add support for --output_name to trainers

- Refactor code for easier maintenance

- 2023/01/10 (v20.0):

- Update code base to match latest kohya_ss code upgrade

- 2023/01/09 (v19.4.3):

- Add VAE support to Dreambooth GUI

- Add gradient_checkpointing, gradient_accumulation_steps, mem_eff_attn, shuffle_caption to finetune GUI

- Add gradient_accumulation_steps, mem_eff_attn to Dreambooth LoRA GUI

- 2023/01/08 (v19.4.2):

- Add find/replace option to Basic Caption utility

- Add resume training and save_state option to finetune UI

- 2023/01/06 (v19.4.1):

- Emergency fix for new version of gradio causing issues with drop down menus. Please run pip install -U -r requirements.txt to fix the issue after pulling this repo.

- 2023/01/06 (v19.4):

- Add new Utility to Extract a LoRA from a finetuned model

- 2023/01/06 (v19.3.1):

- Emergency fix for dreambooth_ui no longer working, sorry

- Add LoRA network merge to GUI. Run pip install -U -r requirements.txt after pulling this new release.

- 2023/01/05 (v19.3):

- Add support for --clip_skip option

- Add missing detect_face_rotate.py to tools folder

- Add gui.cmd for easy start of GUI

- 2023/01/02 (v19.2) update:

- Finetune, add xformers, 8bit adam, min bucket, max bucket, batch size and flip augmentation support for dataset preparation

- Finetune, add "Dataset preparation" tab to group task specific options

- 2023/01/01 (v19.2) update:

- add support for color and flip augmentation to "Dreambooth LoRA"

- 2023/01/01 (v19.1) update:

- merge kohya_ss upstream code updates

- rework Dreambooth LoRA GUI

- fix bug where LoRA network weights were not loaded to properly resume training

- 2022/12/30 (v19) update:

- support for LoRA network training in kohya_gui.py.

- 2022/12/23 (v18.8) update:

- Fix for conversion tool issue when the source was an sd1.x diffuser model

- Other minor code and GUI fixes

- 2022/12/22 (v18.7) update:

- Merge Dreambooth and finetune into a common GUI

- General bug fixes and code improvements

- 2022/12/21 (v18.6.1) update:

- fix issue with dataset balancing when the number of detected images in the folder is 0

- 2022/12/21 (v18.6) update:

- Add optional GUI authentication support via:

python fine_tune.py --username=<name> --password=<password>