Metadata correction for 2024.lrec-main.143 #4490

Labels

approved

Used to note team approval of metadata requests

correction

for corrections submitted to the anthology

metadata

Correction to metadata

JSON data block

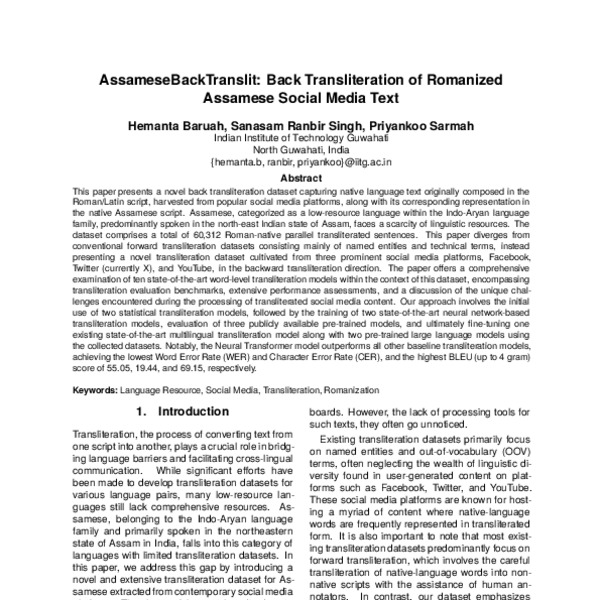

{ "anthology_id": "2024.lrec-main.143", "abstract": "This paper presents a novel back transliteration dataset capturing native language text originally composed in the Roman/Latin script, harvested from popular social media platforms, along with its corresponding representation in the native Assamese script. Assamese, categorized as a low-resource language within the Indo-Aryan language family, predominantly spoken in the north-east Indian state of Assam, faces a scarcity of linguistic resources. The dataset comprises a total of 60,312 Roman-native parallel transliterated sentences. This paper diverges from conventional forward transliteration datasets consisting mainly of named entities and technical terms, instead presenting a novel transliteration dataset cultivated from three prominent social media platforms, Facebook, Twitter(currently X), and YouTube, in the backward transliteration direction. The paper offers a comprehensive examination of ten state-of-the-art word-level transliteration models within the context of this dataset, encompassing transliteration evaluation benchmarks, extensive performance assessments, and a discussion of the unique challenges encountered during the processing of transliterated social media content. Our approach involves the initial use of two statistical transliteration models, followed by the training of two state-of-the-art neural network-based transliteration models, evaluation of three publicly available pre-trained models, and ultimately fine-tuning one existing state-of-the-art multilingual transliteration model along with two pre-trained large language models using the collected datasets. Notably, the Neural Transformer model outperforms all other baseline transliteration models, achieving the lowest Word Error Rate (WER) and Character Error Rate (CER), and the highest BLEU (up to 4 gram) score of 55.05, 19.44, and 69.15, respectively." }