+

+#### Dataset Configuration

+

+It is mainly about two parts.

+

+- The location of the dataset(s), including images and annotation files.

+

+- Data augmentation related configurations. In the OCR domain, data augmentation is usually strongly associated with the model.

+

+More parameter configurations can be found in [Data Base Class](#TODO).

+

+The naming convention for dataset fields in MMOCR is

+

+```Python

+{dataset}_{task}_{train/val/test} = dict(...)

+```

+

+- dataset: See [dataset abbreviations](#TODO)

+

+- task: `det`(text detection), `rec`(text recognition), `kie`(key information extraction)

+

+- train/val/test: Dataset split.

+

+For example, for text recognition tasks, Syn90k is used as the training set, while icdar2013 and icdar2015 serve as the test sets. These are configured as follows.

+

+```Python

+# text recognition dataset configuration

+mj_rec_train = dict(

+ type='OCRDataset',

+ data_root='data/rec/Syn90k/',

+ data_prefix=dict(img_path='mnt/ramdisk/max/90kDICT32px'),

+ ann_file='train_labels.json',

+ test_mode=False,

+ pipeline=None)

+

+ic13_rec_test = dict(

+ type='OCRDataset',

+ data_root='data/rec/icdar_2013/',

+ data_prefix=dict(img_path='Challenge2_Test_Task3_Images/'),

+ ann_file='test_labels.json',

+ test_mode=True,

+ pipeline=None)

+

+ic15_rec_test = dict(

+ type='OCRDataset',

+ data_root='data/rec/icdar_2015/',

+ data_prefix=dict(img_path='ch4_test_word_images_gt/'),

+ ann_file='test_labels.json',

+ test_mode=True,

+ pipeline=None)

+```

+

+

+

+#### Data Pipeline Configuration

+

+In MMOCR, dataset construction and data preparation are decoupled from each other. In other words, dataset classes such as `OCRDataset` are responsible for reading and parsing annotation files, while Data Transforms further implement data loading, data augmentation, data formatting and other related functions.

+

+In general, there are different augmentation strategies for training and testing, so there are usually `training_pipeline` and `testing_pipeline`. More information can be found in [Data Transforms](../basic_concepts/transforms.md)

+

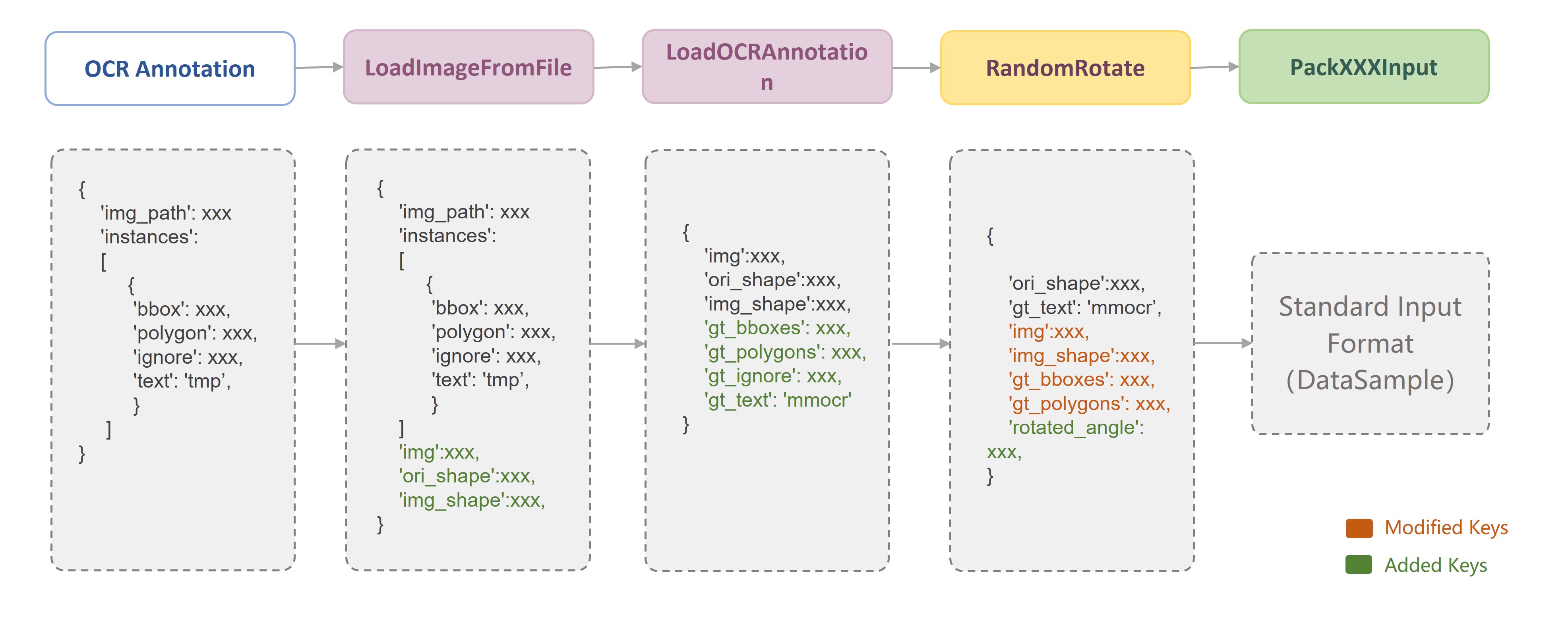

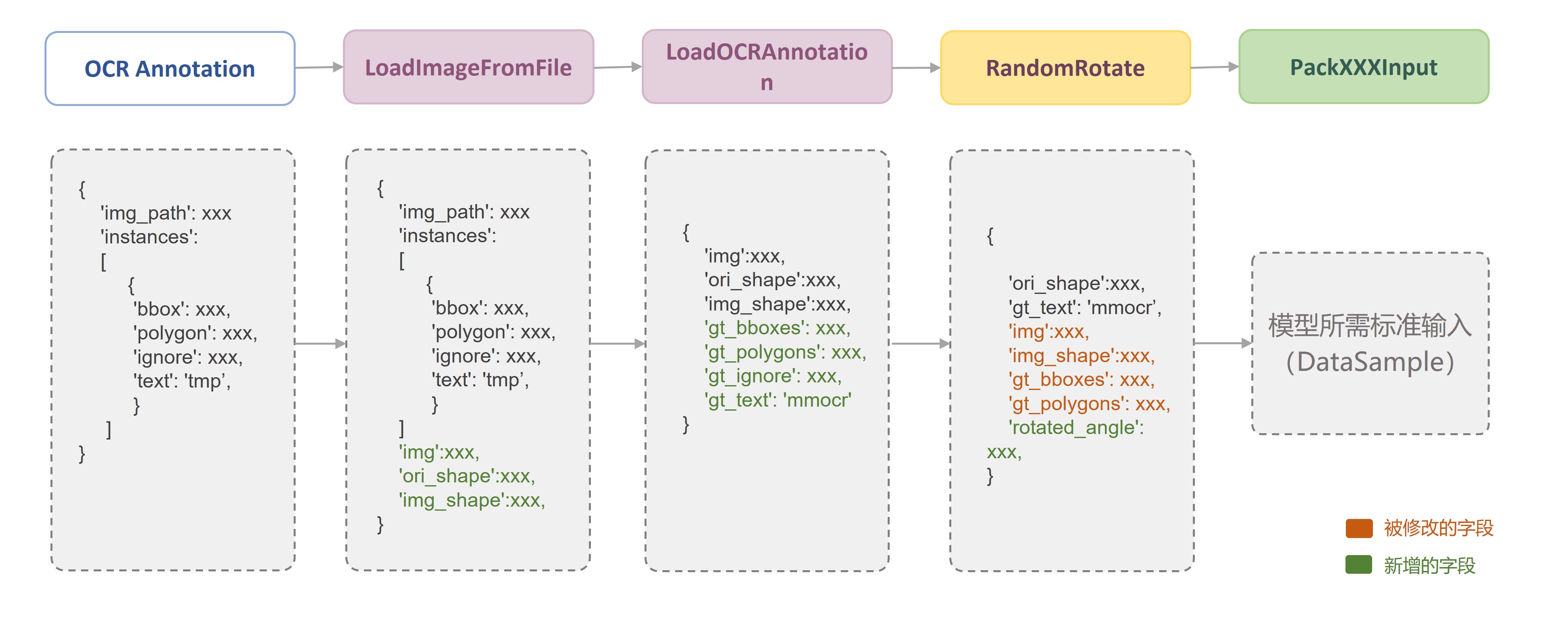

+- The data augmentation process of the training pipeline is usually: data loading (LoadImageFromFile) -> annotation information loading (LoadXXXAnntation) -> data augmentation -> data formatting (PackXXXInputs).

+

+- The data augmentation flow of the test pipeline is usually: Data Loading (LoadImageFromFile) -> Data Augmentation -> Annotation Loading (LoadXXXAnntation) -> Data Formatting (PackXXXInputs).

+

+Due to the specificity of the OCR task, different models have different data augmentation techniques, and even the same model can have different data augmentation strategies for different datasets. Take `CRNN` as an example.

+

+```Python

+# Data Augmentation

+file_client_args = dict(backend='disk')

+train_pipeline = [

+ dict(

+ type='LoadImageFromFile',

+ color_type='grayscale',

+ file_client_args=dict(backend='disk'),

+ ignore_empty=True,

+ min_size=5),

+ dict(type='LoadOCRAnnotations', with_text=True),

+ dict(type='Resize', scale=(100, 32), keep_ratio=False),

+ dict(

+ type='PackTextRecogInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape', 'valid_ratio'))

+]

+test_pipeline = [

+ dict(

+ type='LoadImageFromFile',

+ color_type='grayscale',

+ file_client_args=dict(backend='disk')),

+ dict(

+ type='RescaleToHeight',

+ height=32,

+ min_width=32,

+ max_width=None,

+ width_divisor=16),

+ dict(type='LoadOCRAnnotations', with_text=True),

+ dict(

+ type='PackTextRecogInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape', 'valid_ratio'))

+]

+```

+

+#### Dataloader Configuration

+

+The main configuration information needed to construct the dataset loader (dataloader), see {external+torch:doc}`PyTorch DataLoader

` for more tutorials.

+

+```Python

+# Dataloader

+train_dataloader = dict(

+ batch_size=64,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ dataset=dict(

+ type='ConcatDataset',

+ datasets=[mj_rec_train],

+ pipeline=train_pipeline))

+val_dataloader = dict(

+ batch_size=1,

+ num_workers=4,

+ persistent_workers=True,

+ drop_last=False,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=dict(

+ type='ConcatDataset',

+ datasets=[ic13_rec_test, ic15_rec_test],

+ pipeline=test_pipeline))

+test_dataloader = val_dataloader

+```

+

+### Model-related Configuration

+

+

+

+#### Network Configuration

+

+This section configures the network architecture. Different algorithmic tasks use different network architectures. Find more info about network architecture in [structures](../basic_concepts/structures.md)

+

+##### Text Detection

+

+Text detection consists of several parts:

+

+- `data_preprocessor`: [data_preprocessor](mmocr.models.textdet.data_preprocessors.TextDetDataPreprocessor)

+- `backbone`: backbone network configuration

+- `neck`: neck network configuration

+- `det_head`: detection head network configuration

+ - `module_loss`: module loss configuration

+ - `postprocessor`: postprocessor configuration

+

+We present the model configuration in text detection using DBNet as an example.

+

+```Python

+model = dict(

+ type='DBNet',

+ data_preprocessor=dict(

+ type='TextDetDataPreprocessor',

+ mean=[123.675, 116.28, 103.53],

+ std=[58.395, 57.12, 57.375],

+ bgr_to_rgb=True,

+ pad_size_divisor=32)

+ backbone=dict(

+ type='mmdet.ResNet',

+ depth=18,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=-1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet18'),

+ norm_eval=False,

+ style='caffe'),

+ neck=dict(

+ type='FPNC', in_channels=[64, 128, 256, 512], lateral_channels=256),

+ det_head=dict(

+ type='DBHead',

+ in_channels=256,

+ module_loss=dict(type='DBModuleLoss'),

+ postprocessor=dict(type='DBPostprocessor', text_repr_type='quad')))

+```

+

+##### Text Recognition

+

+Text recognition mainly contains:

+

+- `data_processor`: [data preprocessor configuration](mmocr.models.textrecog.data_processors.TextRecDataPreprocessor)

+- `preprocessor`: network preprocessor configuration, e.g. TPS

+- `backbone`: backbone configuration

+- `encoder`: encoder configuration

+- `decoder`: decoder configuration

+ - `module_loss`: decoder module loss configuration

+ - `postprocessor`: decoder postprocessor configuration

+ - `dictionary`: dictionary configuration

+

+Using CRNN as an example.

+

+```Python

+# model

+model = dict(

+ type='CRNN',

+ data_preprocessor=dict(

+ type='TextRecogDataPreprocessor', mean=[127], std=[127])

+ preprocessor=None,

+ backbone=dict(type='VeryDeepVgg', leaky_relu=False, input_channels=1),

+ encoder=None,

+ decoder=dict(

+ type='CRNNDecoder',

+ in_channels=512,

+ rnn_flag=True,

+ module_loss=dict(type='CTCModuleLoss', letter_case='lower'),

+ postprocessor=dict(type='CTCPostProcessor'),

+ dictionary=dict(

+ type='Dictionary',

+ dict_file='dicts/lower_english_digits.txt',

+ with_padding=True)))

+```

+

+

+

+#### Checkpoint Loading Configuration

+

+The model weights in the checkpoint file can be loaded via the `load_from` parameter, simply by setting the `load_from` parameter to the path of the checkpoint file.

+

+You can also resume training by setting `resume=True` to load the training status information in the checkpoint. When both `load_from` and `resume=True` are set, MMEngine will load the training state from the checkpoint file at the `load_from` path.

+

+If only `resume=True` is set, the executor will try to find and read the latest checkpoint file from the `work_dir` folder

+

+```Python

+load_from = None # Path to load checkpoint

+resume = False # whether resume

+```

+

+More can be found in {external+mmengine:doc}`MMEngine: Load Weights or Recover Training ` and [OCR Advanced Tips - Resume Training from Checkpoints](train_test.md#resume-training-from-a-checkpoint).

+

+

+

+### Evaluation Configuration

+

+In model validation and model testing, quantitative measurement of model accuracy is often required. MMOCR performs this function by means of `Metric` and `Evaluator`. For more information, please refer to {external+mmengine:doc}`MMEngine: Evaluation

` and [Evaluation](../basic_concepts/evaluation.md)

+

+#### Evaluator

+

+Evaluator is mainly used to manage multiple datasets and multiple `Metrics`. For single and multiple dataset cases, there are single and multiple dataset evaluators, both of which can manage multiple `Metrics`.

+

+The single-dataset evaluator is configured as follows.

+

+```Python

+# Single Dataset Single Metric

+val_evaluator = dict(

+ type='Evaluator',

+ metrics=dict())

+

+# Single Dataset Multiple Metric

+val_evaluator = dict(

+ type='Evaluator',

+ metrics=[...])

+```

+

+`MultiDatasetsEvaluator` differs from single-dataset evaluation in two aspects: `type` and `dataset_prefixes`. The evaluator type must be `MultiDatasetsEvaluator` and cannot be omitted. The `dataset_prefixes` is mainly used to distinguish the results of different datasets with the same evaluation metrics, see [MultiDatasetsEvaluation](../basic_concepts/evaluation.md).

+

+Assuming that we need to test accuracy on IC13 and IC15 datasets, the configuration is as follows.

+

+```Python

+# Multiple datasets, single Metric

+val_evaluator = dict(

+ type='MultiDatasetsEvaluator',

+ metrics=dict(),

+ dataset_prefixes=['IC13', 'IC15'])

+

+# Multiple datasets, multiple Metrics

+val_evaluator = dict(

+ type='MultiDatasetsEvaluator',

+ metrics=[...],

+ dataset_prefixes=['IC13', 'IC15'])

+```

+

+#### Metric

+

+A metric evaluates a model's performance from a specific perspective. While there is no such common metric that fits all the tasks, MMOCR provides enough flexibility such that multiple metrics serving the same task can be used simultaneously. Here we list task-specific metrics for reference.

+

+Text detection: [`HmeanIOUMetric`](mmocr.evaluation.metrics.HmeanIOUMetric)

+

+Text recognition: [`WordMetric`](mmocr.evaluation.metrics.WordMetric), [`CharMetric`](mmocr.evaluation.metrics.CharMetric), [`OneMinusNEDMetric`](mmocr.evaluation.metrics.OneMinusNEDMetric)

+

+Key information extraction: [`F1Metric`](mmocr.evaluation.metrics.F1Metric)

+

+Text detection as an example, using a single `Metric` in the case of single dataset evaluation.

+

+```Python

+val_evaluator = dict(type='HmeanIOUMetric')

+```

+

+Take text recognition as an example, multiple datasets (`IC13` and `IC15`) are evaluated using multiple `Metric`s (`WordMetric` and `CharMetric`).

+

+```Python

+val_evaluator = dict(

+ type='MultiDatasetsEvaluator',

+ metrics=[

+ dict(

+ type='WordMetric',

+ mode=['exact', 'ignore_case', 'ignore_case_symbol']),

+ dict(type='CharMetric')

+ ],

+ dataset_prefixes=['IC13', 'IC15'])

+test_evaluator = val_evaluator

+```

+

+

+

+### Visualizaiton Configuration

+

+Each task is bound to a task-specific visualizer. The visualizer is mainly used for visualizing or storing intermediate results of user models and visualizing val and test prediction results. The visualization results can also be stored in different backends such as WandB, TensorBoard, etc. through the corresponding visualization backend. Commonly used modification operations can be found in [visualization](visualization.md).

+

+The default configuration of visualization for text detection is as follows.

+

+```Python

+vis_backends = [dict(type='LocalVisBackend')]

+visualizer = dict(

+ type='TextDetLocalVisualizer', # Different visualizers for different tasks

+ vis_backends=vis_backends,

+ name='visualizer')

+```

+

+## Directory Structure

+

+All configuration files of `MMOCR` are placed under the `configs` folder. To avoid config files from being too long and improve their reusability and clarity, MMOCR takes advantage of the inheritance mechanism and split config files into eight sections. Since each section is closely related to the task type, MMOCR provides a task folder for each task in `configs/`, namely `textdet` (text detection task), `textrecog` (text recognition task), and `kie` (key information extraction). Each folder is further divided into two parts: `_base_` folder and algorithm configuration folders.

+

+1. the `_base_` folder stores some general config files unrelated to specific algorithms, and each section is divided into datasets, training strategies and runtime configurations by directory.

+

+2. The algorithm configuration folder stores config files that are strongly related to the algorithm. The algorithm configuration folder has two kinds of config files.

+

+ 1. Config files starting with `_base_`: Configures the model and data pipeline of an algorithm. In OCR domain, data augmentation strategies are generally strongly related to the algorithm, so the model and data pipeline are usually placed in the same config file.

+

+ 2. Other config files, i.e. the algorithm-specific configurations on the specific dataset(s): These are the full config files that further configure training and testing settings, aggregating `_base_` configurations that are scattered in different locations. Inside some modifications to the fields in `_base_` configs may be performed, such as data pipeline, training strategy, etc.

+

+All these config files are distributed in different folders according to their contents as follows:

+

+

+

+

+

+The final directory structure is as follows.

+

+```Python

+configs

+├── textdet

+│ ├── _base_

+│ │ ├── datasets

+│ │ │ ├── icdar2015.py

+│ │ │ ├── icdar2017.py

+│ │ │ └── totaltext.py

+│ │ ├── schedules

+│ │ │ └── schedule_adam_600e.py

+│ │ └── default_runtime.py

+│ └── dbnet

+│ ├── _base_dbnet_resnet18_fpnc.py

+│ └── dbnet_resnet18_fpnc_1200e_icdar2015.py

+├── textrecog

+│ ├── _base_

+│ │ ├── datasets

+│ │ │ ├── icdar2015.py

+│ │ │ ├── icdar2017.py

+│ │ │ └── totaltext.py

+│ │ ├── schedules

+│ │ │ └── schedule_adam_base.py

+│ │ └── default_runtime.py

+│ └── crnn

+│ ├── _base_crnn_mini-vgg.py

+│ └── crnn_mini-vgg_5e_mj.py

+└── kie

+ ├── _base_

+ │ ├──datasets

+ │ └── default_runtime.py

+ └── sgdmr

+ └── sdmgr_novisual_60e_wildreceipt_openset.py

+```

+

+## Naming Conventions

+

+MMOCR has a convention to name config files, and contributors to the code base need to follow the same naming rules. The file names are divided into four sections: algorithm information, module information, training information, and data information. Words that logically belong to different sections are connected by an underscore `'_'`, and multiple words in the same section are connected by a hyphen `'-'`.

+

+```Python

+{{algorithm info}}_{{module info}}_{{training info}}_{{data info}}.py

+```

+

+- algorithm info: the name of the algorithm, such as dbnet, crnn, etc.

+

+- module info: list some intermediate modules in the order of data flow. Its content depends on the algorithm, and some modules strongly related to the model will be omitted to avoid an overly long name. For example:

+

+ - For the text detection task and the key information extraction task :

+

+ ```Python

+ {{algorithm info}}_{{backbone}}_{{neck}}_{{head}}_{{training info}}_{{data info}}.py

+ ```

+

+ `{head}` is usually omitted since it's algorithm-specific.

+

+ - For text recognition tasks.

+

+ ```Python

+ {{algorithm info}}_{{backbone}}_{{encoder}}_{{decoder}}_{{training info}}_{{data info}}.py

+ ```

+

+ Since encoder and decoder are generally bound to the algorithm, they are usually omitted.

+

+- training info: some settings of the training strategy, including batch size, schedule, etc.

+

+- data info: dataset name, modality, input size, etc., such as icdar2015 and synthtext.

diff --git a/docs/zh_cn/user_guides/config.md b/docs/zh_cn/user_guides/config.md

index 8c4f63256..fcec3fa67 100644

--- a/docs/zh_cn/user_guides/config.md

+++ b/docs/zh_cn/user_guides/config.md

@@ -5,7 +5,7 @@ MMOCR 主要使用 Python 文件作为配置文件。其配置文件系统的设

## 常见用法

```{note}

-本小节建议结合 [配置(Config)](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/config.md) 中的初级用法共同阅读。

+本小节建议结合 {external+mmengine:doc}`MMEngine: 配置(Config) ` 中的初级用法共同阅读。

```

MMOCR 最常用的操作为三种:配置文件的继承,对 `_base_` 变量的引用以及对 `_base_` 变量的修改。对于 `_base_` 的继承与修改, MMEngine.Config 提供了两种语法,一种是针对 Python,Json, Yaml 均可使用的操作;另一种则仅适用于 Python 配置文件。在 MMOCR 中,我们**更推荐使用只针对Python的语法**,因此下文将以此为基础作进一步介绍。

@@ -144,7 +144,7 @@ train_dataloader = dict(

python tools/train.py example.py --cfg-options optim_wrapper.optimizer.lr=1

```

-更多详细用法参考[命令行修改配置](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/config.md#%E5%91%BD%E4%BB%A4%E8%A1%8C%E4%BF%AE%E6%94%B9%E9%85%8D%E7%BD%AE)

+更多详细用法参考 {external+mmengine:ref}`MMEngine: 命令行修改配置 <命令行修改配置>`.

## 配置内容

@@ -162,16 +162,16 @@ env_cfg = dict(

cudnn_benchmark=True,

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

dist_cfg=dict(backend='nccl'))

-random_cfg = dict(seed=None)

+randomness = dict(seed=None)

```

主要包含三个部分:

-- 设置所有注册器的默认 `scope` 为 `mmocr`, 保证所有的模块首先从 `MMOCR` 代码库中进行搜索。若果该模块不存在,则继续从上游算法库 `MMEngine` 和 `MMCV` 中进行搜索(详见[注册器](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/registry.md)。

+- 设置所有注册器的默认 `scope` 为 `mmocr`, 保证所有的模块首先从 `MMOCR` 代码库中进行搜索。若果该模块不存在,则继续从上游算法库 `MMEngine` 和 `MMCV` 中进行搜索,详见 {external+mmengine:doc}`MMEngine: 注册器 `。

-- `env_cfg` 设置分布式环境配置, 更多配置可以详见 [MMEngine Runner](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/runner.md)

+- `env_cfg` 设置分布式环境配置, 更多配置可以详见 {external+mmengine:doc}`MMEngine: Runner `。

-- `random_cfg` 设置 numpy, torch,cudnn 等随机种子,更多配置详见 [Runner](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/runner.md)

+- `randomness` 设置 numpy, torch,cudnn 等随机种子,更多配置详见 {external+mmengine:doc}`MMEngine: Runner `。

@@ -183,11 +183,11 @@ Hook 主要分为两个部分,默认 hook 以及自定义 hook。默认 hook

default_hooks = dict(

timer=dict(type='IterTimerHook'), # 时间记录,包括数据增强时间以及模型推理时间

logger=dict(type='LoggerHook', interval=1), # 日志打印间隔

- param_scheduler=dict(type='ParamSchedulerHook'), # 与param_scheduler 更新学习率等超参

+ param_scheduler=dict(type='ParamSchedulerHook'), # 更新学习率等超参

checkpoint=dict(type='CheckpointHook', interval=1),# 保存 checkpoint, interval控制保存间隔

sampler_seed=dict(type='DistSamplerSeedHook'), # 多机情况下设置种子

- sync_buffer=dict(type='SyncBuffersHook'), # 同步多卡情况下,buffer

- visualization=dict( # 用户可视化val 和 test 的结果

+ sync_buffer=dict(type='SyncBuffersHook'), # 多卡情况下,同步buffer

+ visualization=dict( # 可视化val 和 test 的结果

type='VisualizationHook',

interval=1,

enable=False,

@@ -203,9 +203,9 @@ default_hooks = dict(

- `CheckpointHook`:用于配置模型断点保存相关的行为,如保存最优权重,保存最新权重等。同样可以修改 `interval` 控制保存 checkpoint 的间隔。更多设置可参考 [CheckpointHook API](mmengine.hooks.CheckpointHook)

-- `VisualizationHook`:用于配置可视化相关行为,例如在验证或测试时可视化预测结果,默认为关。同时该 Hook 依赖[可视化配置](#TODO)。想要了解详细功能可以参考 [Visualizer](visualization.md)。更多配置可以参考 [VisualizationHook API](mmocr.engine.hooks.VisualizationHook)。

+- `VisualizationHook`:用于配置可视化相关行为,例如在验证或测试时可视化预测结果,**默认为关**。同时该 Hook 依赖[可视化配置](#可视化配置)。想要了解详细功能可以参考 [Visualizer](visualization.md)。更多配置可以参考 [VisualizationHook API](mmocr.engine.hooks.VisualizationHook)。

-如果想进一步了解默认 hook 的配置以及功能,可以参考[钩子(Hook)](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/hook.md)。

+如果想进一步了解默认 hook 的配置以及功能,可以参考 {external+mmengine:doc}`MMEngine: 钩子(Hook) `。

@@ -220,13 +220,13 @@ log_processor = dict(type='LogProcessor',

by_epoch=True)

```

-- 日志配置等级与 [logging](https://docs.python.org/3/library/logging.html) 的配置一致,

+- 日志配置等级与 {external+python:doc}`Python: logging ` 的配置一致,

-- 日志处理器主要用来控制输出的格式,详细功能可参考[记录日志](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/advanced_tutorials/logging.md):

+- 日志处理器主要用来控制输出的格式,详细功能可参考 {external+mmengine:doc}`MMEngine: 记录日志 `:

- `by_epoch=True` 表示按照epoch输出日志,日志格式需要和 `train_cfg` 中的 `type='EpochBasedTrainLoop'` 参数保持一致。例如想按迭代次数输出日志,就需要令 `log_processor` 中的 ` by_epoch=False` 的同时 `train_cfg` 中的 `type = 'IterBasedTrainLoop'`。

- - `window_size` 表示损失的平滑窗口,即最近 `window_size` 次迭代的各种损失的均值。logger 中最终打印的 loss 值为经过各种损失的平均值。

+ - `window_size` 表示损失的平滑窗口,即最近 `window_size` 次迭代的各种损失的均值。logger 中最终打印的 loss 值为各种损失的平均值。

@@ -248,15 +248,15 @@ val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

```

-- `optim_wrapper` : 主要包含两个部分,优化器封装 (OptimWrapper) 以及优化器 (Optimizer)。详情使用信息可见 [MMEngine 优化器封装](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/optim_wrapper.md)

+- `optim_wrapper` : 主要包含两个部分,优化器封装 (OptimWrapper) 以及优化器 (Optimizer)。详情使用信息可见 {external+mmengine:doc}`MMEngine: 优化器封装 `

- 优化器封装支持不同的训练策略,包括混合精度训练(AMP)、梯度累加和梯度截断。

- - 优化器设置中支持了 PyTorch 所有的优化器,所有支持的优化器见 [PyTorch 优化器列表](torch.optim.algorithms)。

+ - 优化器设置中支持了 PyTorch 所有的优化器,所有支持的优化器见 {external+torch:ref}`PyTorch 优化器列表 `。

-- `param_scheduler` : 学习率调整策略,支持大部分 PyTorch 中的学习率调度器,例如 `ExponentialLR`,`LinearLR`,`StepLR`,`MultiStepLR` 等,使用方式也基本一致,所有支持的调度器见[调度器接口文档](mmengine.optim.scheduler), 更多功能可以[参考优化器参数调整策略](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/param_scheduler.md)

+- `param_scheduler` : 学习率调整策略,支持大部分 PyTorch 中的学习率调度器,例如 `ExponentialLR`,`LinearLR`,`StepLR`,`MultiStepLR` 等,使用方式也基本一致,所有支持的调度器见[调度器接口文档](mmengine.optim.scheduler), 更多功能可以参考 {external+mmengine:doc}`MMEngine: 优化器参数调整策略 `。

-- `train/test/val_cfg` : 任务的执行流程,MMEngine 提供了四种流程:`EpochBasedTrainLoop`, `IterBasedTrainLoop`, `ValLoop`, `TestLoop` 更多可以参考[循环控制器](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/runner.md)。

+- `train/test/val_cfg` : 任务的执行流程,MMEngine 提供了四种流程:`EpochBasedTrainLoop`, `IterBasedTrainLoop`, `ValLoop`, `TestLoop` 更多可以参考 {external+mmengine:doc}`MMEngine: 循环控制器 `。

### 数据相关配置

@@ -275,14 +275,14 @@ test_cfg = dict(type='TestLoop')

数据集字段的命名规则在 MMOCR 中为:

```Python

-{数据集名称缩写}_{算法任务}_{训练/测试} = dict(...)

+{数据集名称缩写}_{算法任务}_{训练/测试/验证} = dict(...)

```

- 数据集缩写:见 [数据集名称对应表](#TODO)

- 算法任务:文本检测-det,文字识别-rec,关键信息提取-kie

-- 训练/测试:数据集用于训练还是测试

+- 训练/测试/验证:数据集用于训练,测试还是验证

以识别为例,使用 Syn90k 作为训练集,以 icdar2013 和 icdar2015 作为测试集配置如下:

@@ -319,13 +319,11 @@ ic15_rec_test = dict(

MMOCR 中,数据集的构建与数据准备是相互解耦的。也就是说,`OCRDataset` 等数据集构建类负责完成标注文件的读取与解析功能;而数据变换方法(Data Transforms)则进一步实现了数据读取、数据增强、数据格式化等相关功能。

-同时一般情况下训练和测试会存在不同的增强策略,因此一般会存在训练流水线(train_pipeline)和测试流水线(test_pipeline)。

+同时一般情况下训练和测试会存在不同的增强策略,因此一般会存在训练流水线(train_pipeline)和测试流水线(test_pipeline)。更多信息可以参考[数据流水线](../basic_concepts/transforms.md)

-训练流水线的数据增强流程通常为:数据读取(LoadImageFromFile)->标注信息读取(LoadXXXAnntation)->数据增强->数据格式化(PackXXXInputs)。

+- 训练流水线的数据增强流程通常为:数据读取(LoadImageFromFile)->标注信息读取(LoadXXXAnntation)->数据增强->数据格式化(PackXXXInputs)。

-测试流水线的数据增强流程通常为:数据读取(LoadImageFromFile)->数据增强->标注信息读取(LoadXXXAnntation)->数据格式化(PackXXXInputs)。

-

-更多信息可以参考[数据流水线](../basic_concepts/transforms.md)

+- 测试流水线的数据增强流程通常为:数据读取(LoadImageFromFile)->数据增强->标注信息读取(LoadXXXAnntation)->数据格式化(PackXXXInputs)。

由于 OCR 任务的特殊性,一般情况下不同模型有不同数据增强的方式,相同模型在不同数据集一般也会有不同的数据增强方式。以 CRNN 为例:

@@ -367,7 +365,7 @@ test_pipeline = [

#### Dataloader 配置

-主要为构造数据集加载器(dataloader)所需的配置信息,更多教程看参考[PyTorch 数据加载器](torch.data)。

+主要为构造数据集加载器(dataloader)所需的配置信息,更多教程看参考 {external+torch:doc}`PyTorch 数据加载器 `。

```Python

# Dataloader 部分

@@ -388,7 +386,7 @@ val_dataloader = dict(

sampler=dict(type='DefaultSampler', shuffle=False),

dataset=dict(

type='ConcatDataset',

- datasets=[ic13_rec_test,ic15_rec_test],

+ datasets=[ic13_rec_test, ic15_rec_test],

pipeline=test_pipeline))

test_dataloader = val_dataloader

```

@@ -399,7 +397,7 @@ test_dataloader = val_dataloader

#### 网络配置

-用于配置模型的网络结构,不同的算法任务有不同的网络结构,

+用于配置模型的网络结构,不同的算法任务有不同的网络结构。更多信息可以参考[网络结构](../basic_concepts/structures.md)

##### 文本检测

@@ -493,13 +491,13 @@ load_from = None # 加载checkpoint的路径

resume = False # 是否 resume

```

-更多可以参考[加载权重或恢复训练](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/runner.md)与[OCR进阶技巧-断点恢复训练](https://mmocr.readthedocs.io/zh_CN/dev-1.x/user_guides/train_test.html#id11)。

+更多可以参考 {external+mmengine:ref}`MMEngine: 加载权重或恢复训练 <加载权重或恢复训练>` 与 [OCR 进阶技巧-断点恢复训练](train_test.md#从断点恢复训练)。

### 评测配置

-在模型验证和模型测试中,通常需要对模型精度做定量评测。MMOCR 通过评测指标(Metric)和评测器(Evaluator)来完成这一功能。更多可以参考[评测指标(Metric)和评测器(Evaluator)](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/evaluation.md)

+在模型验证和模型测试中,通常需要对模型精度做定量评测。MMOCR 通过评测指标(Metric)和评测器(Evaluator)来完成这一功能。更多可以参考{external+mmengine:doc}`MMEngine: 评测指标(Metric)和评测器(Evaluator)

` 和 [评测器](../basic_concepts/evaluation.md)

评测部分包含两个部分,评测器和评测指标。接下来我们分部分展开讲解。

@@ -551,13 +549,13 @@ val_evaluator = dict(

#### 评测指标

-评测指标指不同度量精度的方法,同时可以多个评测指标共同使用,更多评测指标原理参考[评测指标](https://github.com/open-mmlab/mmengine/blob/main/docs/zh_cn/tutorials/evaluation.md),在 MMOCR 中不同算法任务有不同的评测指标。

+评测指标指不同度量精度的方法,同时可以多个评测指标共同使用,更多评测指标原理参考 {external+mmengine:doc}`MMEngine: 评测指标 `,在 MMOCR 中不同算法任务有不同的评测指标。 更多 OCR 相关的评测指标可以参考 [评测指标](../basic_concepts/evaluation.md)。

-文字检测: `HmeanIOU`

+文字检测: [`HmeanIOUMetric`](mmocr.evaluation.metrics.HmeanIOUMetric)

-文字识别: `WordMetric`,`CharMetric`, `OneMinusNEDMetric`

+文字识别: [`WordMetric`](mmocr.evaluation.metrics.WordMetric),[`CharMetric`](mmocr.evaluation.metrics.CharMetric), [`OneMinusNEDMetric`](mmocr.evaluation.metrics.OneMinusNEDMetric)

-关键信息提取: `F1Metric`

+关键信息提取: [`F1Metric`](mmocr.evaluation.metrics.F1Metric)

以文本检测为例说明,在单数据集评测情况下,使用单个 `Metric`:

@@ -565,7 +563,7 @@ val_evaluator = dict(

val_evaluator = dict(type='HmeanIOUMetric')

```

-以文本识别为例,多数据集使用多个 `Metric` 评测:

+以文本识别为例,对多个数据集(IC13 和 IC15)用多个 `Metric` (`WordMetric` 和 `CharMetric`)进行评测:

```Python

# 评测部分

@@ -585,7 +583,7 @@ test_evaluator = val_evaluator

### 可视化配置

-每个任务配置该任务对应的可视化器。可视化器主要用于用户模型中间结果的可视化或存储,及 val 和 test 预测结果的可视化。同时可视化的结果可以通过可视化后端储存到不同的后端,比如 Wandb,TensorBoard 等。常用修改操作可见[可视化](visualization.md)。

+每个任务配置该任务对应的可视化器。可视化器主要用于用户模型中间结果的可视化或存储,及 val 和 test 预测结果的可视化。同时可视化的结果可以通过可视化后端储存到不同的后端,比如 WandB,TensorBoard 等。常用修改操作可见[可视化](visualization.md)。

文本检测的可视化默认配置如下:

@@ -599,7 +597,7 @@ visualizer = dict(

## 目录结构

-`MMOCR` 所有配置文件都放置在 `configs` 文件夹下。为了避免配置文件过长,同时提高配置文件的可复用性以及清晰性,MMOCR 利用 Config 文件的继承特性,将配置内容的八个部分做了拆分。因为每部分均与算法任务相关,因此 MMOCR 对每个任务在 Config 中提供了一个任务文件夹,即 `textdet` (文字检测任务)、`textrec` (文字识别任务)、`kie` (关键信息提取)。同时各个任务算法配置文件夹下进一步划分为两个部分:`_base_` 文件夹与诸多算法文件夹:

+`MMOCR` 所有配置文件都放置在 `configs` 文件夹下。为了避免配置文件过长,同时提高配置文件的可复用性以及清晰性,MMOCR 利用 Config 文件的继承特性,将配置内容的八个部分做了拆分。因为每部分均与算法任务相关,因此 MMOCR 对每个任务在 Config 中提供了一个任务文件夹,即 `textdet` (文字检测任务)、`textrecog` (文字识别任务)、`kie` (关键信息提取)。同时各个任务算法配置文件夹下进一步划分为两个部分:`_base_` 文件夹与诸多算法文件夹:

1. `_base_` 文件夹下主要存放与具体算法无关的一些通用配置文件,各部分依目录分为常用的数据集、常用的训练策略以及通用的运行配置。

@@ -607,7 +605,7 @@ visualizer = dict(

1. 算法的模型与数据流水线:OCR 领域中一般情况下数据增强策略与算法强相关,因此模型与数据流水线通常置于统一位置。

- 2. 算法在制定数据集上的特定配置:用于训练和测试的配置,将分散在不同位置的配置汇总。同时修改或配置一些在该数据集特有的配置比如batch size以及一些可能修改如数据流水线,训练策略等

+ 2. 算法在制定数据集上的特定配置:用于训练和测试的配置,将分散在不同位置的 *base* 配置汇总。同时可能会修改一些`_base_`中的变量,如batch size, 数据流水线,训练策略等

最后的将配置内容中的各个模块分布在不同配置文件中,最终各配置文件内容如下:

@@ -632,12 +630,12 @@ visualizer = dict(

数据集配置 |

- | schedulers |

+ schedules |

schedule_adam_600e.py

... |

训练策略配置 |

- defaults_runtime.py

|

+ default_runtime.py

|

- |

环境配置

默认hook配置

日志配置

权重加载配置

评测配置

可视化配置 |

@@ -658,7 +656,7 @@ visualizer = dict(

最终目录结构如下:

```Python

-config

+configs

├── textdet

│ ├── _base_

│ │ ├── datasets

@@ -699,7 +697,7 @@ MMOCR 按照以下风格进行配置文件命名,代码库的贡献者需要

{{算法信息}}_{{模块信息}}_{{训练信息}}_{{数据信息}}.py

```

-- 算法信息(algorithm info):算法名称,如 DBNet,CRNN 等

+- 算法信息(algorithm info):算法名称,如 dbnet, crnn 等

- 模块信息(module info):按照数据流的顺序列举一些中间的模块,其内容依赖于算法任务,同时为了避免Config过长,会省略一些与模型强相关的模块。下面举例说明:

@@ -717,7 +715,7 @@ MMOCR 按照以下风格进行配置文件命名,代码库的贡献者需要

{{算法信息}}_{{backbone}}_{{encoder}}_{{decoder}}_{{训练信息}}_{{数据信息}}.py

```

- 一般情况下 encode 和 decoder 位置一般为算法专有,因此一般省略。

+ 一般情况下 encoder 和 decoder 位置一般为算法专有,因此一般省略。

- 训练信息(training info):训练策略的一些设置,包括 batch size,schedule 等

From 794744826e5d0c7d7fd24eb89a54982bfc06be6d Mon Sep 17 00:00:00 2001

From: liukuikun <24622904+Harold-lkk@users.noreply.github.com>

Date: Fri, 23 Sep 2022 14:53:48 +0800

Subject: [PATCH 15/32] [Config] auto scale lr (#1326)

---

configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py | 1 +

configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py | 2 ++

configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py | 2 ++

configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py | 2 ++

configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py | 2 ++

.../textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py | 2 ++

.../textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py | 2 ++

.../dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_100k_synthtext.py | 2 ++

.../dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_1200e_icdar2015.py | 2 ++

configs/textdet/drrg/drrg_resnet50_fpn-unet_1200e_ctw1500.py | 2 ++

.../textdet/fcenet/fcenet_resnet50-dcnv2_fpn_1500e_ctw1500.py | 2 ++

configs/textdet/fcenet/fcenet_resnet50_fpn_1500e_icdar2015.py | 2 ++

configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_ctw1500.py | 2 ++

.../textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_icdar2015.py | 2 ++

configs/textdet/panet/panet_resnet18_fpem-ffm_600e_ctw1500.py | 2 ++

configs/textdet/panet/panet_resnet18_fpem-ffm_600e_icdar2015.py | 2 ++

configs/textdet/panet/panet_resnet50_fpem-ffm_600e_icdar2017.py | 2 ++

configs/textdet/psenet/psenet_resnet50_fpnf_600e_ctw1500.py | 2 ++

configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2015.py | 2 ++

configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2017.py | 2 ++

.../textsnake/textsnake_resnet50_fpn-unet_1200e_ctw1500.py | 2 ++

configs/textrecog/abinet/abinet-vision_20e_st-an_mj.py | 2 ++

configs/textrecog/abinet/abinet_20e_st-an_mj.py | 2 ++

configs/textrecog/crnn/crnn_mini-vgg_5e_mj.py | 2 ++

configs/textrecog/master/master_resnet31_12e_st_mj_sa.py | 2 ++

configs/textrecog/nrtr/nrtr_modality-transform_6e_st_mj.py | 2 ++

configs/textrecog/nrtr/nrtr_resnet31-1by16-1by8_6e_st_mj.py | 2 ++

.../robustscanner_resnet31_5e_st-sub_mj-sub_sa_real.py | 2 ++

.../sar_resnet31_parallel-decoder_5e_st-sub_mj-sub_sa_real.py | 2 ++

configs/textrecog/satrn/satrn_shallow_5e_st_mj.py | 2 ++

30 files changed, 59 insertions(+)

diff --git a/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py b/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py

index 716661930..bc3d52a1c 100644

--- a/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py

+++ b/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt-openset.py

@@ -68,3 +68,4 @@

visualizer = dict(

type='KIELocalVisualizer', name='visualizer', is_openset=True)

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py b/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py

index 6f979e91c..b56c2b9b6 100644

--- a/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py

+++ b/configs/kie/sdmgr/sdmgr_novisual_60e_wildreceipt.py

@@ -24,3 +24,5 @@

sampler=dict(type='DefaultSampler', shuffle=False),

dataset=wildreceipt_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py b/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py

index 030f3b2c8..d49cbbc33 100644

--- a/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py

+++ b/configs/kie/sdmgr/sdmgr_unet16_60e_wildreceipt.py

@@ -25,3 +25,5 @@

dataset=wildreceipt_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py b/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py

index dba5fd966..c992475cd 100644

--- a/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py

+++ b/configs/textdet/dbnet/dbnet_resnet18_fpnc_100k_synthtext.py

@@ -26,3 +26,5 @@

dataset=st_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py b/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py

index 5294552d0..13751a4ae 100644

--- a/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py

+++ b/configs/textdet/dbnet/dbnet_resnet18_fpnc_1200e_icdar2015.py

@@ -26,3 +26,5 @@

dataset=ic15_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py b/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py

index 63919808a..19c94f89a 100644

--- a/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py

+++ b/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_100k_synthtext.py

@@ -26,3 +26,5 @@

dataset=st_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py b/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

index ab05a2f23..074cf74b4 100644

--- a/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

+++ b/configs/textdet/dbnet/dbnet_resnet50-dcnv2_fpnc_1200e_icdar2015.py

@@ -29,3 +29,5 @@

dataset=ic15_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_100k_synthtext.py b/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_100k_synthtext.py

index 6a12fb549..078cb9583 100644

--- a/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_100k_synthtext.py

+++ b/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_100k_synthtext.py

@@ -30,3 +30,5 @@

pipeline=_base_.test_pipeline))

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_1200e_icdar2015.py b/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_1200e_icdar2015.py

index be14e04f3..6fe192657 100644

--- a/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_1200e_icdar2015.py

+++ b/configs/textdet/dbnetpp/dbnetpp_resnet50-dcnv2_fpnc_1200e_icdar2015.py

@@ -30,3 +30,5 @@

pipeline=_base_.test_pipeline))

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/drrg/drrg_resnet50_fpn-unet_1200e_ctw1500.py b/configs/textdet/drrg/drrg_resnet50_fpn-unet_1200e_ctw1500.py

index 6f876ce87..c6a42b079 100644

--- a/configs/textdet/drrg/drrg_resnet50_fpn-unet_1200e_ctw1500.py

+++ b/configs/textdet/drrg/drrg_resnet50_fpn-unet_1200e_ctw1500.py

@@ -26,3 +26,5 @@

dataset=ctw_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/fcenet/fcenet_resnet50-dcnv2_fpn_1500e_ctw1500.py b/configs/textdet/fcenet/fcenet_resnet50-dcnv2_fpn_1500e_ctw1500.py

index 9e61f8831..c08bb16ed 100644

--- a/configs/textdet/fcenet/fcenet_resnet50-dcnv2_fpn_1500e_ctw1500.py

+++ b/configs/textdet/fcenet/fcenet_resnet50-dcnv2_fpn_1500e_ctw1500.py

@@ -54,3 +54,5 @@

dataset=ctw_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=8)

diff --git a/configs/textdet/fcenet/fcenet_resnet50_fpn_1500e_icdar2015.py b/configs/textdet/fcenet/fcenet_resnet50_fpn_1500e_icdar2015.py

index 93d332d02..5ad6fab31 100644

--- a/configs/textdet/fcenet/fcenet_resnet50_fpn_1500e_icdar2015.py

+++ b/configs/textdet/fcenet/fcenet_resnet50_fpn_1500e_icdar2015.py

@@ -33,3 +33,5 @@

dataset=ic15_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=8)

diff --git a/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_ctw1500.py b/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_ctw1500.py

index 5c269aa2e..fb0186557 100644

--- a/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_ctw1500.py

+++ b/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_ctw1500.py

@@ -55,3 +55,5 @@

dataset=ctw_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=8)

diff --git a/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_icdar2015.py b/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_icdar2015.py

index 07ff14262..399619c9a 100644

--- a/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_icdar2015.py

+++ b/configs/textdet/maskrcnn/mask-rcnn_resnet50_fpn_160e_icdar2015.py

@@ -35,3 +35,5 @@

dataset=ic15_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=8)

diff --git a/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_ctw1500.py b/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_ctw1500.py

index d7142ddce..166b4b146 100644

--- a/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_ctw1500.py

+++ b/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_ctw1500.py

@@ -82,3 +82,5 @@

val_evaluator = dict(

type='HmeanIOUMetric', pred_score_thrs=dict(start=0.3, stop=1, step=0.05))

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=16)

diff --git a/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_icdar2015.py b/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_icdar2015.py

index efeb070d9..4a03cb2dc 100644

--- a/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_icdar2015.py

+++ b/configs/textdet/panet/panet_resnet18_fpem-ffm_600e_icdar2015.py

@@ -31,3 +31,5 @@

val_evaluator = dict(

type='HmeanIOUMetric', pred_score_thrs=dict(start=0.3, stop=1, step=0.05))

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=64)

diff --git a/configs/textdet/panet/panet_resnet50_fpem-ffm_600e_icdar2017.py b/configs/textdet/panet/panet_resnet50_fpem-ffm_600e_icdar2017.py

index 489aa1542..ba8d37c46 100644

--- a/configs/textdet/panet/panet_resnet50_fpem-ffm_600e_icdar2017.py

+++ b/configs/textdet/panet/panet_resnet50_fpem-ffm_600e_icdar2017.py

@@ -77,3 +77,5 @@

val_evaluator = dict(

type='HmeanIOUMetric', pred_score_thrs=dict(start=0.3, stop=1, step=0.05))

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=64)

diff --git a/configs/textdet/psenet/psenet_resnet50_fpnf_600e_ctw1500.py b/configs/textdet/psenet/psenet_resnet50_fpnf_600e_ctw1500.py

index 7fa4eb298..9f36af2c6 100644

--- a/configs/textdet/psenet/psenet_resnet50_fpnf_600e_ctw1500.py

+++ b/configs/textdet/psenet/psenet_resnet50_fpnf_600e_ctw1500.py

@@ -51,3 +51,5 @@

dataset=ctw_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=64 * 4)

diff --git a/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2015.py b/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2015.py

index 11d7ecf8a..fc5561780 100644

--- a/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2015.py

+++ b/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2015.py

@@ -40,3 +40,5 @@

dataset=ic15_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=64 * 4)

diff --git a/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2017.py b/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2017.py

index ad472a21f..a813ea08a 100644

--- a/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2017.py

+++ b/configs/textdet/psenet/psenet_resnet50_fpnf_600e_icdar2017.py

@@ -12,3 +12,5 @@

train_dataloader = dict(dataset=ic17_det_train)

val_dataloader = dict(dataset=ic17_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=64 * 4)

diff --git a/configs/textdet/textsnake/textsnake_resnet50_fpn-unet_1200e_ctw1500.py b/configs/textdet/textsnake/textsnake_resnet50_fpn-unet_1200e_ctw1500.py

index 484b4f26f..525c397fa 100644

--- a/configs/textdet/textsnake/textsnake_resnet50_fpn-unet_1200e_ctw1500.py

+++ b/configs/textdet/textsnake/textsnake_resnet50_fpn-unet_1200e_ctw1500.py

@@ -26,3 +26,5 @@

dataset=ctw_det_test)

test_dataloader = val_dataloader

+

+auto_scale_lr = dict(base_batch_size=4)

diff --git a/configs/textrecog/abinet/abinet-vision_20e_st-an_mj.py b/configs/textrecog/abinet/abinet-vision_20e_st-an_mj.py

index b6f220b85..39a60f783 100644

--- a/configs/textrecog/abinet/abinet-vision_20e_st-an_mj.py

+++ b/configs/textrecog/abinet/abinet-vision_20e_st-an_mj.py

@@ -54,3 +54,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=192 * 8)

diff --git a/configs/textrecog/abinet/abinet_20e_st-an_mj.py b/configs/textrecog/abinet/abinet_20e_st-an_mj.py

index 078bebf40..85b00cd9d 100644

--- a/configs/textrecog/abinet/abinet_20e_st-an_mj.py

+++ b/configs/textrecog/abinet/abinet_20e_st-an_mj.py

@@ -54,3 +54,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=192 * 8)

diff --git a/configs/textrecog/crnn/crnn_mini-vgg_5e_mj.py b/configs/textrecog/crnn/crnn_mini-vgg_5e_mj.py

index 7fd16506c..acc76cdde 100644

--- a/configs/textrecog/crnn/crnn_mini-vgg_5e_mj.py

+++ b/configs/textrecog/crnn/crnn_mini-vgg_5e_mj.py

@@ -45,3 +45,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=64 * 4)

diff --git a/configs/textrecog/master/master_resnet31_12e_st_mj_sa.py b/configs/textrecog/master/master_resnet31_12e_st_mj_sa.py

index 214b2db5e..4695e4cfb 100644

--- a/configs/textrecog/master/master_resnet31_12e_st_mj_sa.py

+++ b/configs/textrecog/master/master_resnet31_12e_st_mj_sa.py

@@ -55,3 +55,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=512 * 4)

diff --git a/configs/textrecog/nrtr/nrtr_modality-transform_6e_st_mj.py b/configs/textrecog/nrtr/nrtr_modality-transform_6e_st_mj.py

index 452831ed7..89784a0e7 100644

--- a/configs/textrecog/nrtr/nrtr_modality-transform_6e_st_mj.py

+++ b/configs/textrecog/nrtr/nrtr_modality-transform_6e_st_mj.py

@@ -51,3 +51,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=384)

diff --git a/configs/textrecog/nrtr/nrtr_resnet31-1by16-1by8_6e_st_mj.py b/configs/textrecog/nrtr/nrtr_resnet31-1by16-1by8_6e_st_mj.py

index f82980aed..3cc9a0d33 100644

--- a/configs/textrecog/nrtr/nrtr_resnet31-1by16-1by8_6e_st_mj.py

+++ b/configs/textrecog/nrtr/nrtr_resnet31-1by16-1by8_6e_st_mj.py

@@ -51,3 +51,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=384)

diff --git a/configs/textrecog/robust_scanner/robustscanner_resnet31_5e_st-sub_mj-sub_sa_real.py b/configs/textrecog/robust_scanner/robustscanner_resnet31_5e_st-sub_mj-sub_sa_real.py

index 5438cef90..2a9edbf15 100644

--- a/configs/textrecog/robust_scanner/robustscanner_resnet31_5e_st-sub_mj-sub_sa_real.py

+++ b/configs/textrecog/robust_scanner/robustscanner_resnet31_5e_st-sub_mj-sub_sa_real.py

@@ -64,3 +64,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=64 * 16)

diff --git a/configs/textrecog/sar/sar_resnet31_parallel-decoder_5e_st-sub_mj-sub_sa_real.py b/configs/textrecog/sar/sar_resnet31_parallel-decoder_5e_st-sub_mj-sub_sa_real.py

index 96626e48f..cfcdf5028 100644

--- a/configs/textrecog/sar/sar_resnet31_parallel-decoder_5e_st-sub_mj-sub_sa_real.py

+++ b/configs/textrecog/sar/sar_resnet31_parallel-decoder_5e_st-sub_mj-sub_sa_real.py

@@ -63,3 +63,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=64 * 48)

diff --git a/configs/textrecog/satrn/satrn_shallow_5e_st_mj.py b/configs/textrecog/satrn/satrn_shallow_5e_st_mj.py

index 76b647585..16a7ef50c 100644

--- a/configs/textrecog/satrn/satrn_shallow_5e_st_mj.py

+++ b/configs/textrecog/satrn/satrn_shallow_5e_st_mj.py

@@ -47,3 +47,5 @@

val_evaluator = dict(

dataset_prefixes=['CUTE80', 'IIIT5K', 'SVT', 'SVTP', 'IC13', 'IC15'])

test_evaluator = val_evaluator

+

+auto_scale_lr = dict(base_batch_size=64 * 8)

From e9d436484287481b47e351cb440f067be9ae170d Mon Sep 17 00:00:00 2001

From: Tong Gao

Date: Fri, 23 Sep 2022 14:54:28 +0800

Subject: [PATCH 16/32] [Fix] ImgAugWrapper: Do not cilp polygons if not

applicables (#1231)

---

mmocr/datasets/transforms/wrappers.py | 7 ++++++-

1 file changed, 6 insertions(+), 1 deletion(-)

diff --git a/mmocr/datasets/transforms/wrappers.py b/mmocr/datasets/transforms/wrappers.py

index f64ffa18e..7a3489ee5 100644

--- a/mmocr/datasets/transforms/wrappers.py

+++ b/mmocr/datasets/transforms/wrappers.py

@@ -1,4 +1,5 @@

# Copyright (c) OpenMMLab. All rights reserved.

+import warnings

from typing import Any, Dict, List, Optional, Tuple, Union

import imgaug

@@ -154,7 +155,11 @@ def _augment_polygons(self, aug: imgaug.augmenters.meta.Augmenter,

removed_poly_inds.append(i)

continue

new_poly = []

- for point in poly.clip_out_of_image(imgaug_polys.shape)[0]:

+ try:

+ poly = poly.clip_out_of_image(imgaug_polys.shape)[0]

+ except Exception as e:

+ warnings.warn(f'Failed to clip polygon out of image: {e}')

+ for point in poly:

new_poly.append(np.array(point, dtype=np.float32))

new_poly = np.array(new_poly, dtype=np.float32).flatten()

# Under some conditions, imgaug can generate "polygon" with only

From 5a88a771c305311fff1e37b45add5f2831d1ff30 Mon Sep 17 00:00:00 2001

From: Xinyu Wang <45810070+xinke-wang@users.noreply.github.com>

Date: Mon, 26 Sep 2022 14:11:04 +0800

Subject: [PATCH 17/32] [Docs] Metrics (#1399)

* init

* fix math

* fix

* apply comments

Co-authored-by: Tong Gao

* apply comments

Co-authored-by: Tong Gao

* apply comments

Co-authored-by: Tong Gao

* fix comments

* update

* update

Co-authored-by: Tong Gao

---

docs/en/basic_concepts/evaluation.md | 196 ++++++++++++++++++++++-

docs/zh_cn/basic_concepts/evaluation.md | 199 +++++++++++++++++++++++-

2 files changed, 392 insertions(+), 3 deletions(-)

diff --git a/docs/en/basic_concepts/evaluation.md b/docs/en/basic_concepts/evaluation.md

index b5313a418..540be3a4d 100644

--- a/docs/en/basic_concepts/evaluation.md

+++ b/docs/en/basic_concepts/evaluation.md

@@ -1,3 +1,197 @@

# Evaluation

-Coming Soon!

+```{note}

+Before reading this document, we recommend that you first read {external+mmengine:doc}`MMEngine: Model Accuracy Evaluation Basics `.

+```

+

+## Metrics

+

+MMOCR implements widely-used evaluation metrics for text detection, text recognition and key information extraction tasks based on the {external+mmengine:doc}`MMEngine: BaseMetric ` base class. Users can specify the metric used in the validation and test phases by modifying the `val_evaluator` and `test_evaluator` fields in the configuration file. For example, the following config shows how to use `HmeanIOUMetric` to evaluate the model performance in text detection task.

+

+```python

+val_evaluator = dict(type='HmeanIOUMetric')

+test_evaluator = val_evaluator

+

+# In addition, MMOCR also supports the combined evaluation of multiple metrics for the same task, such as using WordMetric and CharMetric at the same time

+val_evaluator = [

+ dict(type='WordMetric', mode=['exact', 'ignore_case', 'ignore_case_symbol']),

+ dict(type='CharMetric')

+]

+```

+

+```{tip}

+More evaluation related configurations can be found in the [evaluation configuration tutorial](../user_guides/config.md#evaluation-configuration).

+```

+

+As shown in the following table, MMOCR currently supports 5 evaluation metrics for text detection, text recognition, and key information extraction tasks, including `HmeanIOUMetric`, `WordMetric`, `CharMetric`, `OneMinusNEDMetric`, and `F1Metric`.

+

+| | | | |

+| --------------------------------------- | ------- | ------------------------------------------------- | --------------------------------------------------------------------- |

+| Metric | Task | Input Field | Output Field |

+| [HmeanIOUMetric](#hmeanioumetric) | TextDet | `pred_polygons`

`pred_scores`

`gt_polygons` | `recall`

`precision`

`hmean` |

+| [WordMetric](#wordmetric) | TextRec | `pred_text`

`gt_text` | `word_acc`

`word_acc_ignore_case`

`word_acc_ignore_case_symbol` |

+| [CharMetric](#charmetric) | TextRec | `pred_text`

`gt_text` | `char_recall`

`char_precision` |

+| [OneMinusNEDMetric](#oneminusnedmetric) | TextRec | `pred_text`

`gt_text` | `1-N.E.D` |

+| [F1Metric](#f1metric) | KIE | `pred_labels`

`gt_labels` | `macro_f1`

`micro_f1` |

+

+In general, the evaluation metric used in each task is conventionally determined. Users usually do not need to understand or manually modify the internal implementation of the evaluation metric. However, to facilitate more customized requirements, this document will further introduce the specific implementation details and configurable parameters of the built-in metrics in MMOCR.

+

+### HmeanIOUMetric

+

+[HmeanIOUMetric](mmocr.evaluation.metrics.hmean_iou_metric.HmeanIOUMetric) is one of the most widely used evaluation metrics in text detection tasks, because it calculates the harmonic mean (H-mean) between the detection precision (P) and recall rate (R). The `HmeanIOUMetric` can be calculated by the following equation:

+

+```{math}

+H = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2PR}{P+R}

+```

+

+In addition, since it is equivalent to the F-score (also known as F-measure or F-metric) when {math}`\beta = 1`, `HmeanIOUMetric` is sometimes written as `F1Metric` or `f1-score`:

+

+```{math}

+F_1=(1+\beta^2)\cdot\frac{PR}{\beta^2\cdot P+R} = \frac{2PR}{P+R}

+```

+

+In MMOCR, the calculation of `HmeanIOUMetric` can be summarized as the following steps:

+

+1. Filter out invalid predictions

+

+ - Filter out predictions with a score is lower than `pred_score_thrs`

+ - Filter out predictions overlapping with `ignored` ground truth boxes with an overlap ratio higher than `ignore_precision_thr`

+

+ It is worth noting that `pred_score_thrs` will **automatically search** for the **best threshold** within a certain range by default, and users can also customize the search range by manually modifying the configuration file:

+

+ ```python

+ # By default, HmeanIOUMetric searches the best threshold within the range [0.3, 0.9] with a step size of 0.1

+ val_evaluator = dict(type='HmeanIOUMetric', pred_score_thrs=dict(start=0.3, stop=0.9, step=0.1))

+ ```

+

+2. Calculate the IoU matrix

+

+ - At the data processing stage, `HmeanIOUMetric` will calculate and maintain an {math}`M \times N` IoU matrix `iou_metric` for the convenience of the subsequent bounding box pairing step. Here, M and N represent the number of label bounding boxes and filtered prediction bounding boxes, respectively. Therefore, each element of this matrix stores the IoU between the m-th label bounding box and the n-th prediction bounding box.

+

+3. Compute the number of GT samples that can be accurately matched based on the corresponding pairing strategy

+

+ Although `HmeanIOUMetric` can be calculated by a fixed formula, there may still be some subtle differences in the specific implementations. These differences mainly reflect the use of different strategies to match gt and predicted bounding boxes, which leads to the difference in final scores. Currently, MMOCR supports two matching strategies, namely `vanilla` and `max_matching`, for the `HmeanIOUMetric`. As shown below, users can specify the matching strategies in the config.

+

+ - `vanilla` matching strategy

+

+ By default, `HmeanIOUMetric` adopts the `vanilla` matching strategy, which is consistent with the `hmean-iou` implementation in MMOCR 0.x and the **official** text detection competition evaluation standard of ICDAR series. The matching strategy adopts the first-come-first-served matching method to pair the labels and predictions.

+

+ ```python

+ # By default, HmeanIOUMetric adopts 'vanilla' matching strategy

+ val_evaluator = dict(type='HmeanIOUMetric')

+ ```

+

+ - `max_matching` matching strategy

+

+ To address the shortcomings of the existing matching mechanism, MMOCR has implemented a more efficient matching strategy to maximize the number of matches.

+

+ ```python

+ # Specify to use 'max_matching' matching strategy

+ val_evaluator = dict(type='HmeanIOUMetric', strategy='max_matching')

+ ```

+

+ ```{note}

+ We recommend that research-oriented developers use the default `vanilla` matching strategy to ensure consistency with other papers. For industry-oriented developers, you can use the `max_matching` matching strategy to achieve optimized performance.

+ ```

+

+4. Compute the final evaluation score according to the aforementioned matching strategy

+

+### WordMetric

+

+[WordMetric](mmocr.evaluation.metrics.recog_metric.WordMetric) implements **word-level** text recognition evaluation metrics and includes three text matching modes, namely `exact`, `ignore_case`, and `ignore_case_symbol`. Users can freely combine the output of one or more text matching modes in the configuration file by modifying the `mode` field.

+

+```python

+# Use WordMetric for text recognition task

+val_evaluator = [

+ dict(type='WordMetric', mode=['exact', 'ignore_case', 'ignore_case_symbol'])

+]

+```

+

+- `exact`:Full matching mode, i.e., only when the predicted text and the ground truth text are exactly the same, the predicted text is considered to be correct.

+- `ignore_case`:The mode ignores the case of the predicted text and the ground truth text.

+- `ignore_case_symbol`:The mode ignores the case and symbols of the predicted text and the ground truth text. This is also the text recognition accuracy reported by most academic papers. The performance reported by MMOCR uses the `ignore_case_symbol` mode by default.

+

+Assume that the real label is `MMOCR!` and the model output is `mmocr`. The `WordMetric` scores under the three matching modes are: `{'exact': 0, 'ignore_case': 0, 'ignore_case_symbol': 1}`.

+

+### CharMetric

+

+[CharMetric](mmocr.evaluation.metrics.recog_metric.CharMetric) implements **character-level** text recognition evaluation metrics that are **case-insensitive**.

+

+```python

+# Use CharMetric for text recognition task

+val_evaluator = [dict(type='CharMetric')]

+```

+

+Specifically, `CharMetric` will output two evaluation metrics, namely `char_precision` and `char_recall`. Let the number of correctly predicted characters (True Positive) be {math}`\sigma_{tp}`, then the precision *P* and recall *R* can be calculated by the following equation:

+

+```{math}

+P=\frac{\sigma_{tp}}{\sigma_{gt}}, R = \frac{\sigma_{tp}}{\sigma_{pred}}

+```

+

+where {math}`\sigma_{gt}` and {math}`\sigma_{pred}` represent the total number of characters in the label text and the predicted text, respectively.

+

+For example, assume that the label text is "MM**O**CR" and the predicted text is "mm**0**cR**1**". The score of the `CharMetric` is:

+

+```{math}

+P=\frac{4}{5}, R=\frac{4}{6}

+```

+

+### OneMinusNEDMetric

+

+[OneMinusNEDMetric(1-N.E.D)](mmocr.evaluation.metrics.recog_metric.OneMinusNEDMetric) is commonly used for text recognition evaluation of Chinese or English **text line-level** annotations. Unlike the full matching metric that requires the prediction and the gt text to be exactly the same, `1-N.E.D` uses the normalized [edit distance](https://en.wikipedia.org/wiki/Edit_distance) (also known as Levenshtein Distance) to measure the difference between the predicted and the gt text, so that the performance difference of the model can be better distinguished when evaluating long texts. Assume that the real and predicted texts are {math}`s_i` and {math}`\hat{s_i}`, respectively, and their lengths are {math}`l_{i}` and {math}`\hat{l_i}`, respectively. The `OneMinusNEDMetric` score can be calculated by the following formula:

+

+```{math}

+score = 1 - \frac{1}{N}\sum_{i=1}^{N}\frac{D(s_i, \hat{s_{i}})}{max(l_{i},\hat{l_{i}})}

+```

+

+where *N* is the total number of samples, and {math}`D(s_1, s_2)` is the edit distance between two strings.

+

+For example, assume that the real label is "OpenMMLabMMOCR", the prediction of model A is "0penMMLabMMOCR", and the prediction of model B is "uvwxyz". The results of the full matching and `OneMinusNEDMetric` evaluation metrics are as follows:

+

+| | | |

+| ------- | ---------- | ---------- |

+| | Full-match | 1 - N.E.D. |

+| Model A | 0 | 0.92857 |

+| Model B | 0 | 0 |

+

+As shown in the table above, although the model A only predicted one letter incorrectly, both models got 0 in when using full-match strategy. However, the `OneMinusNEDMetric` evaluation metric can better distinguish the performance of the two models on **long texts**.

+

+### F1Metric

+

+[F1Metric](mmocr.evaluation.metrics.f_metric.F1Metric) implements the F1-Metric evaluation metric for KIE tasks and provides two modes, namely `micro` and `macro`.

+

+```python

+val_evaluator = [

+ dict(type='F1Metric', mode=['micro', 'macro'],

+]

+```

+

+- `micro` mode: Calculate the global F1-Metric score based on the total number of True Positive, False Negative, and False Positive.

+

+- `macro` mode:Calculate the F1-Metric score for each class and then take the average.

+

+### Customized Metric

+

+MMOCR supports the implementation of customized evaluation metrics for users who pursue higher customization. In general, users only need to create a customized evaluation metric class `CustomizedMetric` and inherit {external+mmengine:doc}`MMEngine: BaseMetric `. Then, the data format processing method `process` and the metric calculation method `compute_metrics` need to be overwritten respectively. Finally, add it to the `METRICS` registry to implement any customized evaluation metric.

+

+```python

+from mmengine.evaluator import BaseMetric

+from mmocr.registry import METRICS

+

+@METRICS.register_module()

+class CustomizedMetric(BaseMetric):

+

+ def process(self, data_batch: Sequence[Dict], predictions: Sequence[Dict]):

+ """ process receives two parameters, data_batch stores the gt label information, and predictions stores the predicted results.

+ """

+ pass

+

+ def compute_metrics(self, results: List):

+ """ compute_metric receives the results of the process method as input and returns the evaluation results.

+ """

+ pass

+```

+

+```{note}

+More details can be found in {external+mmengine:doc}`MMEngine Documentation: BaseMetric `.

+```

diff --git a/docs/zh_cn/basic_concepts/evaluation.md b/docs/zh_cn/basic_concepts/evaluation.md

index 8d1229e5c..272754c00 100644

--- a/docs/zh_cn/basic_concepts/evaluation.md

+++ b/docs/zh_cn/basic_concepts/evaluation.md

@@ -1,3 +1,198 @@

-# 评估

+# 模型评测

-待更新

+```{note}

+阅读此文档前,建议您先了解 {external+mmengine:doc}`MMEngine: 模型精度评测基本概念 `。

+```

+

+## 评测指标

+

+MMOCR 基于 {external+mmengine:doc}`MMEngine: BaseMetric ` 基类实现了常用的文本检测、文本识别以及关键信息抽取任务的评测指标,用户可以通过修改配置文件中的 `val_evaluator` 与 `test_evaluator` 字段来便捷地指定验证与测试阶段采用的评测方法。例如,以下配置展示了如何在文本检测算法中使用 `HmeanIOUMetric` 来评测模型性能。

+

+```python

+# 文本检测任务中通常使用 HmeanIOUMetric 来评测模型性能

+val_evaluator = [dict(type='HmeanIOUMetric')]

+

+# 此外,MMOCR 也支持相同任务下的多种指标组合评测,如同时使用 WordMetric 及 CharMetric

+val_evaluator = [

+ dict(type='WordMetric', mode=['exact', 'ignore_case', 'ignore_case_symbol']),

+ dict(type='CharMetric')

+]

+```

+

+```{tip}

+更多评测相关配置请参考[评测配置教程](../user_guides/config.md#评测配置)。

+```

+

+如下表所示,MMOCR 目前针对文本检测、识别、及关键信息抽取等任务共内置了 5 种评测指标,分别为 `HmeanIOUMetric`,`WordMetric`,`CharMetric`,`OneMinusNEDMetric`,和 `F1Metric`。

+

+| | | | |

+| --------------------------------------- | ------------ | ------------------------------------------------- | --------------------------------------------------------------------- |

+| 评测指标 | 任务类型 | 输入字段 | 输出字段 |

+| [HmeanIOUMetric](#hmeanioumetric) | 文本检测 | `pred_polygons`

`pred_scores`

`gt_polygons` | `recall`

`precision`

`hmean` |

+| [WordMetric](#wordmetric) | 文本识别 | `pred_text`

`gt_text` | `word_acc`

`word_acc_ignore_case`

`word_acc_ignore_case_symbol` |

+| [CharMetric](#charmetric) | 文本识别 | `pred_text`

`gt_text` | `char_recall`

`char_precision` |

+| [OneMinusNEDMetric](#oneminusnedmetric) | 文本识别 | `pred_text`

`gt_text` | `1-N.E.D` |

+| [F1Metric](#f1metric) | 关键信息抽取 | `pred_labels`

`gt_labels` | `macro_f1`

`micro_f1` |

+

+通常来说,每一类任务所采用的评测标准是约定俗成的,用户一般无须深入了解或手动修改评测方法的内部实现。然而,为了方便用户实现更加定制化的需求,本文档将进一步介绍了 MMOCR 内置评测算法的具体实现策略,以及可配置参数。

+

+### HmeanIOUMetric

+

+[HmeanIOUMetric](mmocr.evaluation.metrics.hmean_iou_metric.HmeanIOUMetric) 是文本检测任务中应用最广泛的评测指标之一,因其计算了检测精度(Precision)与召回率(Recall)之间的调和平均数(Harmonic mean, H-mean),故得名 `HmeanIOUMetric`。记精度为 *P*,召回率为 *R*,则 `HmeanIOUMetric` 可由下式计算得到:

+

+```{math}

+H = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2PR}{P+R}

+```

+

+另外,由于其等价于 {math}`\beta = 1` 时的 F-score (又称 F-measure 或 F-metric),`HmeanIOUMetric` 有时也被写作 `F1Metric` 或 `f1-score` 等:

+

+```{math}

+F_1=(1+\beta^2)\cdot\frac{PR}{\beta^2\cdot P+R} = \frac{2PR}{P+R}

+```

+

+在 MMOCR 的设计中,`HmeanIOUMetric` 的计算可以概括为以下几个步骤:

+

+1. 过滤无效的预测边界盒

+

+ - 依据置信度阈值 `pred_score_thrs` 过滤掉得分较低的预测边界盒

+ - 依据 `ignore_precision_thr` 阈值过滤掉与 `ignored` 样本重合度过高的预测边界盒

+

+ 值得注意的是,`pred_score_thrs` 默认将**自动搜索**一定范围内的**最佳阈值**,用户也可以通过手动修改配置文件来自定义搜索范围:

+

+ ```python

+ # HmeanIOUMetric 默认以 0.1 为步长搜索 [0.3, 0.9] 范围内的最佳得分阈值

+ val_evaluator = dict(type='HmeanIOUMetric', pred_score_thrs=dict(start=0.3, stop=0.9, step=0.1))

+ ```

+

+2. 计算 IoU 矩阵

+

+ - 在数据处理阶段,`HmeanIOUMetric` 会计算并维护一个 {math}`M \times N` 的 IoU 矩阵 `iou_metric`,以方便后续的边界盒配对步骤。其中,M 和 N 分别为标签边界盒与过滤后预测边界盒的数量。由此,该矩阵的每个元素都存放了第 m 个标签边界盒与第 n 个预测边界盒之间的交并比(IoU)。

+

+3. 基于相应的配对策略统计能被准确匹配的 GT 样本数

+

+ 尽管 `HmeanIOUMetric` 可以由固定的公式计算取得,不同的任务或算法库内部的具体实现仍可能存在一些细微差别。这些差异主要体现在采用不同的策略来匹配真实与预测边界盒,从而导致最终得分的差距。目前,MMOCR 内部的 `HmeanIOUMetric` 共支持两种不同的匹配策略,即 `vanilla` 与 `max_matching`。如下所示,用户可以通过修改配置文件来指定不同的匹配策略。

+

+ - `vanilla` 匹配策略

+

+ `HmeanIOUMetric` 默认采用 `vanilla` 匹配策略,该实现与 MMOCR 0.x 版本中的 `hmean-iou` 及 ICDAR 系列**官方文本检测竞赛的评测标准保持一致**,采用先到先得的匹配方式对标签边界盒(Ground-truth bbox)与预测边界盒(Predicted bbox)进行配对。

+

+ ```python

+ # 不指定 strategy 时,HmeanIOUMetric 默认采用 'vanilla' 匹配策略

+ val_evaluator = dict(type='HmeanIOUMetric')

+ ```

+

+ - `max_matching` 匹配策略

+

+ 针对现有匹配机制中的不完善之处,MMOCR 算法库实现了一套更高效的匹配策略,用以最大化匹配数目。

+

+ ```python

+ # 指定采用 'max_matching' 匹配策略

+ val_evaluator = dict(type='HmeanIOUMetric', strategy='max_matching')

+ ```

+

+ ```{note}

+ 我们建议面向学术研究的开发用户采用默认的 `vanilla` 匹配策略,以保证与其他论文的对比结果保持一致。而面向工业应用的开发用户则可以采用 `max_matching` 匹配策略,以获得精准的结果。

+ ```

+

+4. 根据上文介绍的 `HmeanIOUMetric` 公式计算最终的评测得分

+

+### WordMetric

+

+[WordMetric](mmocr.evaluation.metrics.recog_metric.WordMetric) 实现了**单词级别**的文本识别评测指标,并内置了 `exact`,`ignore_case`,及 `ignore_case_symbol` 三种文本匹配模式,用户可以在配置文件中修改 `mode` 字段来自由组合输出一种或多种文本匹配模式下的 `WordMetric` 得分。

+

+```python

+# 在文本识别任务中使用 WordMetric 评测

+val_evaluator = [

+ dict(type='WordMetric', mode=['exact', 'ignore_case', 'ignore_case_symbol'])

+]

+```

+

+- `exact`:全匹配模式,即,预测与标签完全一致才能被记录为正确样本。

+- `ignore_case`:忽略大小写的匹配模式。

+- `ignore_case_symbol`:忽略大小写及符号的匹配模式,这也是大部分学术论文中报告的文本识别准确率;MMOCR 报告的识别模型性能默认采用该匹配模式。

+

+假设真实标签为 `MMOCR!`,模型的输出结果为 `mmocr`,则三种匹配模式下的 `WordMetric` 得分分别为:`{'exact': 0, 'ignore_case': 0, 'ignore_case_symbol': 1}`。

+

+### CharMetric

+

+[CharMetric](mmocr.evaluation.metrics.recog_metric.CharMetric) 实现了**不区分大小写**的**字符级别**的文本识别评测指标。

+

+```python

+# 在文本识别任务中使用 CharMetric 评测

+val_evaluator = [dict(type='CharMetric')]

+```

+

+具体而言,`CharMetric` 会输出两个评测评测指标,即字符精度 `char_precision` 和字符召回率 `char_recall`。设正确预测的字符(True Positive)数量为 {math}`\sigma_{tp}`,则精度 *P* 和召回率 *R* 可由下式计算取得:

+

+```{math}

+P=\frac{\sigma_{tp}}{\sigma_{gt}}, R = \frac{\sigma_{tp}}{\sigma_{pred}}

+```

+

+其中,{math}`\sigma_{gt}` 与 {math}`\sigma_{pred}` 分别为标签文本与预测文本所包含的字符总数。

+

+例如,假设标签文本为 "MM**O**CR",预测文本为 "mm**0**cR**1**",则使用 `CharMetric` 评测指标的得分为:

+

+```{math}

+P=\frac{4}{5}, R=\frac{4}{6}

+```

+

+### OneMinusNEDMetric

+

+[`OneMinusNEDMetric(1-N.E.D)`](mmocr.evaluation.metrics.recog_metric.OneMinusNEDMetric) 常用于中文或英文**文本行级别**标注的文本识别评测,不同于全匹配的评测标准要求预测与真实样本完全一致,该评测指标使用归一化的[编辑距离](https://en.wikipedia.org/wiki/Edit_distance)(Edit Distance,又名莱温斯坦距离 Levenshtein Distance)来测量预测文本与真实文本之间的差异性,从而在评测长文本样本时能够更好地区分出模型的性能差异。假设真实和预测文本分别为 {math}`s_i` 和 {math}`\hat{s_i}`,其长度分别为 {math}`l_{i}` 和 {math}`\hat{l_i}`,则 `OneMinusNEDMetric` 得分可由下式计算得到:

+

+```{math}

+score = 1 - \frac{1}{N}\sum_{i=1}^{N}\frac{D(s_i, \hat{s_{i}})}{max(l_{i},\hat{l_{i}})}

+```

+

+其中,*N* 是样本总数,{math}`D(s_1, s_2)` 为两个字符串之间的编辑距离。

+

+例如,假设真实标签为 "OpenMMLabMMOCR",模型 A 的预测结果为 "0penMMLabMMOCR", 模型 B 的预测结果为 "uvwxyz",则采用全匹配和 `OneMinusNEDMetric` 评测指标的结果分别为:

+

+| | | |

+| ------ | ------ | ---------- |

+| | 全匹配 | 1 - N.E.D. |

+| 模型 A | 0 | 0.92857 |

+| 模型 B | 0 | 0 |

+

+由上表可以发现,尽管模型 A 仅预测错了一个字母,而模型 B 全部预测错误,在使用全匹配的评测指标时,这两个模型的得分都为0;而使用 `OneMinuesNEDMetric` 的评测指标则能够更好地区分模型在**长文本**上的性能差异。

+

+### F1Metric

+

+[F1Metric](mmocr.evaluation.metrics.f_metric.F1Metric) 实现了针对 KIE 任务的 F1-Metric 评测指标,并提供了 `micro` 和 `macro` 两种评测模式。

+

+```python

+val_evaluator = [

+ dict(type='F1Metric', mode=['micro', 'macro'],

+]

+```

+

+- `micro` 模式:依据 True Positive,False Negative,及 False Positive 总数来计算全局 F1-Metric 得分。

+

+- `macro` 模式:依据类别标签计算每一类的 F1-Metric,并求平均值。

+

+### 自定义评测指标

+

+对于追求更高定制化功能的用户,MMOCR 也支持自定义实现不同类型的评测指标。一般来说,用户只需要新建自定义评测指标类 `CustomizedMetric` 并继承 {external+mmengine:doc}`MMEngine: BaseMetric `,然后分别重写数据格式处理方法 `process` 以及指标计算方法 `compute_metrics`。最后,将其加入 `METRICS` 注册器即可实现任意定制化的评测指标。

+

+```python

+from mmengine.evaluator import BaseMetric

+from mmocr.registry import METRICS

+

+@METRICS.register_module()

+class CustomizedMetric(BaseMetric):

+

+ def process(self, data_batch: Sequence[Dict], predictions: Sequence[Dict]):

+ """ process 接收两个参数,分别为 data_batch 存放真实标签信息,以及 predictions

+ 存放预测结果。process 方法负责将标签信息转换并存放至 self.results 变量中

+ """

+ pass

+

+ def compute_metrics(self, results: List):

+ """ compute_metric 使用经过 process 方法处理过的标签数据计算最终评测得分

+ """

+ pass

+```

+

+```{note}

+更多内容可参见 {external+mmengine:doc}`MMEngine 文档: BaseMetric `。

+```

From 77ab13b3ffe8f5d4011748cbc20f1e7f91728454 Mon Sep 17 00:00:00 2001

From: Tong Gao

Date: Tue, 27 Sep 2022 10:44:32 +0800

Subject: [PATCH 18/32] [Docs] Add version switcher to menu (#1407)

* [Docs] Add version switcher to menu

* fix link

---

docs/en/conf.py | 27 +++++++++++++++++++++++++++

docs/zh_cn/conf.py | 23 +++++++++++++++++++++++

2 files changed, 50 insertions(+)

diff --git a/docs/en/conf.py b/docs/en/conf.py

index a0e96d834..e87a4a1b3 100644

--- a/docs/en/conf.py

+++ b/docs/en/conf.py

@@ -95,6 +95,15 @@

'name':

'Upstream',

'children': [

+ {

+ 'name':

+ 'MMEngine',

+ 'url':

+ 'https://github.com/open-mmlab/mmengine',

+ 'description':

+ 'Foundational library for training deep '

+ 'learning models'

+ },

{

'name': 'MMCV',

'url': 'https://github.com/open-mmlab/mmcv',

@@ -107,6 +116,24 @@

},

]

},

+ {

+ 'name':

+ 'Version',

+ 'children': [

+ {

+ 'name': 'MMOCR 0.x',

+ 'url': 'https://mmocr.readthedocs.io/en/latest/',

+ 'description': 'Main branch'

+ },

+ {

+ 'name': 'MMOCR 1.x',

+ 'url': 'https://mmocr.readthedocs.io/en/dev-1.x/',

+ 'description': '1.x branch'

+ },

+ ],

+ 'active':

+ True,

+ },

],

# Specify the language of shared menu

'menu_lang':

diff --git a/docs/zh_cn/conf.py b/docs/zh_cn/conf.py

index 91038a717..61a07194b 100644

--- a/docs/zh_cn/conf.py

+++ b/docs/zh_cn/conf.py

@@ -96,6 +96,11 @@

'name':

'上游库',

'children': [

+ {

+ 'name': 'MMEngine',

+ 'url': 'https://github.com/open-mmlab/mmengine',

+ 'description': '深度学习模型训练基础库'

+ },

{

'name': 'MMCV',

'url': 'https://github.com/open-mmlab/mmcv',

@@ -108,6 +113,24 @@

},

]

},

+ {

+ 'name':

+ '版本',

+ 'children': [

+ {

+ 'name': 'MMOCR 0.x',

+ 'url': 'https://mmocr.readthedocs.io/zh_CN/latest/',

+ 'description': 'main 分支文档'

+ },

+ {

+ 'name': 'MMOCR 1.x',

+ 'url': 'https://mmocr.readthedocs.io/zh_CN/dev-1.x/',

+ 'description': '1.x 分支文档'

+ },

+ ],

+ 'active':

+ True,

+ },

],

# Specify the language of shared menu

'menu_lang':

From 22283b4acd047bd67184019cb37eec1c3116ebde Mon Sep 17 00:00:00 2001

From: Xinyu Wang <45810070+xinke-wang@users.noreply.github.com>

Date: Tue, 27 Sep 2022 10:48:41 +0800

Subject: [PATCH 19/32] [Docs] Data Transforms (#1392)

* init

* reorder

* update

* fix comments

* update

* update images

* update

---

docs/en/basic_concepts/transforms.md | 230 ++++++++++++++++++++++-

docs/zh_cn/basic_concepts/transforms.md | 231 +++++++++++++++++++++++-

docs/zh_cn/migration/dataset.md | 2 +-

3 files changed, 458 insertions(+), 5 deletions(-)

diff --git a/docs/en/basic_concepts/transforms.md b/docs/en/basic_concepts/transforms.md

index ef62fde8d..a5974cf7d 100644

--- a/docs/en/basic_concepts/transforms.md

+++ b/docs/en/basic_concepts/transforms.md

@@ -1,3 +1,229 @@

-# Data Transforms

+# Data Transforms and Pipeline

-Coming Soon!

+In the design of MMOCR, dataset construction and preparation are decoupled. That is, dataset construction classes such as [`OCRDataset`](mmocr.datasets.ocr_dataset.OCRDataset) are responsible for loading and parsing annotation files; while data transforms further apply data preprocessing, augmentation, formatting, and other related functions. Currently, there are five types of data transforms implemented in MMOCR, as shown in the following table.

+

+| | | |

+| -------------------------------- | --------------------------------------------------------------------- | --------------------------------------------------------------------------------------------------- |

+| Transforms Type | File | Description |

+| Data Loading | loading.py | Implemented the data loading functions. |

+| Data Formatting | formatting.py | Formatting the data required by different tasks. |

+| Cross Project Data Adapter | adapters.py | Converting the data format between other OpenMMLab projects and MMOCR. |

+| Data Augmentation Functions | ocr_transforms.py

textdet_transforms.py

textrecog_transforms.py | Various built-in data augmentation methods designed for different tasks. |

+| Wrappers of Third Party Packages | wrappers.py | Wrapping the transforms implemented in popular third party packages such as [ImgAug](https://github.com/aleju/imgaug), and adapting them to MMOCR format. |

+

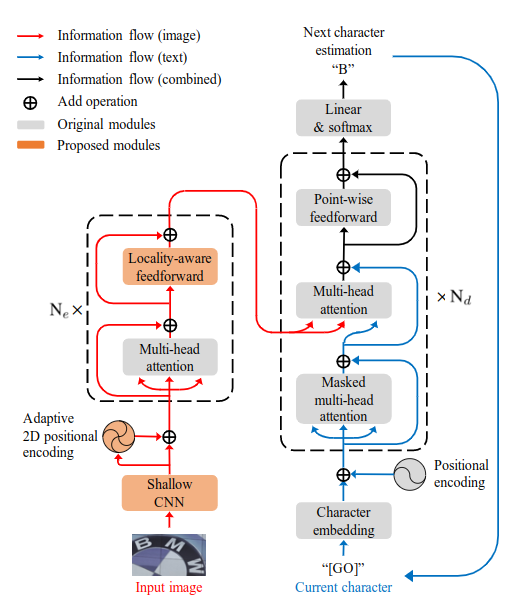

+Since each data transform class is independent of each other, we can easily combine any data transforms to build a data pipeline after we have defined the data fields. As shown in the following figure, in MMOCR, a typical training data pipeline consists of three stages: **data loading**, **data augmentation**, and **data formatting**. Users only need to define the data pipeline list in the configuration file and specify the specific data transform class and its parameters:

+

+

+

+

+

+

+

+```python

+train_pipeline_r18 = [

+ # Loading images

+ dict(

+ type='LoadImageFromFile',

+ file_client_args=file_client_args,

+ color_type='color_ignore_orientation'),

+ # Loading annotations

+ dict(

+ type='LoadOCRAnnotations',

+ with_polygon=True,

+ with_bbox=True,

+ with_label=True,

+ ),

+ # Data augmentation

+ dict(

+ type='ImgAugWrapper',

+ args=[['Fliplr', 0.5],

+ dict(cls='Affine', rotate=[-10, 10]), ['Resize', [0.5, 3.0]]]),

+ dict(type='RandomCrop', min_side_ratio=0.1),

+ dict(type='Resize', scale=(640, 640), keep_ratio=True),

+ dict(type='Pad', size=(640, 640)),

+ # Data formatting

+ dict(

+ type='PackTextDetInputs',

+ meta_keys=('img_path', 'ori_shape', 'img_shape'))

+]

+```

+