'

+ for category in categories

+ ]

+ new_index = index_lines[:list_start_index + 1]

+ new_index.extend(categories_entries)

+ new_index.extend(index_lines[list_end_index:])

+ new_index_content = "\n".join(new_index)

+ write_file(path=GALLERY_INDEX_PATH, content=new_index_content)

+

+

+GALLERY_PAGE_TEMPLATE = """

+# Example Workflows - {category}

+

+Below you can find example workflows you can use as inspiration to build your apps.

+

+{examples}

+""".strip()

+

+

+def generate_gallery_page_for_category(

+ category: str,

+ entries: List[WorkflowGalleryEntry],

+) -> None:

+ examples = [

+ generate_gallery_entry_docs(entry=entry)

+ for entry in entries

+ ]

+ page_content = GALLERY_PAGE_TEMPLATE.format(

+ category=category,

+ examples="\n\n".join(examples)

+ )

+ file_path = generate_gallery_page_file_path(category=category)

+ write_file(path=file_path, content=page_content)

+

+

+GALLERY_ENTRY_TEMPLATE = """

+## {title}

+

+{description}

+

+??? tip "Workflow definition"

+

+ ```json

+ {workflow_definition}

+ ```

+""".strip()

+

+

+def generate_gallery_entry_docs(entry: WorkflowGalleryEntry) -> str:

+ return GALLERY_ENTRY_TEMPLATE.format(

+ title=entry.use_case_title,

+ description=entry.use_case_description,

+ workflow_definition="\n\t".join(json.dumps(entry.workflow_definition, indent=4).split("\n")),

+ )

+

+

+def read_file(path: str) -> str:

+ with open(path, "r") as f:

+ return f.read()

+

+

+def write_file(path: str, content: str) -> None:

+ path = os.path.abspath(path)

+ parent_dir = os.path.dirname(path)

+ os.makedirs(parent_dir, exist_ok=True)

+ with open(path, "w") as f:

+ f.write(content)

+

+

+def find_line_with_marker(lines: List[str], marker: str) -> Optional[int]:

+ for i, line in enumerate(lines):

+ if marker in line:

+ return i

+ return None

+

+

+def generate_gallery_page_link(category: str) -> str:

+ file_path = generate_gallery_page_file_path(category=category)

+ return file_path[len(DOCS_ROOT):-3]

+

+

+def generate_gallery_page_file_path(category: str) -> str:

+ category_slug = slugify_category(category=category)

+ return os.path.join(GALLERY_DIR_PATH, f"{category_slug}.md")

+

+

+def slugify_category(category: str) -> str:

+ return category.lower().replace(" ", "_").replace("/", "_")

+

+

+if __name__ == "__main__":

+ generate_gallery()

diff --git a/docs/enterprise/active-learning/active_learning.md b/docs/enterprise/active-learning/active_learning.md

index 512cc72ace..89262d462e 100644

--- a/docs/enterprise/active-learning/active_learning.md

+++ b/docs/enterprise/active-learning/active_learning.md

@@ -36,7 +36,7 @@ Active learning can be disabled by setting `ACTIVE_LEARNING_ENABLED=false` in th

## Usage patterns

Active Learning data collection may be combined with different components of the Roboflow ecosystem. In particular:

-- the `inference` Python package can be used to get predictions from the model and register them at Roboflow platform

+- the `inference` Python package can be used to get predictions from the model and register them on the Roboflow platform

- one may want to use `InferencePipeline` to get predictions from video and register its video frames using Active Learning

- self-hosted `inference` server - where data is collected while processing requests

- Roboflow hosted `inference` - where you let us make sure you get your predictions and data registered. No

diff --git a/docs/foundation/clip.md b/docs/foundation/clip.md

index 632e248716..15499c82e2 100644

--- a/docs/foundation/clip.md

+++ b/docs/foundation/clip.md

@@ -18,7 +18,7 @@ In this guide, we will show:

## How can I use CLIP model in `inference`?

- directly from `inference[clip]` package, integrating the model directly into your code

-- using `inference` HTTP API (hosted locally, or at Roboflow platform), integrating via HTTP protocol

+- using `inference` HTTP API (hosted locally, or on the Roboflow platform), integrating via HTTP protocol

- using `inference-sdk` package (`pip install inference-sdk`) and [`InferenceHTTPClient`](/docs/inference_sdk/http_client.md)

- creating custom code to make HTTP requests (see [API Reference](/api/))

diff --git a/docs/inference_helpers/inference_sdk.md b/docs/inference_helpers/inference_sdk.md

index c1b0c301fc..f94cc0b47d 100644

--- a/docs/inference_helpers/inference_sdk.md

+++ b/docs/inference_helpers/inference_sdk.md

@@ -7,7 +7,7 @@ You can use this client to run models hosted:

1. On the Roboflow platform (use client version `v0`), and;

2. On device with Inference.

-For models trained at Roboflow platform, client accepts the following inputs:

+For models trained on the Roboflow platform, client accepts the following inputs:

- A single image (Given as a local path, URL, `np.ndarray` or `PIL.Image`);

- Multiple images;

@@ -60,7 +60,7 @@ result = loop.run_until_complete(

)

```

-## Configuration options (used for models trained at Roboflow platform)

+## Configuration options (used for models trained on the Roboflow platform)

### configuring with context managers

@@ -195,8 +195,8 @@ Methods that support batching / parallelism:

## Client for core models

-`InferenceHTTPClient` now supports core models hosted via `inference`. Part of the models can be used at Roboflow hosted

-inference platform (use `https://infer.roboflow.com` as url), other are possible to be deployed locally (usually

+`InferenceHTTPClient` now supports core models hosted via `inference`. Part of the models can be used on the Roboflow

+hosted inference platform (use `https://infer.roboflow.com` as url), other are possible to be deployed locally (usually

local server will be available under `http://localhost:9001`).

!!! tip

@@ -705,12 +705,12 @@ to prevent errors)

## Why does the Inference client have two modes (`v0` and `v1`)?

We are constantly improving our `infrence` package - initial version (`v0`) is compatible with

-models deployed at Roboflow platform (task types: `classification`, `object-detection`, `instance-segmentation` and

+models deployed on the Roboflow platform (task types: `classification`, `object-detection`, `instance-segmentation` and

`keypoints-detection`)

are supported. Version `v1` is available in locally hosted Docker images with HTTP API.

Locally hosted `inference` server exposes endpoints for model manipulations, but those endpoints are not available

-at the moment for models deployed at Roboflow platform.

+at the moment for models deployed on the Roboflow platform.

`api_url` parameter passed to `InferenceHTTPClient` will decide on default client mode - URLs with `*.roboflow.com`

will be defaulted to version `v0`.

diff --git a/docs/workflows/about.md b/docs/workflows/about.md

index 0449dfc901..d18d93a867 100644

--- a/docs/workflows/about.md

+++ b/docs/workflows/about.md

@@ -1,23 +1,34 @@

-# Inference Workflows

+# Workflows

-## What is a Workflow?

+## What is Roboflow Workflows?

-Workflows allow you to define multi-step processes that run one or more models to return results based on model outputs and custom logic.

+Roboflow Workflows is an ecosystem that enables users to create machine learning applications using a wide range

+of pluggable and reusable blocks. These blocks are organized in a way that makes it easy for users to design

+and connect different components. Graphical interface allows to visually construct workflows

+without needing extensive technical expertise. Once the workflow is designed, Workflows engine runs the

+application, ensuring all the components work together seamlessly, providing a rapid transition

+from prototype to production-ready solutions, allowing you to quickly iterate and deploy applications.

+

+Roboflow offers a growing selection of workflows blocks, and the community can also create new blocks, ensuring

+that the ecosystem is continuously expanding and evolving. Moreover, Roboflow provides flexible deployment options,

+including on-premises and cloud-based solutions, allowing users to deploy their applications in the environment

+that best suits their needs.

With Workflows, you can:

-- Detect, classify, and segment objects in images.

-- Apply logic filters such as establish detection consensus or filter detections by confidence.

+- Detect, classify, and segment objects in images using state-of-the-art models.

+

- Use Large Multimodal Models (LMMs) to make determinations at any stage in a workflow.

+- Introduce elements of business logic to translate model predictions into your domain language

+

-You can build and configure Workflows in the Roboflow web interface that you can then deploy using the Roboflow Hosted API, self-host locally and on the cloud using inference, or offline to your hardware devices. You can also build more advanced workflows by writing a Workflow configuration directly in the JSON editor.

In this section of documentation, we walk through what you need to know to create and run workflows. Let’s get started!

diff --git a/docs/workflows/blocks_bundling.md b/docs/workflows/blocks_bundling.md

new file mode 100644

index 0000000000..fd565b792b

--- /dev/null

+++ b/docs/workflows/blocks_bundling.md

@@ -0,0 +1,121 @@

+# Bundling Workflows blocks

+

+To efficiently manage the Workflows ecosystem, a standardized method for building and distributing blocks is

+essential. This allows users to create their own blocks and bundle them into Workflow plugins. A Workflow plugin

+is essentially a Python library that implements a defined interface and can be structured in various ways.

+

+This page outlines the mandatory interface requirements and suggests a structure for blocks that aligns with

+the [Workflows versioning](/workflows/versioning) guidelines.

+

+## Proposed structure of plugin

+

+We propose the following structure of plugin:

+

+```

+.

+├── requirements.txt # file with requirements

+├── setup.py # use different package creation method if you like

+├── {plugin_name}

+│ ├── __init__.py # main module that contains loaders

+│ ├── kinds.py # optionally - definitions of custom kinds

+│ ├── {block_name} # package for your block

+│ │ ├── v1.py # version 1 of your block

+│ │ ├── ... # ... next versions

+│ │ └── v5.py # version 5 of your block

+│ └── {block_name} # package for another block

+└── tests # tests for blocks

+```

+

+## Required interface

+

+Plugin must only provide few extensions to `__init__.py` in main package

+compared to standard Python library:

+

+* `load_blocks()` function to provide list of blocks' classes (required)

+

+* `load_kinds()` function to return all custom [kinds](/workflows/kinds/) the plugin defines (optional)

+

+* `REGISTERED_INITIALIZERS` module property which is a dict mapping name of block

+init parameter into default value or parameter-free function constructing that value - optional

+

+

+### `load_blocks()` function

+

+Function is supposed to enlist all blocks in the plugin - it is allowed to define

+a block once.

+

+Example:

+

+```python

+from typing import List, Type

+from inference.core.workflows.prototypes.block import WorkflowBlock

+

+# example assumes that your plugin name is `my_plugin` and

+# you defined the blocks that are imported here

+from my_plugin.block_1.v1 import Block1V1

+from my_plugin.block_2.v1 import Block2V1

+

+def load_blocks() -> List[Type[WorkflowBlock]]:

+ return [

+ Block1V1,

+ Block2V1,

+]

+```

+

+### `load_kinds()` function

+

+`load_kinds()` function to return all custom kinds the plugin defines. It is optional as your blocks

+may not need custom kinds.

+

+Example:

+

+```python

+from typing import List

+from inference.core.workflows.execution_engine.entities.types import Kind

+

+# example assumes that your plugin name is `my_plugin` and

+# you defined the imported kind

+from my_plugin.kinds import MY_KIND

+

+

+def load_kinds() -> List[Kind]:

+ return [MY_KIND]

+```

+

+

+## `REGISTERED_INITIALIZERS` dictionary

+

+As you know from [the docs describing the Workflows Compiler](/workflows/workflows_compiler/)

+and the [blocks development guide](/workflows/create_workflow_block/), Workflow

+blocs are dynamically initialized during compilation and may require constructor

+parameters. Those parameters can default to values registered in the `REGISTERED_INITIALIZERS`

+dictionary. To expose default a value for an init parameter of your block -

+simply register the name of the init param and its value (or a function generating a value) in the dictionary.

+This is optional part of the plugin interface, as not every block requires a constructor.

+

+Example:

+

+```python

+import os

+

+def init_my_param() -> str:

+ # do init here

+ return "some-value"

+

+REGISTERED_INITIALIZERS = {

+ "param_1": 37,

+ "param_2": init_my_param,

+}

+```

+

+## Enabling plugin in your Workflows ecosystem

+

+To load a plugin you must:

+

+* install the Python package with the plugin in the environment you run Workflows

+

+* export an environment variable named `WORKFLOWS_PLUGINS` set to a comma-separated list of names

+of plugins you want to load.

+

+ * Example: to load two plugins `plugin_a` and `plugin_b`, you need to run

+ `export WORKFLOWS_PLUGINS="plugin_a,plugin_b"`

diff --git a/docs/workflows/blocks_connections.md b/docs/workflows/blocks_connections.md

new file mode 100644

index 0000000000..ae53ae73a7

--- /dev/null

+++ b/docs/workflows/blocks_connections.md

@@ -0,0 +1,75 @@

+# Rules dictating which blocks can be connected

+

+A natural question you might ask is: *How do I know which blocks to connect to achieve my desired outcome?*

+This is a crucial question, which is why we've created auto-generated

+[documentation for all supported Workflow blocks](/workflows/blocks/). In this guide, we’ll show you how to use

+these docs effectively and explain key details that help you understand why certain connections between

+blocks are possible, while others may not be.

+

+!!! Note

+

+ Using the Workflows UI in the Roboflow APP you may find compatible connections between steps found

+ automatically without need for your input. This page explains briefly how to deduce if two

+ blocks can be connected, making it possible to connect steps manually if needed. Logically,

+ the page must appear before a link to [blocks gallery](/workflows/blocks/), as it explains

+ how to effectively use these docs. At the same time, it introduces references to concepts

+ further explained in the User and Developer Guide. Please continue reading those sections

+ if you find some concepts presented here needing further explanation.

+

+

+## Navigation the blocks documentation

+

+When you open the blocks documentation, you’ll see a list of all blocks supported by Roboflow. Each block entry

+includes a name, brief description, category, and license for the block. You can click on any block to see more

+detailed information.

+

+On the block details page, you’ll find documentation for all supported versions of that block,

+starting with the latest version. For each version, you’ll see:

+

+- detailed description of the block

+

+- type identifier, which is required in Workflow definitions to identify the specific block used for a step

+

+- table of configuration properties, listing the fields that can be specified in a Workflow definition,

+including their types, descriptions, and whether they can accept a dynamic selector or just a fixed value.

+

+- Available connections, showing which blocks can provide inputs to this block and which can use its outputs.

+

+- A list of input and output bindings:

+

+ - input bindings are the names of step definition properties that can hold selectors, along with the type

+ (or `kind`) of data they pass.

+

+ - output bindings are names and kinds for block outputs that can be used as inputs by steps defined in

+ Workflow definition

+

+- An example of a Workflow step based on the documented block.

+

+The `kind` mentioned above refers to the type of data flowing through the connection during execution,

+and this is further explained in the developer guide.

+

+## What makes connections valid?

+

+Each block provides a manifest that lists the fields to be included in the Workflow Definition when creating a step.

+The Values of these fields in a Workflow Definition may contain:

+

+- References ([selectors](/workflows/definitions/)) to data the block will process, such as step outputs or

+[batch-oriented workflow inputs](/workflows/workflow_execution)

+

+- Configuration values: Specific settings for the step or references ([selectors](/workflows/definitions/)) that

+provide configuration parameters dynamically during execution.

+

+The manifest also includes the block's outputs.

+

+For each step definition field (if it can hold a [selector](/workflows/definitions/)) and step output,

+the expected [kind](/workflows/kinds) is specified. A [kind](/workflows/kinds) is a high-level definition

+of the type of data that will be passed during workflow execution. Simply put, it describes the data that

+will replace the [selector](/workflows/definitions/) during block execution.

+

+To ensure steps are correctly connected, the Workflow Compiler checks if the input and output [kinds](/workflows/kinds)

+match. If they do, the connection is valid.

+

+Additionally, the [`dimensionality level`](/workflows/workflow_execution#dimensionality-level) of the data is considered when

+validating connections. This ensures that data from multiple sources is compatible across the entire Workflow,

+not just between two connected steps. More details on dimensionality levels can be found in the

+[user guide describing workflow execution](/workflows/workflow_execution).

\ No newline at end of file

diff --git a/docs/workflows/create_and_run.md b/docs/workflows/create_and_run.md

index d562475483..1669cad929 100644

--- a/docs/workflows/create_and_run.md

+++ b/docs/workflows/create_and_run.md

@@ -1,220 +1,161 @@

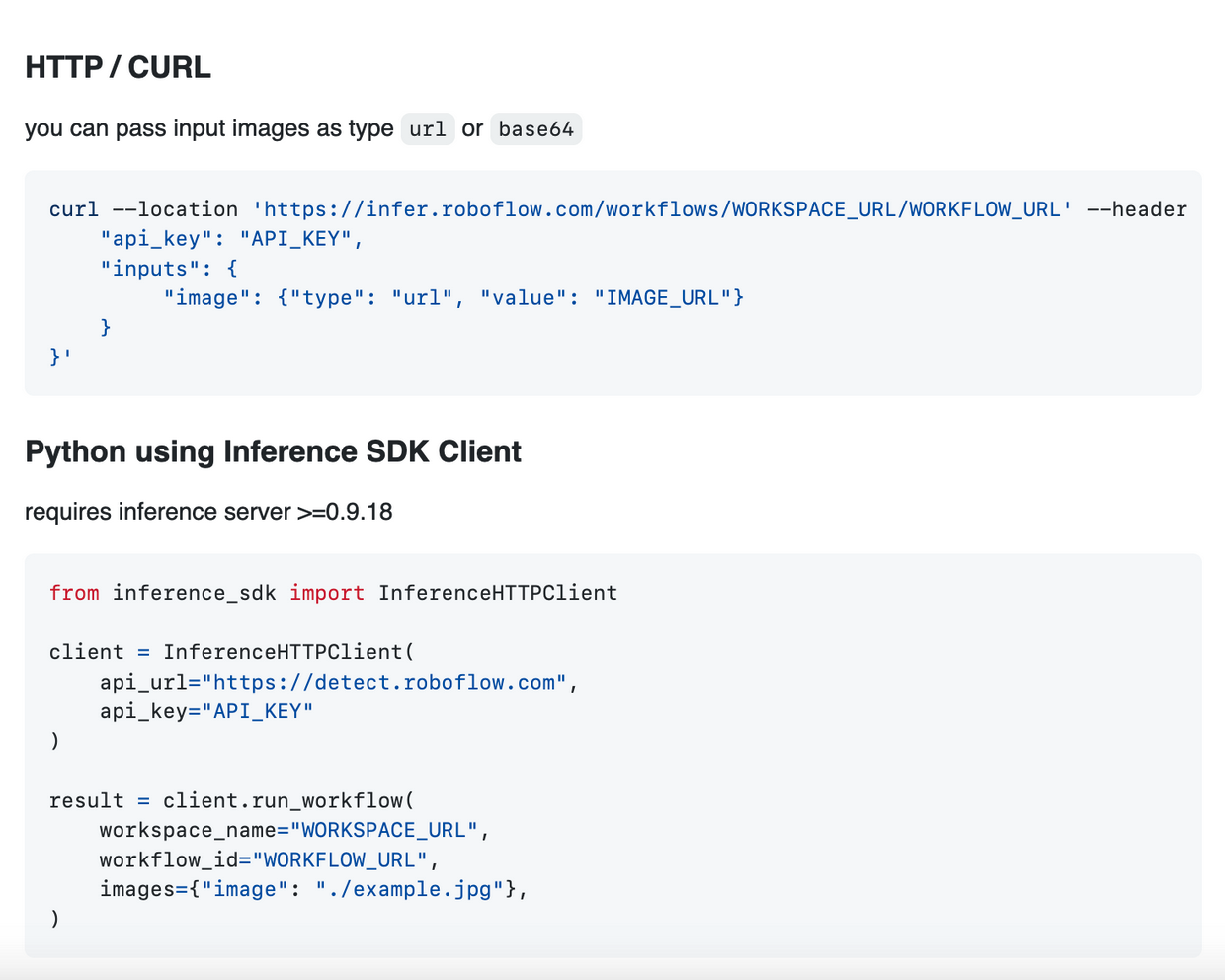

# How to Create and Run a Workflow

-## Example (Web App)

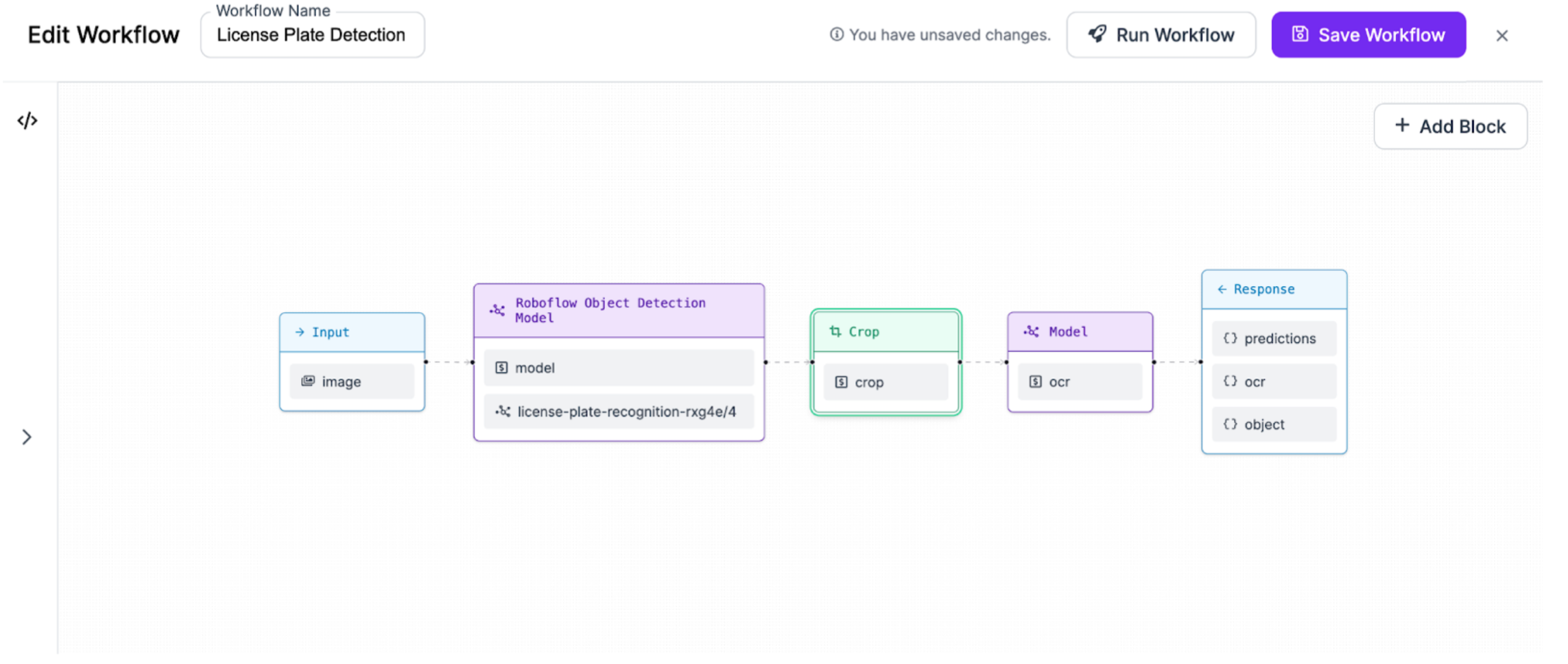

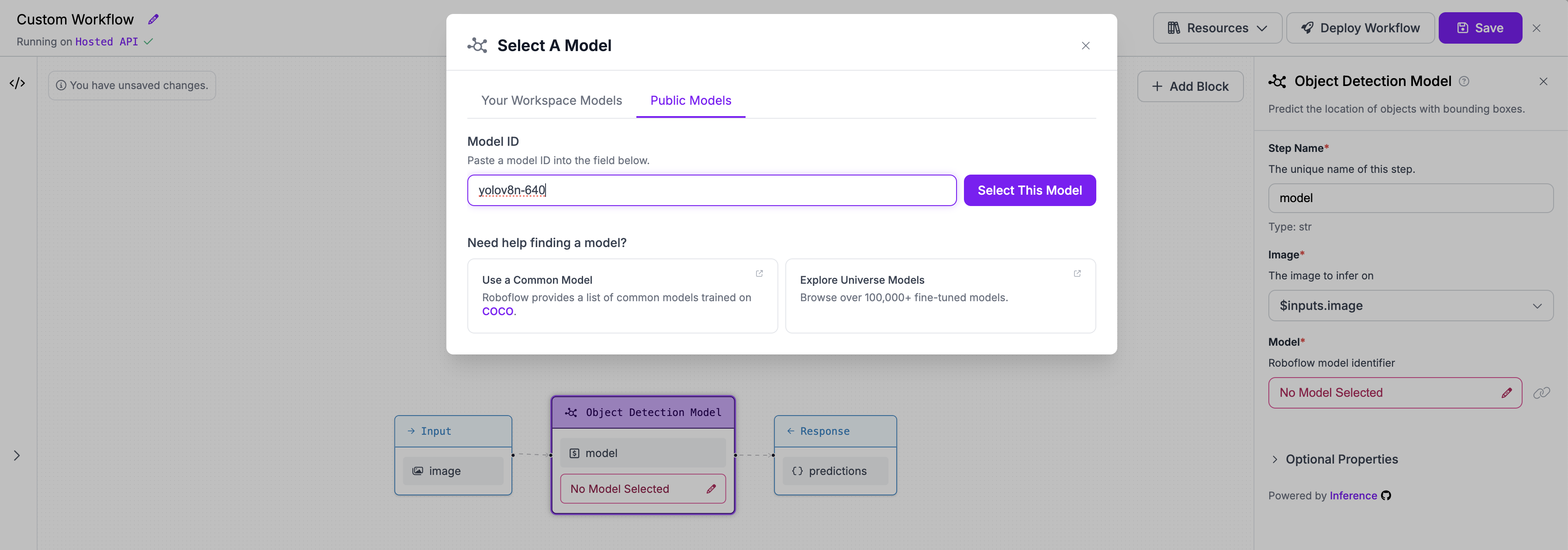

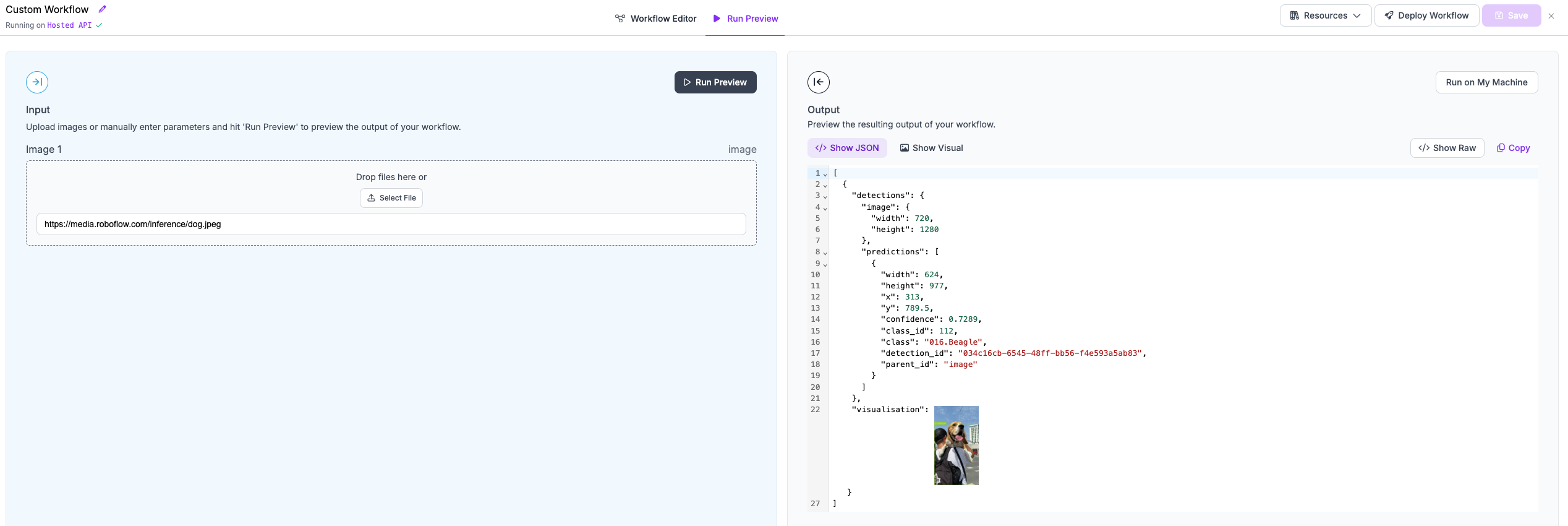

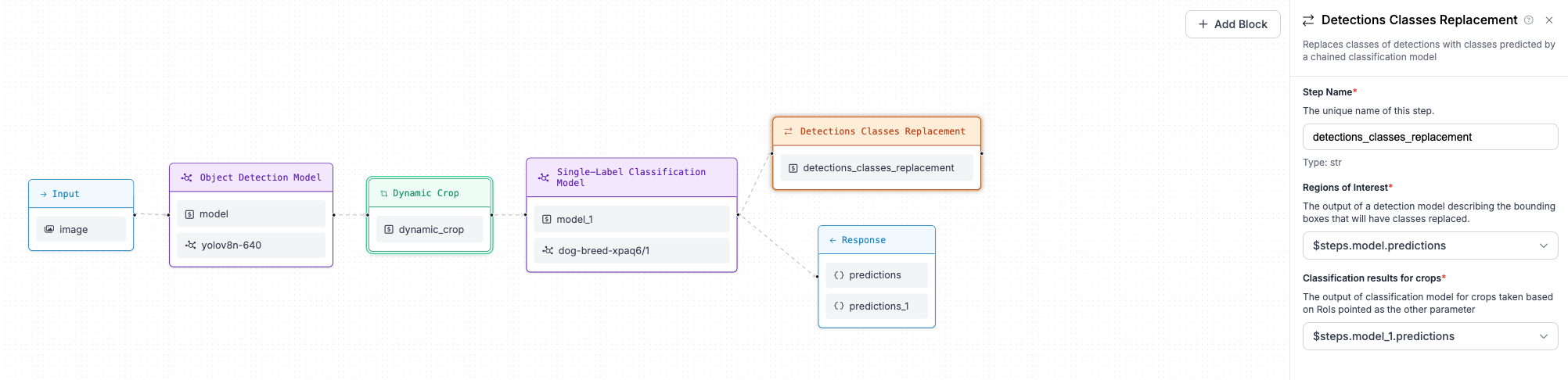

+In this example, we are going to build a Workflow from scratch that detects dogs, classifies their breeds and

+visualizes results.

-In this example, we are going to build a Workflow from scratch that detects license plates, crops the license plate, and then runs OCR on the license plate.

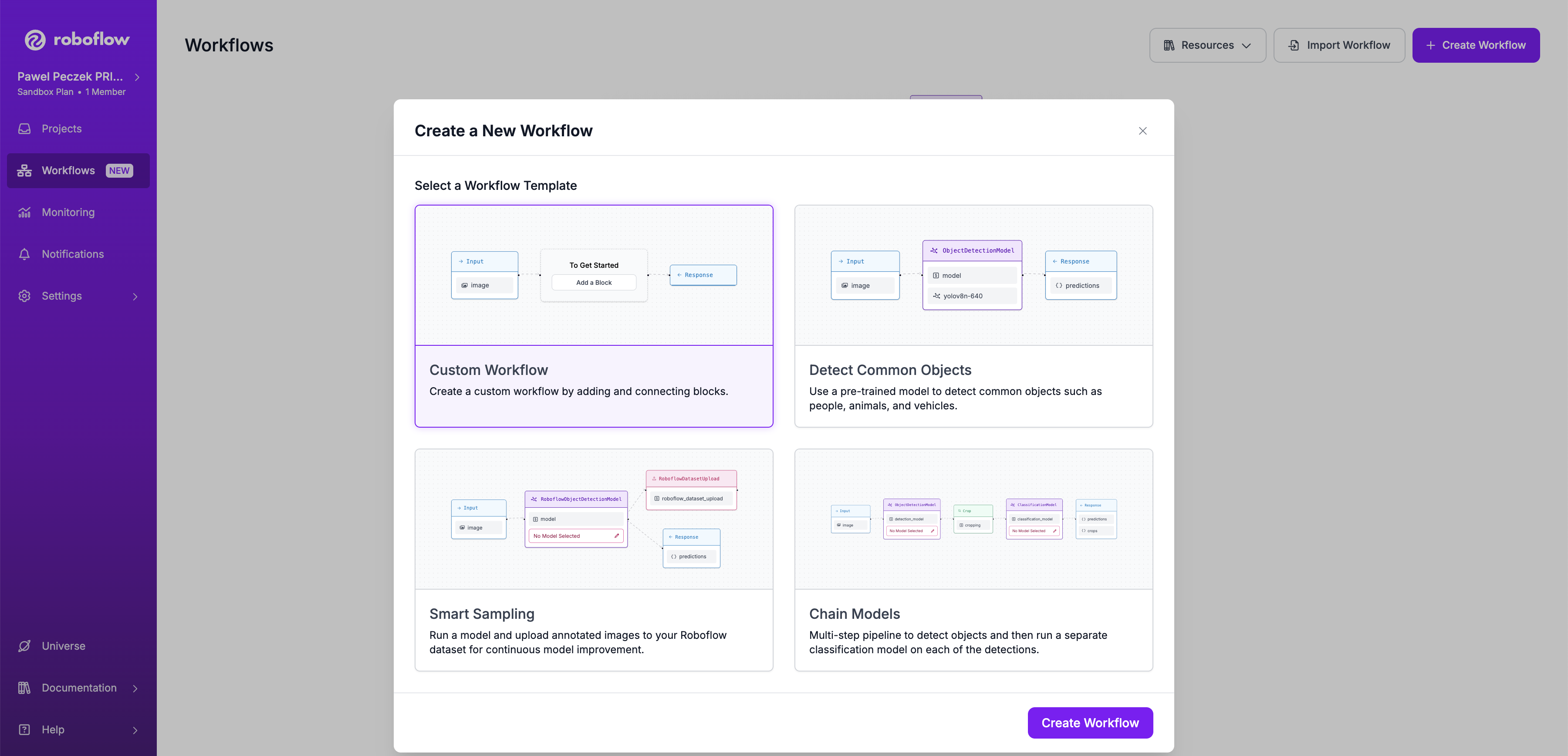

+## Step 1: Create a Workflow

-### Step 1: Create a Workflow

+Open [https://app.roboflow.com/](https://app.roboflow.com/) in your browser, and navigate to the Workflows tab to click

+the Create Workflows button. Select Custom Workflow to start the creation process.

-Navigate to the Workflows tab at the top of your workspace and select the Create Workflows button. We are going to start with a Single Model Workflow.

+

-

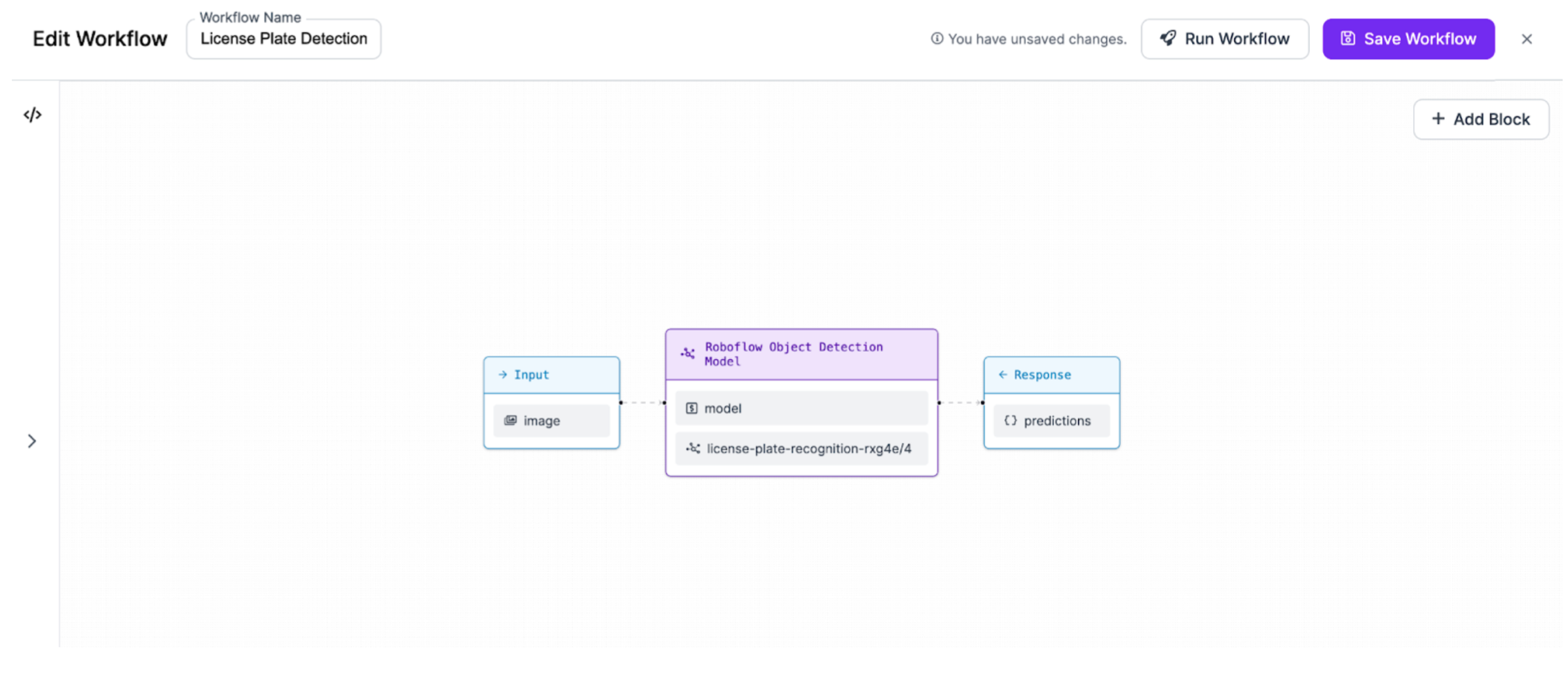

-### Step 2: Add Crop

+## Step 2: Add an object detection model

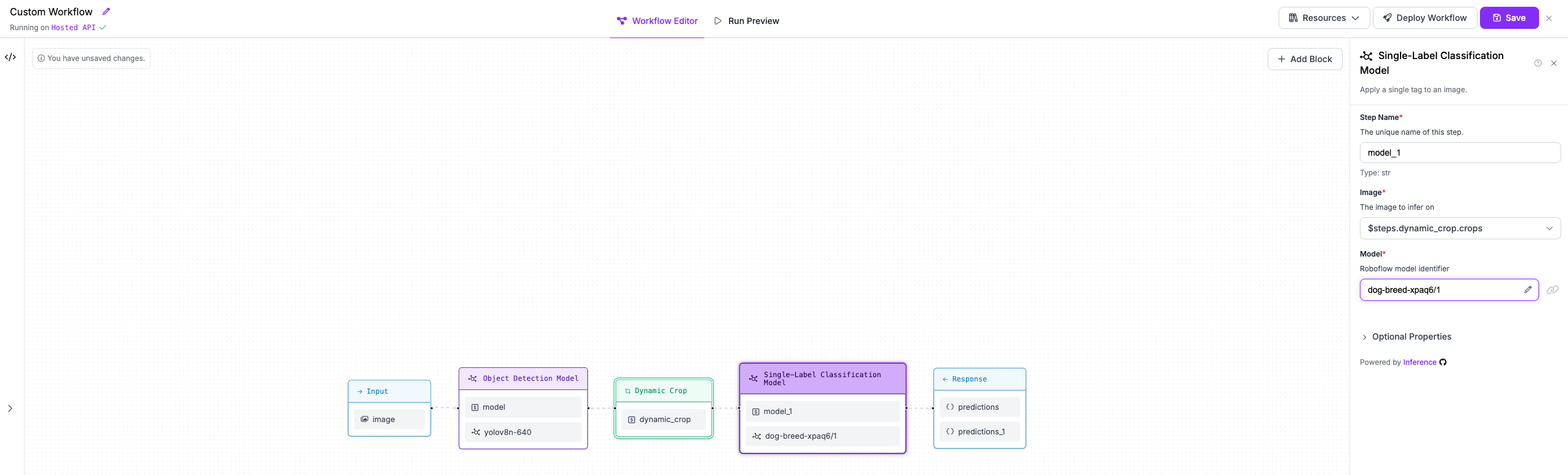

+We need to add a block with an object detection model to the existing workflow. We will use the `yolov8n-640` model.

-Next, we are going to add a block to our Workflow that crops the objects that our first model detects.

-

-

-

-### Step 3: Add OCR

+

-We are then going to add an OCR model for text recognition to our Workflow. We will need to adjust the parameters in order to set the cropped object from our previous block as the input for this block.

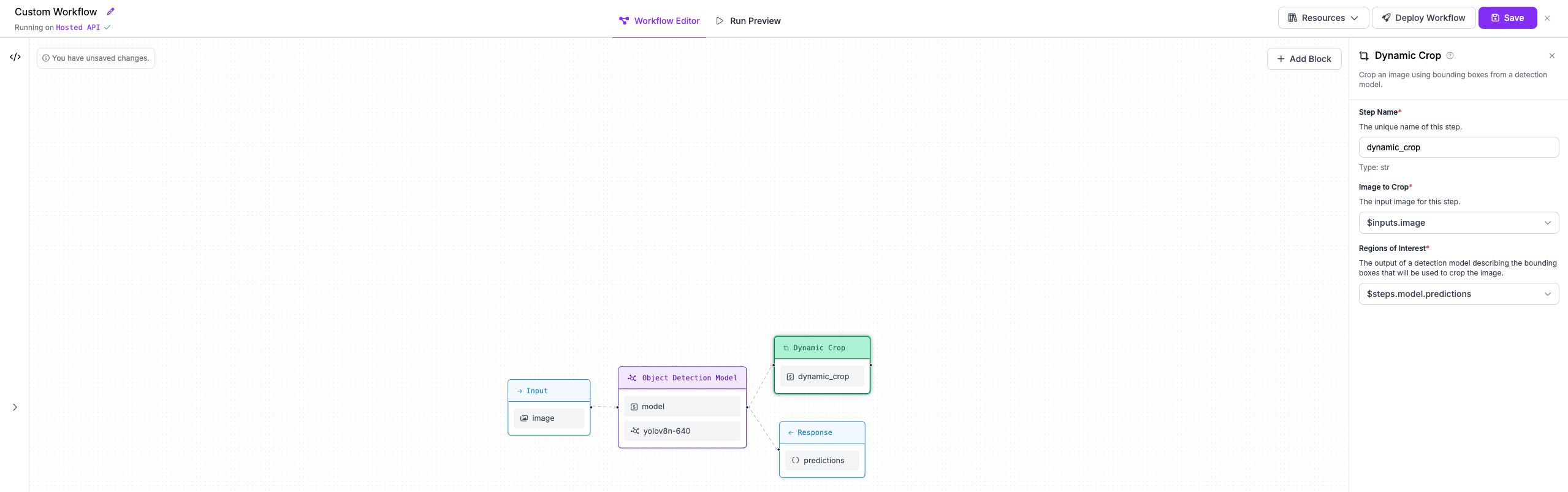

+## Step 3: Crop each detected object to run breed classification

-

-

-### Step 4: Add outputs to our response

+Next, we are going to add a block to our Workflow that crops the objects that our first model detects.

-Finally, we are going to add an output to our response which includes the object that we cropped, alongside the outputs of both our detection model and our OCR model.

+

-

+## Step 4: Classify dog breeds with second stage model

-### Run the Workflow

+We are then going to add a classification model that runs on each crop to classify its content. We will use

+Roboflow Universe model `dog-breed-xpaq6/1`. Please make sure that in the block configuration, the `Image` property

+points to the `crops` output of the Dynamic Crop block.

-Selecting the Run Workflow button generates the code snippets to then deploy your Workflow via the Roboflow Hosted API, locally on images via the Inference Server, and locally on video streams via the Inference Pipeline.

-

+

-You now have a workflow you can run on your own hardware!

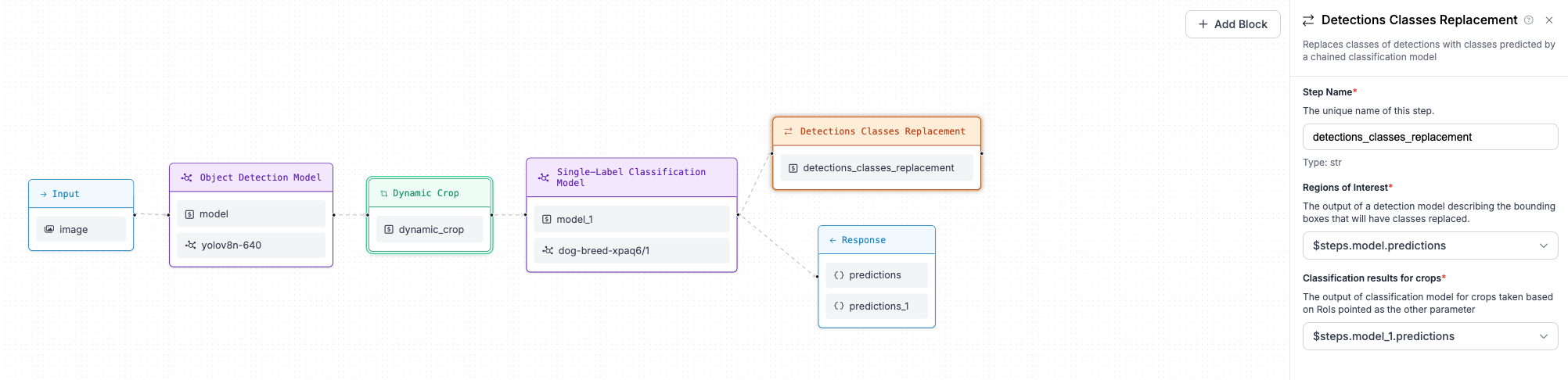

+## Step 5: Replace Bounding Box classes with classification model predictions

-## Example (Code, Advanced)

+When each crop is classified, we would like to assign the class predicted for each crop (dog breed) as a class

+of the dog bounding boxes from the object detection model . To do this we use Detections Classes Replacement block,

+which accepts a reference to predictions of a object detection model, as well as a reference to the classification

+results on the crops.

-Workflows allow you to define multi-step processes that run one or more models and return a result based on the output of the models.

+

-You can create and deploy workflows in the cloud or in Inference.

-To create an advanced workflow for use with Inference, you need to define a specification. A specification is a JSON document that states:

+## Step 6: Visualise predictions

-1. The version of workflows you are using.

-2. The expected inputs.

-3. The steps the workflow should run (i.e. models to run, filters to apply, etc.).

-4. The expected output format.

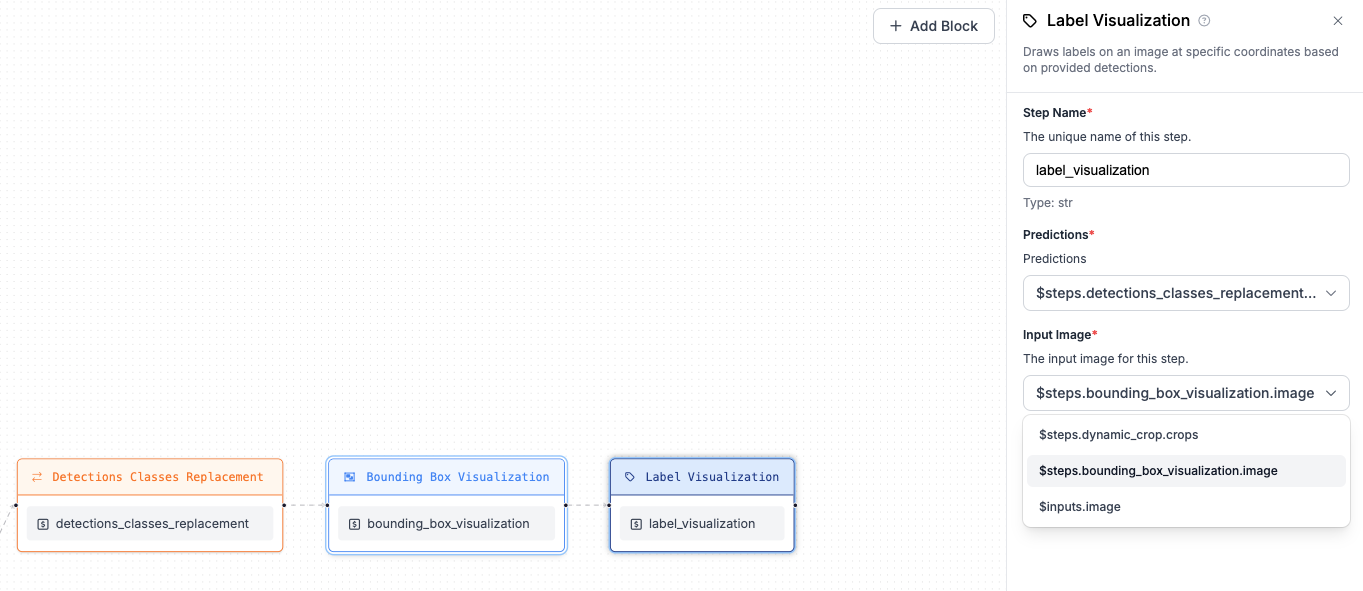

+As a final step of the workflow, we would like to visualize our predictions. We will use two

+visualization blocks: Bounding Box Visualization and Label Visualization chained together.

+At first, add Bounding Box Visualization referring to `$inputs.image` for the Image property (that's the

+image sent as your input to workflow), the second step (Label Visualization) however, should point to

+the output of Bounding Box Visualization step. Both visualization steps should refer to predictions

+from the Detections Classes Replacement step.

-In this guide, we walk through how to create a basic workflow that completes three steps:

+

-1. Run a model to detect objects in an image.

-2. Crops each region.

-3. Runs OCR on each region.

+## Step 7: Construct output

+You have everything ready to construct your workflow output. You can use any intermediate step output that you

+need, but in this example we will only select bounding boxes with replaced classes (output from Detections

+Classes Replacement step) and visualisation (output from Label Visualization step).

-You can use the guidance below as a template to learn the structure of workflows, or verbatim to create your own detect-then-OCR workflows.

-## Step #1: Define an Input

+## Step 8: Running the workflow

+Now your workflow, is ready. You can click the `Save` button and move to the `Run Preview` panel.

-Workflows require a specification to run. A specification takes the follwoing form:

+We will run our workflow against the following example image `https://media.roboflow.com/inference/dog.jpeg`.

+Here are the results

-```python

-SPECIFICATION = {

- "specification": {

- "version": "1.0",

- "inputs": [],

- "steps": [],

- "outputs": []

- }

-}

-```

+

-Within this structure, we need to define our:

+Clicking on the `Show Visual` button you will find results of our visualization efforts.

+

-1. Model inputs

-2. The steps to run

-3. The expected output

-First, let's define our inputs.

+## Different ways of running your workflow

+Your workflow is now saved on the Roboflow Platform. This means you can run it in multiple different ways, including:

-For this workflow, we will specify an image input:

+- HTTP request to Roboflow Hosted API

-```json

-"steps": [

- { "type": "InferenceImage", "name": "image" }, # definition of input image

-]

-```

+- HTTP request to your local instance of `inference server`

-## Step #2: Define Processing Steps

+- on video

-Next, we need to define our processing steps. For this guide, we want to:

+To see code snippets, click the `Deploy Workflow` button:

+

-1. Run a model to detect license plates.

-2. Crop each license plate.

-3. Run OCR on each license plate.

+## Workflow definition for quick reproduction

-We can define these steps as follows:

+To make it easier to reproduce the workflow, below you can find a workflow definition you can copy-paste to UI editor.

-```json

-"steps": [

+??? Tip "Workflow definition"

+

+ ```json

{

- "type": "ObjectDetectionModel", # definition of object detection model

- "name": "plates_detector",

- "image": "$inputs.image", # linking input image into detection model

- "model_id": "vehicle-registration-plates-trudk/2", # pointing model to be used

- },

+ "version": "1.0",

+ "inputs": [

{

- "type": "DetectionOffset", # DocTR model usually works better if there is slight padding around text to be detected - hence we are offseting predictions

- "name": "offset",

- "predictions": "$steps.plates_detector.predictions", # reference to the object detection model output

- "offset_x": 200, # value of offset

- "offset_y": 40, # value of offset

- },

- {

- "type": "Crop", # we would like to run OCR against each and every plate detected - hece we are cropping inputr image using offseted predictions

- "name": "cropping",

- "image": "$inputs.image", # we need to point image to crop

- "detections": "$steps.offset.predictions", # we need to point detections that will be used to crop image (in this case - we use offseted prediction)

- },

- {

- "type": "OCRModel", # we define OCR model

- "name": "step_ocr",

- "image": "$steps.cropping.crops", # OCR model as an input takes a reference to crops that were created based on detections

- },

-],

-```

-

-## Step #3: Define an Output

-

-Finally, we need to define the output for our workflow:

-

-```json

-"outputs": [

- { "type": "JsonField", "name": "predictions", "selector": "$steps.plates_detector.predictions" }, # output with object detection model predictions

- { "type": "JsonField", "name": "image", "selector": "$steps.plates_detector.image" }, # output with image metadata - required by `supervision`

- { "type": "JsonField", "name": "recognised_plates", "selector": "$steps.step_ocr.result" }, # field that will retrieve OCR result

- { "type": "JsonField", "name": "crops", "selector": "$steps.cropping.crops" }, # crops that were made based on plates detections - used here just to ease visualisation

-]

-```

-

-## Step #4: Run Your Workflow

-

-Now that we have our specification, we can run our workflow using the Inference SDK.

-

-=== "Run Locally with Inference"

-

- Use `inference_cli` to start server

-

- ```bash

- inference server start

- ```

-

- ```python

- from inference_sdk import InferenceHTTPClient, VisualisationResponseFormat, InferenceConfiguration

- import supervision as sv

- import cv2

- from matplotlib import pyplot as plt

-

- client = InferenceHTTPClient(

- api_url="http://127.0.0.1:9001",

- api_key="YOUR_API_KEY"

- )

-

- client.configure(

- InferenceConfiguration(output_visualisation_format=VisualisationResponseFormat.NUMPY)

- )

-

- license_plate_image_1 = cv2.imread("./images/license_plate_1.jpg")

-

- license_plate_result_1 = client.infer_from_workflow(

- specification=READING_PLATES_SPECIFICATION["specification"],

- images={"image": license_plate_image_1},

- )

-

- plt.title(f"Recognised plate: {license_plate_result_1['recognised_plates']}")

- plt.imshow(license_plate_result_1["crops"][0]["value"][:, :, ::-1])

- plt.show()

- ```

-

- Here are the results:

-

-

-

-=== "Run in the Roboflow Cloud"

-

- ```python

- from inference_sdk import InferenceHTTPClient

-

- client = InferenceHTTPClient(

- api_url="https://detect.roboflow.com",

- api_key="YOUR_API_KEY"

- )

-

- client.configure(

- InferenceConfiguration(output_visualisation_format=VisualisationResponseFormat.NUMPY)

- )

-

- license_plate_image_1 = cv2.imread("./images/license_plate_1.jpg")

-

- license_plate_result_1 = client.infer_from_workflow(

- specification=READING_PLATES_SPECIFICATION["specification"],

- images={"image": license_plate_image_1},

- )

-

- plt.title(f"Recognised plate: {license_plate_result_1['recognised_plates']}")

- plt.imshow(license_plate_result_1["crops"][0]["value"][:, :, ::-1])

- plt.show()

+ "type": "InferenceImage",

+ "name": "image"

+ }

+ ],

+ "steps": [

+ {

+ "type": "roboflow_core/roboflow_object_detection_model@v1",

+ "name": "model",

+ "images": "$inputs.image",

+ "model_id": "yolov8n-640"

+ },

+ {

+ "type": "roboflow_core/dynamic_crop@v1",

+ "name": "dynamic_crop",

+ "images": "$inputs.image",

+ "predictions": "$steps.model.predictions"

+ },

+ {

+ "type": "roboflow_core/roboflow_classification_model@v1",

+ "name": "model_1",

+ "images": "$steps.dynamic_crop.crops",

+ "model_id": "dog-breed-xpaq6/1"

+ },

+ {

+ "type": "roboflow_core/detections_classes_replacement@v1",

+ "name": "detections_classes_replacement",

+ "object_detection_predictions": "$steps.model.predictions",

+ "classification_predictions": "$steps.model_1.predictions"

+ },

+ {

+ "type": "roboflow_core/bounding_box_visualization@v1",

+ "name": "bounding_box_visualization",

+ "predictions": "$steps.detections_classes_replacement.predictions",

+ "image": "$inputs.image"

+ },

+ {

+ "type": "roboflow_core/label_visualization@v1",

+ "name": "label_visualization",

+ "predictions": "$steps.detections_classes_replacement.predictions",

+ "image": "$steps.bounding_box_visualization.image"

+ }

+ ],

+ "outputs": [

+ {

+ "type": "JsonField",

+ "name": "detections",

+ "coordinates_system": "own",

+ "selector": "$steps.detections_classes_replacement.predictions"

+ },

+ {

+ "type": "JsonField",

+ "name": "visualisation",

+ "coordinates_system": "own",

+ "selector": "$steps.label_visualization.image"

+ }

+ ]

+ }

```

- Here are the results:

-

-

## Next Steps

Now that you have created and run your first workflow, you can explore our other supported blocks and create a more complex workflow.

Refer to our [Supported Blocks](/workflows/blocks/) documentation to learn more about what blocks are supported.

+We also recommend reading the [Understanding workflows](/workflows/understanding/) page.

diff --git a/docs/workflows/create_workflow_block.md b/docs/workflows/create_workflow_block.md

new file mode 100644

index 0000000000..ae2dab937f

--- /dev/null

+++ b/docs/workflows/create_workflow_block.md

@@ -0,0 +1,1913 @@

+# Creating Workflow blocks

+

+Workflows blocks development requires an understanding of the

+Workflow Ecosystem. Before diving deeper into the details, let's summarize the

+required knowledge:

+

+Understanding of [Workflow execution](/workflows/workflow_execution/), in particular:

+

+* what is the relation of Workflow blocks and steps in Workflow definition

+

+* how Workflow blocks and their manifests are used by [Workflows Compiler](/workflows/workflows_compiler/)

+

+* what is the `dimensionality level` of batch-oriented data passing through Workflow

+

+* how [Execution Engine](/workflows/workflows_execution_engine/) interacts with step, regarding

+its inputs and outputs

+

+* what is the nature and role of [Workflow `kinds`](/workflows/kinds/)

+

+* understanding how [`pydantic`](https://docs.pydantic.dev/latest/) works

+

+## Prototypes

+

+To create a Workflow block you need some amount of imports from the core of Workflows library.

+Here is the list of imports that you may find useful while creating a block:

+

+```python

+from inference.core.workflows.execution_engine.entities.base import (

+ Batch, # batches of data will come in Batch[X] containers

+ OutputDefinition, # class used to declare outputs in your manifest

+ WorkflowImageData, # internal representation of image

+ # - use whenever your input kind is image

+)

+

+from inference.core.workflows.prototypes.block import (

+ BlockResult, # type alias for result of `run(...)` method

+ WorkflowBlock, # base class for your block

+ WorkflowBlockManifest, # base class for block manifest

+)

+

+from inference.core.workflows.execution_engine.entities.types import *

+# module with `kinds` from the core library

+```

+

+The most important are:

+

+* `WorkflowBlock` - base class for your block

+

+* `WorkflowBlockManifest` - base class for block manifest

+

+## Block manifest

+

+A manifest is a crucial component of a Workflow block that defines a prototype

+for step declaration that can be placed in a Workflow definition to use the block.

+In particular, it:

+

+* **Uses `pydantic` to power syntax parsing of Workflows definitions:**

+It inherits from [`pydantic BaseModel`](https://docs.pydantic.dev/latest/api/base_model/) features to parse and

+validate Workflow definitions. This schema can also be automatically exported to a format compatible with the

+Workflows UI, thanks to `pydantic's` integration with the OpenAPI standard.

+

+* **Defines Data Bindings:** It specifies which fields in the manifest are selectors for data flowing through

+the workflow during execution and indicates their kinds.

+

+* **Describes Block Outputs:** It outlines the outputs that the block will produce.

+

+* **Specifies Dimensionality:** It details the properties related to input and output dimensionality.

+

+* **Indicates Batch Inputs and Empty Values:** It informs the Execution Engine whether the step accepts batch

+inputs and empty values.

+

+* **Ensures Compatibility:** It dictates the compatibility with different Execution Engine versions to maintain

+stability. For more details, see [versioning](/workflows/versioning/).

+

+### Scaffolding for manifest

+

+To understand how manifests work, let's define one step-by-step. The example block that we build here will be

+calculating images similarity. We start from imports and class scaffolding:

+

+```python

+from typing import Literal

+from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+)

+

+class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+```

+

+This is the minimal representation of a manifest. It defines two special fields that are important for

+Compiler and Execution engine:

+

+* `type` - required to parse syntax of Workflows definitions based on dynamic pool of blocks - this is the

+[`pydantic` type discriminator](https://docs.pydantic.dev/latest/concepts/unions/#discriminated-unions) that lets the Compiler understand which block manifest is to be verified when

+parsing specific steps in a Workflow definition

+

+* `name` - this property will be used to give the step a unique name and let other steps selects it via selectors

+

+### Adding batch-oriented inputs

+

+We want our step to take two batch-oriented inputs with images to be compared - so effectively

+we will be creating SIMD block.

+

+??? example "Adding batch-oriented inputs"

+

+ Let's see how to add definitions of those inputs to manifest:

+

+ ```{ .py linenums="1" hl_lines="2 6 7 8 9 17 18 19 20 21 22"}

+ from typing import Literal, Union

+ from pydantic import Field

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ # all properties apart from `type` and `name` are treated as either

+ # definitions of batch-oriented data to be processed by block or its

+ # parameters that influence execution of steps created based on block

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ ```

+

+ * in the lines `2-9`, we've added a couple of imports to ensure that we have everything needed

+

+ * line `17` defines `image_1` parameter - as manifest is prototype for Workflow Definition,

+ the only way to tell about image to be used by step is to provide selector - we have

+ two specialised types in core library that can be used - `WorkflowImageSelector` and `StepOutputImageSelector`.

+ If you look deeper into codebase, you will discover those are type aliases - telling `pydantic`

+ to expect string matching `$inputs.{name}` and `$steps.{name}.*` patterns respectively, additionally providing

+ extra schema field metadata that tells Workflows ecosystem components that the `kind` of data behind selector is

+ [image](/workflows/kinds/batch_image/).

+

+ * denoting `pydantic` `Field(...)` attribute in the last parts of line `17` is optional, yet appreciated,

+ especially for blocks intended to cooperate with Workflows UI

+

+ * starting in line `20`, you can find definition of `image_2` parameter which is very similar to `image_1`.

+

+

+Such definition of manifest can handle the following step declaration in Workflow definition:

+

+```json

+{

+ "type": "my_plugin/images_similarity@v1",

+ "name": "my_step",

+ "image_1": "$inputs.my_image",

+ "image_2": "$steps.image_transformation.image"

+}

+```

+

+This definition will make the Compiler and Execution Engine:

+

+* select as a step prototype the block which declared manifest with type discriminator being

+`my_plugin/images_similarity@v1`

+

+* supply two parameters for the steps run method:

+

+ * `input_1` of type `WorkflowImageData` which will be filled with image submitted as Workflow execution input

+

+ * `imput_2` of type `WorkflowImageData` which will be generated at runtime, by another step called

+ `image_transformation`

+

+

+### Adding parameter to the manifest

+

+Let's now add the parameter that will influence step execution. The parameter is not assumed to be

+batch-oriented and will affect all batch elements passed to the step.

+

+??? example "Adding parameter to the manifest"

+

+ ```{ .py linenums="1" hl_lines="9 10 11 26 27 28 29 30 31 32"}

+ from typing import Literal, Union

+ from pydantic import Field

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ FloatZeroToOne,

+ WorkflowParameterSelector,

+ FLOAT_ZERO_TO_ONE_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ # all properties apart from `type` and `name` are treated as either

+ # definitions of batch-oriented data to be processed by block or its

+ # parameters that influence execution of steps created based on block

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ similarity_threshold: Union[

+ FloatZeroToOne,

+ WorkflowParameterSelector(kind=[FLOAT_ZERO_TO_ONE_KIND]),

+ ] = Field(

+ default=0.4,

+ description="Threshold to assume that images are similar",

+ )

+ ```

+

+ * line `9` imports `FloatZeroToOne` which is type alias providing validation

+ for float values in range 0.0-1.0 - this is based on native `pydantic` mechanism and

+ everyone could create this type annotation locally in module hosting block

+

+ * line `10` imports function `WorkflowParameterSelector(...)` capable to dynamically create

+ `pydantic` type annotation for selector to workflow input parameter (matching format `$inputs.param_name`),

+ declaring union of kinds compatible with the field

+

+ * line `11` imports [`float_zero_to_one`](/workflows/kinds/float_zero_to_one) `kind` definition which will be used later

+

+ * in line `26` we start defining parameter called `similarity_threshold`. Manifest will accept

+ either float values (in range `[0.0-1.0]`) or selector to workflow input of `kind`

+ [`float_zero_to_one`](/workflows/kinds/float_zero_to_one). Please point out on how

+ function creating type annotation (`WorkflowParameterSelector(...)`) is used -

+ in particular, expected `kind` of data is passed as list of `kinds` - representing union

+ of expected data `kinds`.

+

+Such definition of manifest can handle the following step declaration in Workflow definition:

+

+```{ .json linenums="1" hl_lines="6"}

+{

+ "type": "my_plugin/images_similarity@v1",

+ "name": "my_step",

+ "image_1": "$inputs.my_image",

+ "image_2": "$steps.image_transformation.image",

+ "similarity_threshold": "$inputs.my_similarity_threshold"

+}

+```

+

+or alternatively:

+

+```{ .json linenums="1" hl_lines="6"}

+{

+ "type": "my_plugin/images_similarity@v1",

+ "name": "my_step",

+ "image_1": "$inputs.my_image",

+ "image_2": "$steps.image_transformation.image",

+ "similarity_threshold": "0.5"

+}

+```

+

+??? hint "LEARN MORE: Selecting step outputs"

+

+ Our siplified example showcased declaration of properties that accept selectors to

+ images produced by other steps via `StepOutputImageSelector`.

+

+ You can use function `StepOutputSelector(...)` creating field annotations dynamically

+ to express the that block accepts batch-oriented outputs from other steps of specified

+ kinds

+

+ ```{ .py linenums="1" hl_lines="9 10 25"}

+ from typing import Literal, Union

+ from pydantic import Field

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ StepOutputSelector,

+ NUMPY_ARRAY_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ # all properties apart from `type` and `name` are treated as either

+ # definitions of batch-oriented data to be processed by block or its

+ # parameters that influence execution of steps created based on block

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ example: StepOutputSelector(kind=[NUMPY_ARRAY_KIND])

+ ```

+

+### Declaring block outputs

+

+Our manifest is ready regarding properties that can be declared in Workflow definitions,

+but we still need to provide additional information for the Execution Engine to successfully

+run the block.

+

+??? example "Declaring block outputs"

+

+ Minimal set of information required is outputs description. Additionally,

+ to increase block stability, we advise to provide information about execution engine

+ compatibility.

+

+ ```{ .py linenums="1" hl_lines="1 5 13 33-40 42-44"}

+ from typing import Literal, Union, List, Optional

+ from pydantic import Field

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ OutputDefinition,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ FloatZeroToOne,

+ WorkflowParameterSelector,

+ FLOAT_ZERO_TO_ONE_KIND,

+ BOOLEAN_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ similarity_threshold: Union[

+ FloatZeroToOne,

+ WorkflowParameterSelector(kind=[FLOAT_ZERO_TO_ONE_KIND]),

+ ] = Field(

+ default=0.4,

+ description="Threshold to assume that images are similar",

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [

+ OutputDefinition(

+ name="images_match",

+ kind=[BOOLEAN_KIND],

+ )

+ ]

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+ ```

+

+ * line `1` contains additional imports from `typing`

+

+ * line `5` imports class that is used to describe step outputs

+

+ * line `13` imports [`boolean`](/workflows/kinds/boolean) `kind` to be used

+ in outputs definitions

+

+ * lines `33-40` declare class method to specify outputs from the block -

+ each entry in list declare one return property for each batch element and its `kind`.

+ Our block will return boolean flag `images_match` for each pair of images.

+

+ * lines `42-44` declare compatibility of the block with Execution Engine -

+ see [versioning page](/workflows/versioning/) for more details

+

+As a result of those changes:

+

+* Execution Engine would understand that steps created based on this block

+are supposed to deliver specified outputs and other steps can refer to those outputs

+in their inputs

+

+* the blocks loading mechanism will not load the block given that Execution Engine is not in version `v1`

+

+??? hint "LEARN MORE: Dynamic outputs"

+

+ Some blocks may not be able to arbitrailry define their outputs using

+ classmethod - regardless of the content of step manifest that is available after

+ parsing. To support this we introduced the following convention:

+

+ * classmethod `describe_outputs(...)` shall return list with one element of

+ name `*` and kind `*` (aka `WILDCARD_KIND`)

+

+ * additionally, block manifest should implement instance method `get_actual_outputs(...)`

+ that provides list of actual outputs that can be generated based on filled manifest data

+

+ ```{ .py linenums="1" hl_lines="14 35-42 44-49"}

+ from typing import Literal, Union, List, Optional

+ from pydantic import Field

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ OutputDefinition,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ FloatZeroToOne,

+ WorkflowParameterSelector,

+ FLOAT_ZERO_TO_ONE_KIND,

+ BOOLEAN_KIND,

+ WILDCARD_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ similarity_threshold: Union[

+ FloatZeroToOne,

+ WorkflowParameterSelector(kind=[FLOAT_ZERO_TO_ONE_KIND]),

+ ] = Field(

+ default=0.4,

+ description="Threshold to assume that images are similar",

+ )

+ outputs: List[str]

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [

+ OutputDefinition(

+ name="*",

+ kind=[WILDCARD_KIND],

+ ),

+ ]

+

+ def get_actual_outputs(self) -> List[OutputDefinition]:

+ # here you have access to `self`:

+ return [

+ OutputDefinition(name=e, kind=[BOOLEAN_KIND])

+ for e in self.outputs

+ ]

+ ```

+

+

+## Definition of block class

+

+At this stage, the manifest of our simple block is ready, we will continue

+with our example. You can check out the [advanced topics](#advanced-topics) section for more details that would just

+be a distractions now.

+

+### Base implementation

+

+Having the manifest ready, we can prepare baseline implementation of the

+block.

+

+??? example "Block scaffolding"

+

+ ```{ .py linenums="1" hl_lines="1 5 6 8-11 56-68"}

+ from typing import Literal, Union, List, Optional, Type

+ from pydantic import Field

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ WorkflowBlock,

+ BlockResult,

+ )

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ WorkflowImageData,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ FloatZeroToOne,

+ WorkflowParameterSelector,

+ FLOAT_ZERO_TO_ONE_KIND,

+ BOOLEAN_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ similarity_threshold: Union[

+ FloatZeroToOne,

+ WorkflowParameterSelector(kind=[FLOAT_ZERO_TO_ONE_KIND]),

+ ] = Field(

+ default=0.4,

+ description="Threshold to assume that images are similar",

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [

+ OutputDefinition(

+ name="images_match",

+ kind=[BOOLEAN_KIND],

+ ),

+ ]

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+

+

+ class ImagesSimilarityBlock(WorkflowBlock):

+

+ @classmethod

+ def get_manifest(cls) -> Type[WorkflowBlockManifest]:

+ return ImagesSimilarityManifest

+

+ def run(

+ self,

+ image_1: WorkflowImageData,

+ image_2: WorkflowImageData,

+ similarity_threshold: float,

+ ) -> BlockResult:

+ pass

+ ```

+

+ * lines `1`, `5-6` and `8-9` added changes into import surtucture to

+ provide additional symbols required to properly define block class and all

+ of its methods signatures

+

+ * line `59` defines class method `get_manifest(...)` to simply return

+ the manifest class we cretaed earlier

+

+ * lines `62-68` define `run(...)` function, which Execution Engine

+ will invoke with data to get desired results

+

+### Providing implementation for block logic

+

+Let's now add an example implementation of the `run(...)` method to our block, such that

+it can produce meaningful results.

+

+!!! Note

+

+ The Content of this section is supposed to provide examples on how to interact

+ with the Workflow ecosystem as block creator, rather than providing robust

+ implementation of the block.

+

+??? example "Implementation of `run(...)` method"

+

+ ```{ .py linenums="1" hl_lines="3 56-58 70-81"}

+ from typing import Literal, Union, List, Optional, Type

+ from pydantic import Field

+ import cv2

+

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ WorkflowBlock,

+ BlockResult,

+ )

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ WorkflowImageData,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ FloatZeroToOne,

+ WorkflowParameterSelector,

+ FLOAT_ZERO_TO_ONE_KIND,

+ BOOLEAN_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ similarity_threshold: Union[

+ FloatZeroToOne,

+ WorkflowParameterSelector(kind=[FLOAT_ZERO_TO_ONE_KIND]),

+ ] = Field(

+ default=0.4,

+ description="Threshold to assume that images are similar",

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [

+ OutputDefinition(

+ name="images_match",

+ kind=[BOOLEAN_KIND],

+ ),

+ ]

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+

+

+ class ImagesSimilarityBlock(WorkflowBlock):

+

+ def __init__(self):

+ self._sift = cv2.SIFT_create()

+ self._matcher = cv2.FlannBasedMatcher(dict(algorithm=1, trees=5), dict(checks=50))

+

+ @classmethod

+ def get_manifest(cls) -> Type[WorkflowBlockManifest]:

+ return ImagesSimilarityManifest

+

+ def run(

+ self,

+ image_1: WorkflowImageData,

+ image_2: WorkflowImageData,

+ similarity_threshold: float,

+ ) -> BlockResult:

+ image_1_gray = cv2.cvtColor(image_1.numpy_image, cv2.COLOR_BGR2GRAY)

+ image_2_gray = cv2.cvtColor(image_2.numpy_image, cv2.COLOR_BGR2GRAY)

+ kp_1, des_1 = self._sift.detectAndCompute(image_1_gray, None)

+ kp_2, des_2 = self._sift.detectAndCompute(image_2_gray, None)

+ matches = self._matcher.knnMatch(des_1, des_2, k=2)

+ good_matches = []

+ for m, n in matches:

+ if m.distance < similarity_threshold * n.distance:

+ good_matches.append(m)

+ return {

+ "images_match": len(good_matches) > 0,

+ }

+ ```

+

+ * in line `3` we import OpenCV

+

+ * lines `56-58` defines block constructor, thanks to this - state of block

+ is initialised once and live through consecutive invocation of `run(...)` method - for

+ instance when Execution Engine runs on consecutive frames of video

+

+ * lines `70-81` provide implementation of block functionality - the details are trully not

+ important regarding Workflows ecosystem, but there are few details you should focus:

+

+ * lines `70` and `71` make use of `WorkflowImageData` abstraction, showcasing how

+ `numpy_image` property can be used to get `np.ndarray` from internal representation of images

+ in Workflows. We advise to expole remaining properties of `WorkflowImageData` to discover more.

+

+ * result of workflow block execution, declared in lines `79-81` is in our case just a dictionary

+ **with the keys being the names of outputs declared in manifest**, in line `44`. Be sure to provide all

+ declared outputs - otherwise Execution Engine will raise error.

+

+You may ask yourself how it is possible that implemented block accepts batch-oriented workflow input, but do not

+operate on batches directly. This is due to the fact that the default block behaviour is to run one-by-one against

+all elements of input batches. We will show how to change that in [advanced topics](#advanced-topics) section.

+

+!!! note

+

+ One important note: blocks, like all other classes, have constructors that may initialize a state. This state can

+ persist across multiple Workflow runs when using the same instance of the Execution Engine. If the state management

+ needs to be aware of which batch element it processes (e.g., in object tracking scenarios), the block creator

+ should use dedicated batch-oriented inputs. These inputs, provide relevant metadatadata — like the

+ `WorkflowVideoMetadata` input, which is crucial for tracking use cases and can be used along with `WorkflowImage`

+ input in a block implementing tracker.

+

+ The ecosystem is evolving, and new input types will be introduced over time. If a specific input type needed for

+ a use case is not available, an alternative is to design the block to process entire input batches. This way,

+ you can rely on the Batch container's indices property, which provides an index for each batch element, allowing

+ you to maintain the correct order of processing.

+

+

+## Exposing block in `plugin`

+

+Now, your block is ready to be used, but if you declared step using it in your Workflow definition you

+would see an error. This is because no plugin exports the block you just created. Details of blocks bundling

+will be covered in [separate page](/workflows/blocks_bundling/), but the remaining thing to do is to

+add block class into list returned from your plugins' `load_blocks(...)` function:

+

+```python

+# __init__.py of your plugin

+

+from my_plugin.images_similarity.v1 import ImagesSimilarityBlock

+# this is example import! requires adjustment

+

+def load_blocks():

+ return [ImagesSimilarityBlock]

+```

+

+

+## Advanced topics

+

+### Blocks processing batches of inputs

+

+Sometimes, performance of your block may benefit if all input data is processed at once as batch. This may

+happen for models running on GPU. Such mode of operation is supported for Workflows blocks - here is the example

+on how to use it for your block.

+

+??? example "Implementation of blocks accepting batches"

+

+ ```{ .py linenums="1" hl_lines="13 41-43 71-72 75-78 86-87"}

+ from typing import Literal, Union, List, Optional, Type

+ from pydantic import Field

+ import cv2

+

+ from inference.core.workflows.prototypes.block import (

+ WorkflowBlockManifest,

+ WorkflowBlock,

+ BlockResult,

+ )

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ WorkflowImageData,

+ Batch,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputImageSelector,

+ WorkflowImageSelector,

+ FloatZeroToOne,

+ WorkflowParameterSelector,

+ FLOAT_ZERO_TO_ONE_KIND,

+ BOOLEAN_KIND,

+ )

+

+ class ImagesSimilarityManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/images_similarity@v1"]

+ name: str

+ image_1: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="First image to calculate similarity",

+ )

+ image_2: Union[WorkflowImageSelector, StepOutputImageSelector] = Field(

+ description="Second image to calculate similarity",

+ )

+ similarity_threshold: Union[

+ FloatZeroToOne,

+ WorkflowParameterSelector(kind=[FLOAT_ZERO_TO_ONE_KIND]),

+ ] = Field(

+ default=0.4,

+ description="Threshold to assume that images are similar",

+ )

+

+ @classmethod

+ def accepts_batch_input(cls) -> bool:

+ return True

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [

+ OutputDefinition(

+ name="images_match",

+ kind=[BOOLEAN_KIND],

+ ),

+ ]

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+

+

+ class ImagesSimilarityBlock(WorkflowBlock):

+

+ def __init__(self):

+ self._sift = cv2.SIFT_create()

+ self._matcher = cv2.FlannBasedMatcher(dict(algorithm=1, trees=5), dict(checks=50))

+

+ @classmethod

+ def get_manifest(cls) -> Type[WorkflowBlockManifest]:

+ return ImagesSimilarityManifest

+

+ def run(

+ self,

+ image_1: Batch[WorkflowImageData],

+ image_2: Batch[WorkflowImageData],

+ similarity_threshold: float,

+ ) -> BlockResult:

+ results = []

+ for image_1_element, image_2_element in zip(image_1, image_2):

+ image_1_gray = cv2.cvtColor(image_1_element.numpy_image, cv2.COLOR_BGR2GRAY)

+ image_2_gray = cv2.cvtColor(image_2_element.numpy_image, cv2.COLOR_BGR2GRAY)

+ kp_1, des_1 = self._sift.detectAndCompute(image_1_gray, None)

+ kp_2, des_2 = self._sift.detectAndCompute(image_2_gray, None)

+ matches = self._matcher.knnMatch(des_1, des_2, k=2)

+ good_matches = []

+ for m, n in matches:

+ if m.distance < similarity_threshold * n.distance:

+ good_matches.append(m)

+ results.append({"images_match": len(good_matches) > 0})

+ return results

+ ```

+

+ * line `13` imports `Batch` from core of workflows library - this class represent container which is

+ veri similar to list (but read-only) to keep batch elements

+

+ * lines `41-43` define class method that changes default behaviour of the block and make it capable

+ to process batches

+

+ * changes introduced above made the signature of `run(...)` method to change, now `image_1` and `image_2`

+ are not instances of `WorkflowImageData`, but rather batches of elements of this type

+

+ * lines `75-78`, `86-87` present changes that needed to be introduced to run processing across all batch

+ elements - showcasing how to iterate over batch elements if needed

+

+ * it is important to note how outputs are constructed in line `86` - each element of batch will be given

+ its entry in the list which is returned from `run(...)` method. Order must be aligned with order of batch

+ elements. Each output dictionary must provide all keys declared in block outputs.

+

+### Implementation of flow-control block

+

+Flow-control blocks differs quite substantially from other blocks that just process the data. Here we will show

+how to create a flow control block, but first - a little bit of theory:

+

+* flow-control block is the block that declares compatibility with step selectors in their manifest (selector to step

+is defined as `$steps.{step_name}` - similar to step output selector, but without specification of output name)

+

+* flow-control blocks cannot register outputs, they are meant to return `FlowControl` objects

+

+* `FlowControl` object specify next steps (from selectors provided in step manifest) that for given

+batch element (SIMD flow-control) or whole workflow execution (non-SIMD flow-control) should pick up next

+

+??? example "Implementation of flow-control - SIMD block"

+

+ Example provides and comments out implementation of random continue block

+

+ ```{ .py linenums="1" hl_lines="10 14 26 28-31 55-56"}

+ from typing import List, Literal, Optional, Type, Union

+ import random

+

+ from pydantic import Field

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ WorkflowImageData,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepSelector,

+ WorkflowImageSelector,

+ StepOutputImageSelector,

+ )

+ from inference.core.workflows.execution_engine.v1.entities import FlowControl

+ from inference.core.workflows.prototypes.block import (

+ BlockResult,

+ WorkflowBlock,

+ WorkflowBlockManifest,

+ )

+

+

+

+ class BlockManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/random_continue@v1"]

+ name: str

+ image: Union[WorkflowImageSelector, StepOutputImageSelector] = ImageInputField

+ probability: float

+ next_steps: List[StepSelector] = Field(

+ description="Reference to step which shall be executed if expression evaluates to true",

+ examples=[["$steps.on_true"]],

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return []

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+

+

+ class RandomContinueBlockV1(WorkflowBlock):

+

+ @classmethod

+ def get_manifest(cls) -> Type[WorkflowBlockManifest]:

+ return BlockManifest

+

+ def run(

+ self,

+ image: WorkflowImageData,

+ probability: float,

+ next_steps: List[str],

+ ) -> BlockResult:

+ if not next_steps or random.random() > probability:

+ return FlowControl()

+ return FlowControl(context=next_steps)

+ ```

+

+ * line `10` imports type annotation for step selector which will be used to

+ notify Execution Engine that the block controls the flow

+

+ * line `14` imports `FlowControl` class which is the only viable response from

+ flow-control block

+

+ * line `26` specifies `image` which is batch-oriented input making the block SIMD -

+ which means that for each element of images batch, block will make random choice on

+ flow-control - if not that input block would operate in non-SIMD mode

+

+ * line `28` defines list of step selectors **which effectively turns the block into flow-control one**

+

+ * lines `55` and `56` show how to construct output - `FlowControl` object accept context being `None`, `string` or

+ `list of strings` - `None` represent flow termination for the batch element, strings are expected to be selectors

+ for next steps, passed in input.

+

+??? example "Implementation of flow-control non-SIMD block"

+

+ Example provides and comments out implementation of random continue block

+

+ ```{ .py linenums="1" hl_lines="9 11 24-27 50-51"}

+ from typing import List, Literal, Optional, Type, Union

+ import random

+

+ from pydantic import Field

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepSelector,

+ )

+ from inference.core.workflows.execution_engine.v1.entities import FlowControl

+ from inference.core.workflows.prototypes.block import (

+ BlockResult,

+ WorkflowBlock,

+ WorkflowBlockManifest,

+ )

+

+

+

+ class BlockManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/random_continue@v1"]

+ name: str

+ probability: float

+ next_steps: List[StepSelector] = Field(

+ description="Reference to step which shall be executed if expression evaluates to true",

+ examples=[["$steps.on_true"]],

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return []

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+

+

+ class RandomContinueBlockV1(WorkflowBlock):

+

+ @classmethod

+ def get_manifest(cls) -> Type[WorkflowBlockManifest]:

+ return BlockManifest

+

+ def run(

+ self,

+ probability: float,

+ next_steps: List[str],

+ ) -> BlockResult:

+ if not next_steps or random.random() > probability:

+ return FlowControl()

+ return FlowControl(context=next_steps)

+ ```

+

+ * line `9` imports type annotation for step selector which will be used to

+ notify Execution Engine that the block controls the flow

+

+ * line `11` imports `FlowControl` class which is the only viable response from

+ flow-control block

+

+ * lines `24-27` defines list of step selectors **which effectively turns the block into flow-control one**

+

+ * lines `50` and `51` show how to construct output - `FlowControl` object accept context being `None`, `string` or

+ `list of strings` - `None` represent flow termination for the batch element, strings are expected to be selectors

+ for next steps, passed in input.

+

+### Nested selectors

+

+Some block will require list of selectors or dictionary of selectors to be

+provided in block manifest field. Version `v1` of Execution Engine supports only

+one level of nesting - so list of lists of selectors or dictionary with list of selectors

+will not be recognised properly.

+

+Practical use cases showcasing usage of nested selectors are presented below.

+

+#### Fusion of predictions from variable number of models

+

+Let's assume that you want to build a block to get majority vote on multiple classifiers predictions - then you would

+like your run method to look like that:

+

+```python

+# pseud-code here

+def run(self, predictions: List[dict]) -> BlockResult:

+ predicted_classes = [p["class"] for p in predictions]

+ counts = Counter(predicted_classes)

+ return {"top_class": counts.most_common(1)[0]}

+```

+

+??? example "Nested selectors - models ensemble"

+

+ ```{ .py linenums="1" hl_lines="23-26 50"}

+ from typing import List, Literal, Optional, Type

+

+ from pydantic import Field

+ import supervision as sv

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputSelector,

+ BATCH_OF_OBJECT_DETECTION_PREDICTION_KIND,

+ )

+ from inference.core.workflows.prototypes.block import (

+ BlockResult,

+ WorkflowBlock,

+ WorkflowBlockManifest,

+ )

+

+

+

+ class BlockManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/fusion_of_predictions@v1"]

+ name: str

+ predictions: List[StepOutputSelector(kind=[BATCH_OF_OBJECT_DETECTION_PREDICTION_KIND])] = Field(

+ description="Selectors to step outputs",

+ examples=[["$steps.model_1.predictions", "$steps.model_2.predictions"]],

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [

+ OutputDefinition(

+ name="predictions",

+ kind=[BATCH_OF_OBJECT_DETECTION_PREDICTION_KIND],

+ )

+ ]

+

+ @classmethod

+ def get_execution_engine_compatibility(cls) -> Optional[str]:

+ return ">=1.0.0,<2.0.0"

+

+

+ class FusionBlockV1(WorkflowBlock):

+

+ @classmethod

+ def get_manifest(cls) -> Type[WorkflowBlockManifest]:

+ return BlockManifest

+

+ def run(

+ self,

+ predictions: List[sv.Detections],

+ ) -> BlockResult:

+ merged = sv.Detections.merge(predictions)

+ return {"predictions": merged}

+ ```

+

+ * lines `23-26` depict how to define manifest field capable of accepting

+ list of selectors

+

+ * line `50` shows what to expect as input to block's `run(...)` method -

+ list of objects which are representation of specific kind. If the block accepted

+ batches, the input type of `predictions` field would be `List[Batch[sv.Detections]`

+

+Such block is compatible with the following step declaration:

+

+```{ .json linenums="1" hl_lines="4-7"}

+{

+ "type": "my_plugin/fusion_of_predictions@v1",

+ "name": "my_step",

+ "predictions": [

+ "$steps.model_1.predictions",

+ "$steps.model_2.predictions"

+ ]

+}

+```

+

+#### Block with data transformations allowing dynamic parameters

+

+Occasionally, blocks may need to accept group of "named" selectors,

+which names and values are to be defined by creator of Workflow definition.

+In such cases, block manifest shall accept dictionary of selectors, where

+keys serve as names for those selectors.

+

+??? example "Nested selectors - named selectors"

+

+ ```{ .py linenums="1" hl_lines="23-26 47"}

+ from typing import List, Literal, Optional, Type, Any

+

+ from pydantic import Field

+ import supervision as sv

+ from inference.core.workflows.execution_engine.entities.base import (

+ OutputDefinition,

+ )

+ from inference.core.workflows.execution_engine.entities.types import (

+ StepOutputSelector,

+ WorkflowParameterSelector,

+ )

+ from inference.core.workflows.prototypes.block import (

+ BlockResult,

+ WorkflowBlock,

+ WorkflowBlockManifest,

+ )

+

+

+

+ class BlockManifest(WorkflowBlockManifest):

+ type: Literal["my_plugin/named_selectors_example@v1"]

+ name: str

+ data: Dict[str, StepOutputSelector(), WorkflowParameterSelector()] = Field(

+ description="Selectors to step outputs",

+ examples=[{"a": $steps.model_1.predictions", "b": "$Inputs.data"}],

+ )

+

+ @classmethod

+ def describe_outputs(cls) -> List[OutputDefinition]:

+ return [