Text generation is a type of natural language processing that uses computational linguistics and artificial intelligence to automatically produce text that can meet specific communicative needs.

This demo uses the following models:

- Generative Pre-trained Transformer 2 (GPT-2) model for text prediction.

- Generative Pre-trained Transformer Neo (GPT-Neo) model for text prediction.

- PersonaGPT model for conversation.

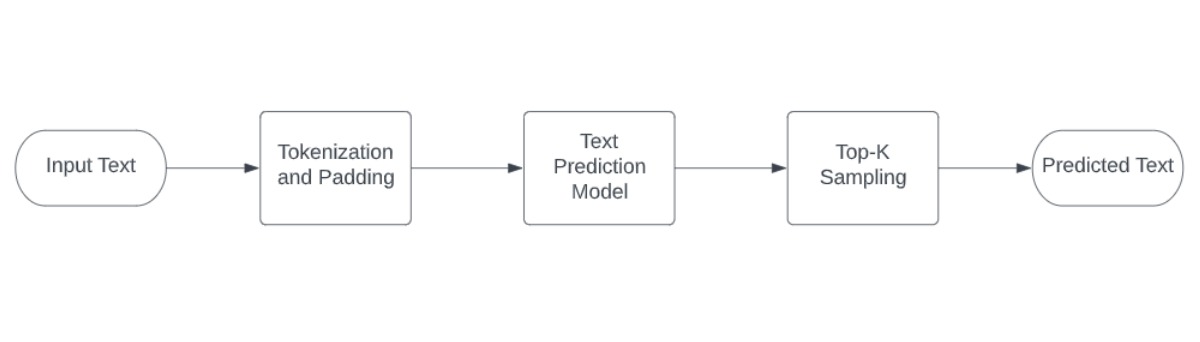

The complete text generation pipeline is shown below:

This notebook demonstrates how to generate text from user input. Given the beginning of a sentence or paragraph, the network generates additional text to complete the sequence. The process can be repeated as many times as the user likes, with the model responding to each input, which allows a conversation-like interaction.

The following images show an example of the input sequence and corresponding predicted sequence.

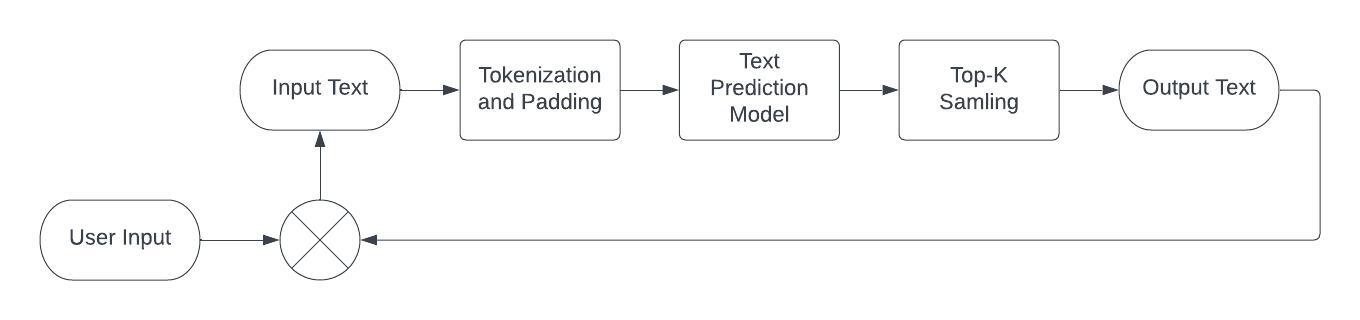
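The completion step described above can be sketched as a greedy autoregressive loop: pick the most likely next token, append it, and repeat until an end-of-sequence token or a length limit is reached. This is only an illustrative sketch, not the notebook's code. The `TOY_MODEL` lookup table, the `<eos>` marker, and the function names are assumptions standing in for a real model such as GPT-2 and its tokenizer.

```python
# Toy stand-in for a language model (hypothetical): maps the last token to
# candidate next tokens with scores. A real run would query GPT-2 or GPT-Neo.
TOY_MODEL = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"down": 0.9, "<eos>": 0.1},
    "down": {"<eos>": 1.0},
}


def next_token(tokens):
    """Return the highest-scoring next token (greedy choice)."""
    # Unknown tokens fall back to end-of-sequence so generation stops.
    candidates = TOY_MODEL.get(tokens[-1], {"<eos>": 1.0})
    return max(candidates, key=candidates.get)


def complete(prompt, max_new_tokens=10, eos="<eos>"):
    """Extend the prompt one token at a time until eos or the length limit."""
    tokens = prompt.lower().split()
    if not tokens:
        return ""
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token == eos:
            break
        tokens.append(token)
    return " ".join(tokens)


print(complete("The"))
```

Real models typically sample from the predicted distribution (for example with top-k or top-p filtering) rather than always taking the single best token, but the loop structure stays the same.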

The modified pipeline for conversation is shown below.

The following image shows an example of a conversation.
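As a rough code sketch of the conversation variant, the main difference from plain completion is that earlier turns are kept and fed back to the model with each new input. The example below assumes turns are joined with a separator token and that only the most recent turns are kept. The `EOS` string, `max_turns`, and the `echo_model` stub are hypothetical and stand in for PersonaGPT and its tokenizer.

```python
EOS = "<|eos|>"  # hypothetical turn separator; real tokenizers define their own


def build_prompt(history, max_turns=4):
    """Join the most recent turns into one model input, each ending with EOS."""
    recent = history[-max_turns:]
    return "".join(turn + EOS for turn in recent)


def echo_model(prompt):
    """Stub generator standing in for a dialogue model: echoes the last turn."""
    # The prompt ends with EOS, so the last split element is empty and the
    # final user turn is the second-to-last element.
    last_turn = prompt.split(EOS)[-2]
    return f"You said: {last_turn}"


def chat(user_inputs, generate=echo_model, max_turns=4):
    """Run a multi-turn exchange and return the full alternating history."""
    history = []
    for text in user_inputs:
        history.append(text)
        reply = generate(build_prompt(history, max_turns))
        history.append(reply)
    return history


for turn in chat(["Hi", "How are you?"]):
    print(turn)
```

Limiting the history to the last few turns keeps the model input within its context length, at the cost of forgetting older parts of the conversation.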

The notebook demonstrates text prediction with OpenVINO using the following models:

- GPT-2 model from HuggingFace Transformers.

- GPT-Neo 125M model from HuggingFace Transformers.

- PersonaGPT model from HuggingFace Transformers.

This is a self-contained example that relies solely on its own code.

We recommend running the notebook in a virtual environment. You only need a Jupyter server to start.

For details, please refer to the Installation Guide.