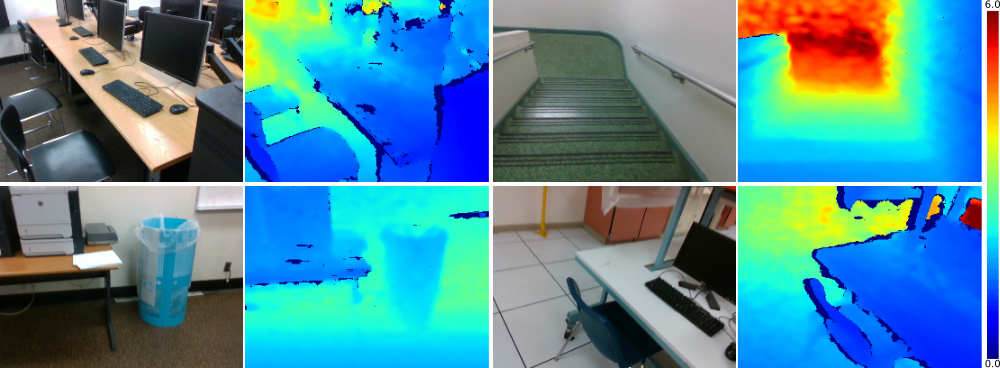

This notebook demonstrates a visual-inertial depth estimation pipeline that integrates monocular depth estimation and visual-inertial odometry to produce dense depth estimates with metric scale.
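
To make "metric scale" concrete: a monocular depth network typically predicts depth only up to an unknown scale and shift, while visual-inertial odometry yields a sparse set of points with metric depth. The sketch below shows one common way to reconcile the two, a global least-squares fit of a single scale and shift. It is an illustration only, not code from the notebook or the VI-Depth repository; the function names `fit_scale_shift` and `apply_scale_shift` and the sample values are hypothetical, and the repository's actual alignment may differ.

```python
def fit_scale_shift(relative, metric):
    """Least-squares fit of metric ~= scale * relative + shift.

    `relative` holds network predictions sampled at the sparse points,
    `metric` holds the metric values at the same points (both hypothetical).
    """
    n = len(relative)
    if n < 2 or n != len(metric):
        raise ValueError("need at least two paired samples")

    mean_r = sum(relative) / n
    mean_m = sum(metric) / n
    var_r = sum((r - mean_r) ** 2 for r in relative)
    if var_r == 0:
        raise ValueError("relative samples must not all be equal")

    # Closed-form solution of the 1-D linear regression.
    cov = sum((r - mean_r) * (m - mean_m) for r, m in zip(relative, metric))
    scale = cov / var_r
    shift = mean_m - scale * mean_r
    return scale, shift


def apply_scale_shift(dense, scale, shift):
    """Apply the fitted scale and shift to every pixel of a dense map."""
    return [[scale * value + shift for value in row] for row in dense]


# Hypothetical sparse samples: network output and metric values at 4 points.
sparse_relative = [0.1, 0.2, 0.4, 0.8]
sparse_metric = [0.7, 0.9, 1.3, 2.1]

scale, shift = fit_scale_shift(sparse_relative, sparse_metric)
dense_metric = apply_scale_shift([[0.1, 0.2], [0.4, 0.8]], scale, shift)
```

A single global scale and shift is the simplest possible alignment; it cannot correct errors that vary across the image, which is why it is presented here only as a starting intuition.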

This tutorial is adapted in its entirety from the VI-Depth repository. Some of the inference code has been copied as is into the utils directory and is used in the notebook. The inference data is a subset of the data linked here. Because that data is published as compressed archives on openly available Google Drive links, uncompressing it for just a few inference examples is not recommended. Instead, this OpenVINO™ tutorial downloads the data on the fly.

The authors have published their work here:

Monocular Visual-Inertial Depth Estimation

Diana Wofk, René Ranftl, Matthias Müller, Vladlen Koltun

The notebook contains a detailed tutorial of the visual-inertial depth estimation pipeline as follows:

1. Import the required packages and install the PyTorch image models library (`timm` v0.6.12).
2. Download an appropriate depth predictor from the VI-Depth repository.
3. Get the predictor model corresponding to the depth predictor above (a PyTorch model callable).
4. Download images and their depth maps for dummy input creation.
5. Convert and transform the models to the OpenVINO IR representation for inference, using the dummy inputs from step 4.
6. Download another set of images similar to the one used in step 4, and run the models against them.
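
The conversion and inference steps in the list above can be sketched roughly as follows. This is a minimal sketch assuming a recent OpenVINO release that exposes `ov.convert_model` and `ov.save_model`, and assuming the predictor is a `torch.nn.Module`; the helper names `ir_files`, `convert_to_ir`, and `run_ir` are hypothetical and are not taken from the notebook, which may use a different conversion API.

```python
from pathlib import Path


def ir_files(xml_path):
    """Return the (.xml, .bin) pair that makes up one saved OpenVINO IR model."""
    xml = Path(xml_path)
    return xml, xml.with_suffix(".bin")


def convert_to_ir(torch_model, dummy_input, xml_path):
    """Trace a PyTorch model with a dummy input and save it as OpenVINO IR."""
    import openvino as ov  # imported lazily so ir_files works without OpenVINO

    torch_model.eval()  # assumes a torch.nn.Module
    ov_model = ov.convert_model(torch_model, example_input=dummy_input)
    ov.save_model(ov_model, str(xml_path))  # writes the .xml and .bin files
    return ir_files(xml_path)


def run_ir(xml_path, input_array, device="CPU"):
    """Compile a saved IR model and run one inference on `input_array`."""
    import openvino as ov

    core = ov.Core()
    compiled = core.compile_model(str(xml_path), device)
    results = compiled(input_array)
    return results[compiled.output(0)]  # first output of the model
```

In this sketch the dummy input only needs the shape and dtype that the model expects, since it is used to trace the model rather than to produce meaningful predictions. The real images are passed to `run_ir` afterwards.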

If you have not installed all required dependencies, follow the Installation Guide.