Translate an image from a source domain X to a target domain Y in the absence of paired examples.

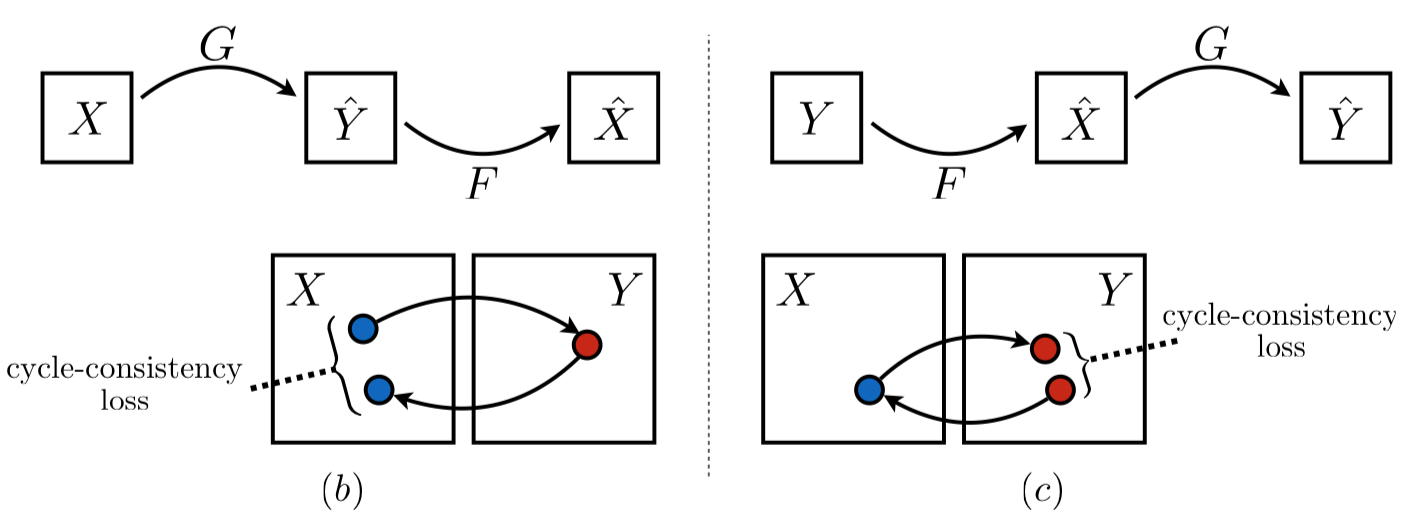

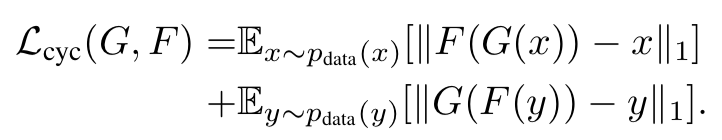

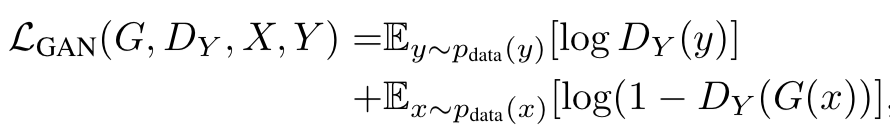

Train mappings G: X -> Y and F: Y -> X simultaneously, combining adversarial losses with a cycle-consistency loss that encourages F(G(x)) ≈ x and G(F(y)) ≈ y.
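Written out, the combined objective takes roughly the following form. The notation follows the cited paper rather than these notes: D_X and D_Y are the two discriminators, and λ is a weight on the cycle term (the paper uses λ = 10 in its experiments).

$$
\begin{aligned}
\mathcal{L}_{\text{cyc}}(G, F) &= \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{\text{cyc}}(G, F)
\end{aligned}
$$

The generators G and F minimize this objective while the discriminators D_X and D_Y maximize it.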

Generator: the autoencoder-style network from fast neural style transfer.

Two stride-2 convolutions -> residual blocks (6 for 128x128 images, 9 for 256x256 images) -> two fractionally-strided convolutions with stride 1/2
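To see how the resolution moves through this pipeline, here is a minimal shape-tracing sketch. The 3x3 kernels, padding of 1, and output padding of 1 are assumptions chosen so that each stage exactly halves or doubles the size; the notes do not state them. The helper names are hypothetical, and picking 6 blocks for anything up to 128 pixels is likewise an illustrative choice.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a standard convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def frac_conv_out(size, kernel, stride, padding, output_padding):
    """Spatial output size of a fractionally-strided (transposed) convolution."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace_generator(size):
    """Return (stage name, spatial size) pairs for a square input of side `size`."""
    # Block counts from the notes: 6 for 128x128, 9 for 256x256.
    # Applying 6 to every size <= 128 is an assumption for illustration.
    n_blocks = 6 if size <= 128 else 9
    stages = [("input", size)]
    for i in range(2):  # two stride-2 convolutions halve the resolution twice
        size = conv_out(size, kernel=3, stride=2, padding=1)
        stages.append((f"down{i + 1}", size))
    for i in range(n_blocks):  # residual blocks keep the resolution unchanged
        size = conv_out(size, kernel=3, stride=1, padding=1)
        stages.append((f"res{i + 1}", size))
    for i in range(2):  # two stride-1/2 convolutions double the resolution twice
        size = frac_conv_out(size, kernel=3, stride=2, padding=1, output_padding=1)
        stages.append((f"up{i + 1}", size))
    return stages


if __name__ == "__main__":
    for name, side in trace_generator(256):
        print(f"{name:>6}: {side}x{side}")
```

Under these assumptions a 256x256 input goes 256 -> 128 -> 64, stays at 64 through the nine residual blocks, and is upsampled back to 256.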

Discriminator: PatchGAN from pix2pix, which classifies overlapping 70x70 patches as real or fake. Advantages of PatchGAN over a conventional full-image discriminator:

- Fewer parameters

- Can be applied to arbitrarily sized images
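The 70x70 figure is the receptive field of one output unit, which can be checked with a short calculation. The layer list below (five 4x4 convolutions, the first three with stride 2) is an assumption based on the commonly used 70x70 PatchGAN configuration; it is not spelled out in these notes, and the function names are hypothetical.

```python
# Assumed (kernel, stride) pairs for the discriminator's convolutions.
PATCHGAN_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]


def receptive_field(layers):
    """Input patch side length seen by a single output unit."""
    rf = 1
    # Walk from the output back to the input, growing the field at each layer.
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf


def output_grid(size, layers, padding=1):
    """Side length of the real/fake score map for a square input of side `size`."""
    for kernel, stride in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


if __name__ == "__main__":
    print(receptive_field(PATCHGAN_LAYERS))
    for side in (128, 256, 512):
        print(side, "->", output_grid(side, PATCHGAN_LAYERS))
```

Because the network is fully convolutional, a larger input only produces a larger grid of patch scores; the weights, and therefore the parameter count, stay the same.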

Differences from DiscoGAN

-

Generator's archetecture

-

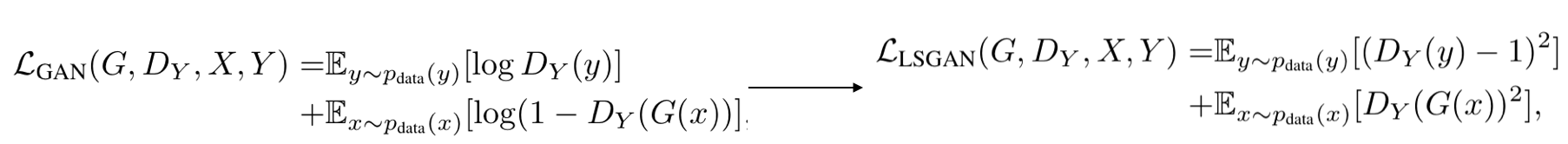

Cycle GAN replaces the negative log likelihood objective by a least square loss which is able to stable training and generate images of higher quality.

-

Cycle GAN uses a history of generated images rather than the ones produced by the latest generative networks and keeps an image buffer that stores the 50 previously generated images.

-

Cycle GAN uses instance normalization.
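The least-squares loss and the image buffer from the list above can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code: discriminator scores are plain floats, the names `lsgan_d_loss`, `lsgan_g_loss`, and `ImagePool` are hypothetical, and the 50/50 swap policy once the buffer is full is an assumption that the notes do not specify.

```python
import random


def lsgan_d_loss(d_real, d_fake):
    """Discriminator loss: push scores on real images to 1 and on fakes to 0."""
    real_term = sum((s - 1.0) ** 2 for s in d_real) / len(d_real)
    fake_term = sum(s ** 2 for s in d_fake) / len(d_fake)
    return real_term + fake_term


def lsgan_g_loss(d_fake):
    """Generator loss: push the discriminator's scores on fakes toward 1."""
    return sum((s - 1.0) ** 2 for s in d_fake) / len(d_fake)


class ImagePool:
    """History buffer of previously generated images (50 in the notes)."""

    def __init__(self, size=50, rng=None):
        self.size = size
        self.images = []
        self.rng = rng if rng is not None else random.Random()

    def query(self, image):
        """Store `image` and return the one to show to the discriminator."""
        if self.size == 0:
            return image  # buffer disabled: always use the latest image
        if len(self.images) < self.size:
            self.images.append(image)  # still filling: keep and return it
            return image
        # Assumed policy: half the time swap in the new image and return
        # an older one; otherwise return the new image without storing it.
        if self.rng.random() < 0.5:
            idx = self.rng.randrange(self.size)
            old = self.images[idx]
            self.images[idx] = image
            return old
        return image


if __name__ == "__main__":
    pool = ImagePool(size=50)
    shown = [pool.query(step) for step in range(200)]  # integers stand in for images
    print(len(pool.images), lsgan_g_loss([0.2, 0.9]))
```

In a training loop, each freshly generated image would pass through `query` before the discriminator update, so the discriminator sees a mix of recent and older fakes.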

Zhu J Y, Park T, Isola P, et al. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks[J]. arXiv preprint arXiv:1703.10593, 2017.