- Login/Sign Up for IBM Cloud: https://ibm.biz/ExplainableAI

- Hands-On Guide: https://ibm.biz/ExplainableAi-HandsOn

- Workshop Replay: https://www.aisummit.io/

Imagine boarding the Titanic in 2021 after giving the captain all your details as a passenger. Three people are involved: the data scientist, the captain, and the passenger. Suppose the company that built the Titanic has created a machine learning (ML) model to predict each passenger's chance of survival in case of a disaster. The data scientist's job is to make that model explainable to passengers who are not technical, so that they understand the reasons why the model predicts they may not survive.

After thinking through this scenario, you will agree that it's not enough to make predictions. Sometimes you need to generate a deep understanding of them. Just because you can model something doesn't mean you really know how it works.

There are multiple reasons why we need to understand the underlying mechanism of machine learning models:

- Human Readability

- Bias Mitigation

- Justifiability

- Interpretability

**AI Explainability 360 is a comprehensive open-source toolkit of state-of-the-art algorithms that support the interpretability and explainability of machine learning models.**

This Code Pattern highlights the use of the AI Explainability 360 toolkit to demystify the decisions taken by a machine learning model. The resulting insights help policy-makers and data scientists develop trusted, explainable AI applications, and they give the general public transparency into the machine's decision-making process. Understanding what happens behind the scenes is essential to fostering trust and confidence in AI systems.
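
To make "demystifying a decision" concrete for the Titanic scenario, here is a minimal pure-Python sketch of the simplest kind of local explanation, assuming a hypothetical logistic-regression survival model. The weights, feature names (`is_female`, `pclass`, `age`, `fare`), and the baseline passenger are invented for illustration, and this is not how the AIX360 explainers work internally. For a linear model, each feature's contribution `w * (x - b)` to the log-odds can be read off directly and shown to the passenger.

```python
import math

# Hypothetical logistic-regression weights for a Titanic-style survival model.
# These numbers are invented for illustration; they are NOT learned from data.
WEIGHTS = {"is_female": 2.5, "pclass": -0.9, "age": -0.03, "fare": 0.002}
BIAS = 1.0


def predict_survival(passenger):
    """Return the (hypothetical) model's survival probability for one passenger."""
    z = BIAS + sum(w * passenger[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def explain(passenger, baseline):
    """Per-feature contributions to the log-odds, relative to a baseline passenger.

    For a linear model, w * (x - b) summed over features equals exactly the
    difference in log-odds between the passenger and the baseline.
    """
    contributions = {
        name: w * (passenger[name] - baseline[name]) for name, w in WEIGHTS.items()
    }
    # Most negative first: the features that lower the survival estimate most.
    return sorted(contributions.items(), key=lambda item: item[1])


# Hypothetical third-class male passenger, compared with a reference passenger.
passenger = {"is_female": 0, "pclass": 3, "age": 40, "fare": 8.0}
baseline = {"is_female": 0.5, "pclass": 2, "age": 30, "fare": 30.0}

print(f"P(survive) = {predict_survival(passenger):.2f}")
for name, contribution in explain(passenger, baseline):
    print(f"{name:>10}: {contribution:+.2f}")
```

Because the model is linear, the contributions add up exactly to the change in log-odds between the passenger and the baseline, which is what makes this explanation faithful. For non-linear models you would reach for one of the toolkit's explainers instead.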

To demonstrate the AI Explainability 360 toolkit, we use the existing Fraud Detection Code Pattern to showcase and explain the model's decisions, and to guide the practitioner in choosing an appropriate explanation method or algorithm depending on the type of customer (Data Scientist, General Public, SME, Policy Maker) who needs an explanation of the model.

This Code Pattern also demonstrates the use of ART (Adversarial Robustness Toolbox) to defend and evaluate machine learning models and applications against the adversarial threats of evasion, poisoning, extraction, and inference.
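
As a rough illustration of the evasion threat, the sketch below applies the fast gradient sign method (FGSM) to a toy logistic-regression model in plain Python. ART provides its own implementation (`FastGradientMethod` in `art.attacks.evasion`) that works with real model wrappers; this code does not use ART, and the weights, input vector, and `epsilon` value are hypothetical.

```python
import math

# Toy logistic-regression model; the weights are hypothetical.
WEIGHTS = [1.5, -2.0, 0.5]
BIAS = 0.1


def predict_proba(x):
    """Probability of the positive class for feature vector x."""
    z = BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(x, y_true, epsilon):
    """Fast Gradient Sign Method (an evasion attack) for this model.

    With binary cross-entropy loss, the gradient of the loss with respect to
    the input of a logistic-regression model is (p - y) * w. FGSM moves every
    feature by epsilon in the direction of that gradient's sign, which
    increases the loss on the true label.
    """
    p = predict_proba(x)
    gradient = [(p - y_true) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


x = [0.4, 0.1, 0.2]  # correctly classified as positive (p > 0.5)
x_adv = fgsm(x, y_true=1, epsilon=0.3)
print(f"clean p = {predict_proba(x):.3f}, adversarial p = {predict_proba(x_adv):.3f}")
```

With these numbers, a perturbation of at most 0.3 per feature moves the predicted probability from about 0.65 to about 0.35, flipping the class. Defences evaluated with ART aim to make such small perturbations ineffective.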

No single approach to explaining algorithms

Currently, the AI Explainability 360 toolkit provides eight state-of-the-art explainability algorithms that can add transparency throughout AI systems. Choose among them according to your requirements and the problem statement. The algorithms are explained in detail on this link.
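
Because there is no single approach, it can help to write the choice down explicitly. The sketch below encodes one possible mapping from the four audiences named earlier to AIX360 algorithms (GLRM, BRCG, ProtoDash, CEM). The mapping is an assumption made for illustration, loosely following the idea that technical audiences benefit from global, directly interpretable models while individuals affected by a decision benefit from local explanations; the notebook may choose differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    algorithm: str
    scope: str  # "global" (whole model) or "local" (a single prediction)
    rationale: str


# Hypothetical persona-to-explainer mapping, assumed for illustration only.
RECOMMENDATIONS = {
    "data scientist": Recommendation(
        "GLRM", "global", "directly interpretable model whose rules and weights can be inspected"),
    "policy maker": Recommendation(
        "BRCG", "global", "a short Boolean rule set summarising the model's behaviour"),
    "sme": Recommendation(
        "ProtoDash", "local", "prototypical past cases similar to the one being explained"),
    "general public": Recommendation(
        "CEM", "local", "what is present or absent that drives this one prediction"),
}


def recommend(persona):
    """Look up the explainer for a persona (case- and whitespace-insensitive)."""
    key = persona.strip().lower()
    if key not in RECOMMENDATIONS:
        raise ValueError(f"unknown persona {persona!r}; expected one of {sorted(RECOMMENDATIONS)}")
    return RECOMMENDATIONS[key]


print(recommend("General Public"))
```

Keeping the mapping in data rather than scattered `if` statements makes it easy to revise once you see which explanations your audiences actually find useful.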

In this Code Pattern, we will demonstrate the usage of AIX360 and how we can utilize different explainers to explain the decisions made by the machine learning model.

If you are an existing user, please log in to IBM Cloud.

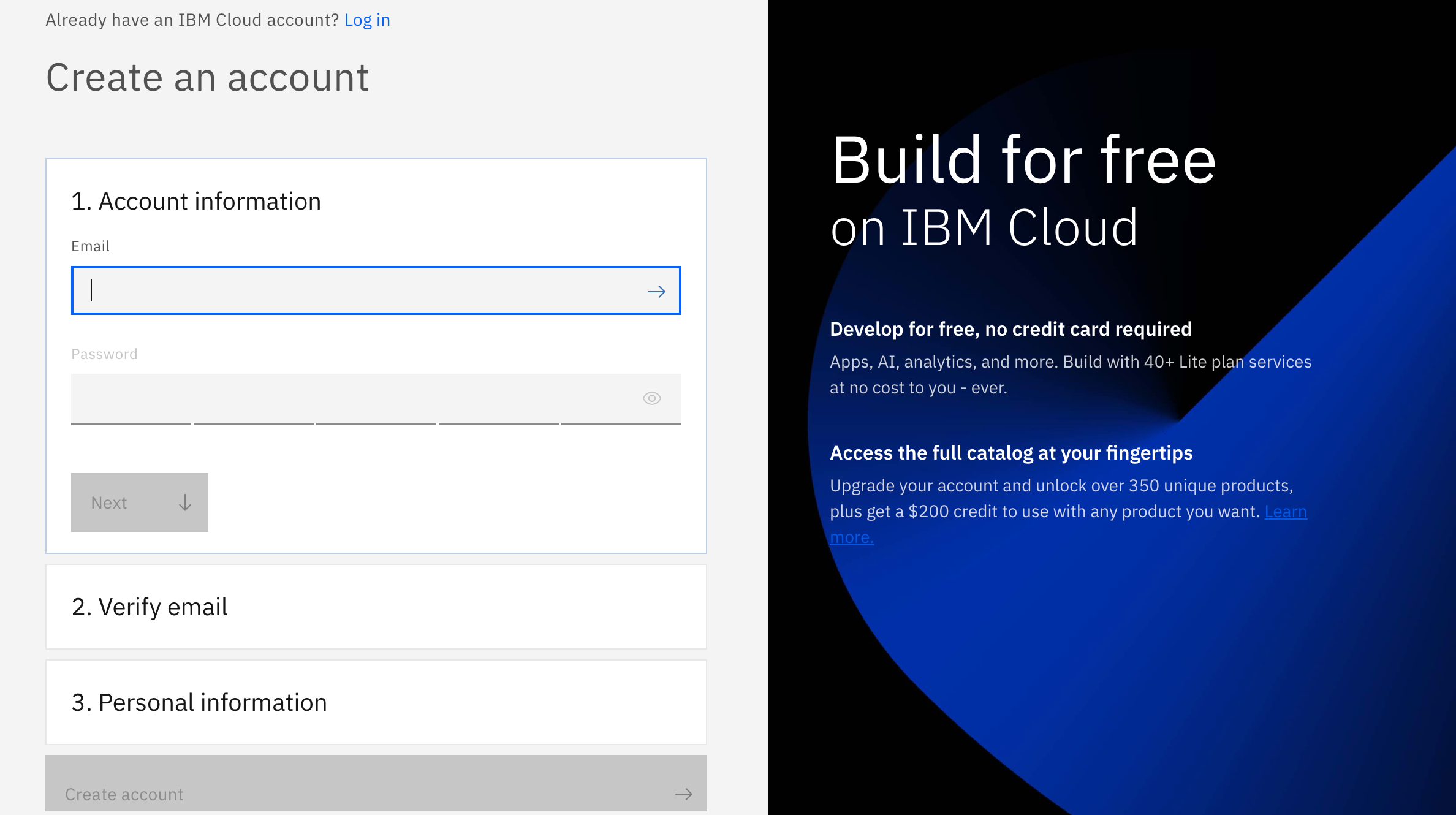

And if you are not, don't worry! We have got you covered! There are 3 steps to create your account on IBM Cloud:

1 - Enter your email and password.

2 - Verify your account using the link sent to your registered email.

3 - Fill in the personal information fields.

**Note:** Please make sure you select the country you are in when asked at any step of the registration process.

- Log in to Watson Studio powered by Spark, initiate Cloud Object Storage, and create a project.

- Upload the .csv data file to Object Storage.

- Load the data file in a Watson Studio notebook.

- Install the AI Explainability 360 toolkit in the Watson Studio notebook.

- Visualize the explainability and interpretability of the AI model for the three different types of users.
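
The "load the data file" step can be sketched without any cloud dependencies. The example below parses a few hypothetical rows laid out like a Titanic passenger file (the column names `Survived`, `Pclass`, `Sex`, `Age` are assumptions) with Python's `csv` module and computes survival rates per group, a typical first look at the data before any model is explained. In Watson Studio you would read the uploaded file from Cloud Object Storage instead of an inline string.

```python
import csv
import io
from collections import defaultdict

# A few hypothetical rows in a Titanic-style layout, standing in for the .csv
# file uploaded to Object Storage. Column names are assumptions.
SAMPLE_CSV = """Survived,Pclass,Sex,Age
1,1,female,38
0,3,male,22
1,3,female,26
0,1,male,54
0,3,male,
"""


def load_rows(text):
    """Parse CSV text into dicts, converting types and keeping missing ages as None."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        rows.append({
            "survived": int(record["Survived"]),
            "pclass": int(record["Pclass"]),
            "sex": record["Sex"],
            "age": float(record["Age"]) if record["Age"] else None,
        })
    return rows


def survival_rate_by(rows, key):
    """Fraction of passengers who survived, grouped by the given column."""
    totals, survivors = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row[key]] += 1
        survivors[row[key]] += row["survived"]
    return {group: survivors[group] / totals[group] for group in totals}


rows = load_rows(SAMPLE_CSV)
print(survival_rate_by(rows, "sex"))
print(survival_rate_by(rows, "pclass"))
```

Missing values (such as the empty `Age` in the last row) are kept as `None` so that they can be handled explicitly before training a model.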

- IBM Watson Studio: Analyze data using RStudio, Jupyter, and Python in a configured, collaborative environment that includes IBM value-adds, such as managed Spark.

- IBM AI Explainability 360: The AI Explainability 360 toolkit is an open-source library that supports interpretability and explainability of datasets and machine learning models. The AI Explainability 360 Python package includes a comprehensive set of algorithms that cover different dimensions of explanations along with proxy explainability metrics.

- IBM Cloud Object Storage: An IBM Cloud service that provides an unstructured cloud data store to build and deliver cost-effective apps and services with high reliability and fast speed to market. This code pattern uses Cloud Object Storage.

- Artificial Intelligence: Any system which can mimic cognitive functions that humans associate with the human mind, such as learning and problem solving.

- Data Science: Systems and scientific methods to analyze structured and unstructured data in order to extract knowledge and insights.

- Analytics: Analytics delivers the value of data for the enterprise.

- Python: Python is a programming language that lets you work more quickly and integrate your systems more effectively.
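
The AI Explainability 360 description above mentions proxy explainability metrics. One such metric is faithfulness: remove each feature (replace it with a baseline value), measure how much the predicted probability drops, and correlate those drops with the feature attributions. Attributions that track the model's real behaviour score close to 1. The sketch below re-implements that idea in plain Python for a toy model with hypothetical weights; it is not AIX360's own implementation.

```python
import math

# Toy linear model with hypothetical weights, standing in for a trained model.
WEIGHTS = [2.0, -1.0, 0.5, 0.0]
BIAS = -0.5


def predict_proba(x):
    """Probability of the positive class."""
    z = BIAS + sum(w * v for w, v in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def pearson(a, b):
    """Pearson correlation coefficient (assumes neither list is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def faithfulness(x, attributions, base):
    """Correlate attributions with the probability drop when each feature is removed.

    "Removing" feature i means replacing x[i] with base[i]. A score near 1 means
    the attributions rank features the way the model actually depends on them.
    This sketch assumes x is predicted as the positive class.
    """
    p = predict_proba(x)
    drops = []
    for i in range(len(x)):
        x_removed = list(x)
        x_removed[i] = base[i]
        drops.append(p - predict_proba(x_removed))
    return pearson(attributions, drops)


x = [1.0, 0.5, 1.0, 2.0]
base = [0.0, 0.0, 0.0, 0.0]
# Exact contributions for a linear model, and the same values on the wrong features.
honest = [w * (v - b) for w, v, b in zip(WEIGHTS, x, base)]
misleading = list(reversed(honest))

print(f"honest attributions:     {faithfulness(x, honest, base):.2f}")
print(f"misleading attributions: {faithfulness(x, misleading, base):.2f}")
```

Here the exact linear contributions score about 0.99, while the same values assigned to the wrong features score below zero, which is the kind of signal a proxy metric gives when no ground-truth explanation is available.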

Log in to IBM Cloud, or create an account, using this link: https://ibm.biz/ExplainableAI

Go to your IBM Cloud dashboard, type "Watson Studio" in the search box, select the service, and click “Create” on the service page.

- On the new project page, give your project a name. You will also need to associate an IBM Cloud Object Storage instance to store the data set.

- Under “Select Storage Service”, click the “Add” button. This takes you to the IBM Cloud Object Storage service page. Leave the service on the “Lite” tier and click the “Create” button at the bottom of the page. You are prompted to name the service and choose the resource group. Once you have given it a name, click “Create”.

- Once the instance is created, you are taken back to the project page. Click “Refresh” and you should see your newly created Cloud Object Storage instance under Storage.

- Click the “Create” button at the bottom right of the page to create your project.

Click “Get started”; this takes you to the Cloud Pak for Data dashboard.

Select “Create a project”.

Click “Create an empty project”.

Give your project a unique name and click “Create”.

From the IBM Watson dashboard, click “Add to project” and select “Notebook”.

Then add the notebook from its URL using the steps below.

In the “Add a notebook” section, select the “From URL” option and choose the Default Python 3.7 XS (2 vCPU 8 GB RAM) runtime.

Add the following notebook URL in the Notebook URL field:

https://github.com/IBMDeveloperMEA/Trusted-AI-Build-Explainable-ML-Models-using-AIX360/blob/main/Titanic-aix360-v1.ipynb

The rest of the concepts are explained in the notebook; let's go through them together!
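
Before stepping through the notebook, you may want to confirm that the toolkits are available in the selected runtime. This small standard-library check is an assumption about how you might do that, not a step taken from the notebook; the pip package names are the published ones for AIX360 and ART.

```python
import importlib.util

# Import name -> pip package name. The check below only confirms that a module
# with the given import name exists in the runtime, not which package provides it.
PIP_NAMES = {"aix360": "aix360", "art": "adversarial-robustness-toolbox"}


def missing_packages(import_names):
    """Return the import names that cannot be found in the current runtime."""
    return [name for name in import_names if importlib.util.find_spec(name) is None]


missing = missing_packages(PIP_NAMES)
if missing:
    pip_args = " ".join(PIP_NAMES[name] for name in missing)
    print(f"Missing: {missing}. In a notebook cell, run: !pip install {pip_args}")
else:
    print("AIX360 and ART are importable in this runtime.")
```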


Check out Adversarial Robustness 360 (ART):

Check out AI Fairness 360 (AIF360):

Check out AI Factsheets 360 (AIFS360):

- [Fawaz Siddiqi](https://linktr.ee/thefaz)

- [Qamar un Nisa](https://www.linkedin.com/in/qamarnisa/)