
Update Domino for Llama3 #7084

Open. shenzheyu wants to merge 20 commits into deepspeedai:master from shenzheyu:master


@hwchen2017, please follow up on this PR. Thank you!

Signed-off-by: Zheyu SHEN <[email protected]>

Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

Signed-off-by: Zheyu SHEN <[email protected]>

Signed-off-by: Zheyu SHEN <[email protected]>

Propagate API change. Signed-off-by: Olatunji Ruwase <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

- add zero2 test - minor fix with transformer version update & ds master merge. Signed-off-by: inkcherry <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

With bf16 and MoE, refreshing the optimizer state from a bf16 checkpoint raises `IndexError: list index out of range`. Signed-off-by: shaomin <[email protected]> Co-authored-by: shaomin <[email protected]> Co-authored-by: Hongwei Chen <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

**Auto-generated PR to update version.txt after a DeepSpeed release** Released version - 0.16.4 Author - @loadams Co-authored-by: loadams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

@jeffra and I fixed this many years ago, so bringing this doc to a correct state. --------- Signed-off-by: Stas Bekman <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

This PR adds Tecorigin SDAA accelerator support. With this PR, DeepSpeed supports SDAA as a backend for training tasks. --------- Signed-off-by: siqi <[email protected]> Co-authored-by: siqi <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Co-authored-by: Logan Adams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

More information on libuv in PyTorch: https://pytorch.org/tutorials/intermediate/TCPStore_libuv_backend.html

Issue tracking the prevalence of the error on Windows (unresolved at the time of this PR): pytorch/pytorch#139990

LibUV GitHub: https://github.com/libuv/libuv

Windows error:

```
  File "C:\hostedtoolcache\windows\Python\3.12.7\x64\Lib\site-packages\torch\distributed\rendezvous.py", line 189, in _create_c10d_store
    return TCPStore(
           ^^^^^^^^^
RuntimeError: use_libuv was requested but PyTorch was build without libuv support
```

`use_libuv` isn't well supported on Windows in pytorch <2.4, so we need to guard around this case.

---------

Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>
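A minimal sketch of what such a guard could look like, assuming the check is "Windows and PyTorch older than 2.4" as the commit message states. The helper names are hypothetical and this is not the code from the PR. It relies on the `USE_LIBUV` environment variable, which PyTorch's rendezvous code consults when no explicit `use_libuv` option is given; treat that detail as an assumption to verify against your PyTorch version.

```python
import os
from typing import MutableMapping, Optional, Tuple


def parse_major_minor(version: str) -> Tuple[int, int]:
    """Turn a version string such as '2.3.1+cu121' into (2, 3)."""
    parts = version.split("+")[0].split(".")
    return int(parts[0]), int(parts[1])


def needs_libuv_guard(platform: str, torch_version: str) -> bool:
    """Hypothetical predicate: True on Windows with PyTorch < 2.4."""
    return platform == "win32" and parse_major_minor(torch_version) < (2, 4)


def apply_libuv_guard(platform: str,
                      torch_version: str,
                      env: Optional[MutableMapping[str, str]] = None) -> bool:
    """Set USE_LIBUV=0 in `env` when the guard applies; return whether it did."""
    env = os.environ if env is None else env
    if needs_libuv_guard(platform, torch_version):
        env["USE_LIBUV"] = "0"  # ask the rendezvous code not to request libuv
        return True
    return False
```

In a real setup one would likely call `apply_libuv_guard(sys.platform, torch.__version__)` before the process group is initialized.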

Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

@fukun07 and I discovered a bug when using the `offload_states` and `reload_states` APIs of the Zero3 optimizer. When using grouped parameters (for example, in weight decay or grouped lr scenarios), the order of the parameters mapping in `reload_states` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L2953)) does not correspond with the initialization of `self.lp_param_buffer` ([here](https://github.com/deepspeedai/DeepSpeed/blob/14b3cce4aaedac69120d386953e2b4cae8c2cf2c/deepspeed/runtime/zero/stage3.py#L731)), which leads to misaligned parameter loading. This issue was overlooked by the corresponding unit tests ([here](https://github.com/deepspeedai/DeepSpeed/blob/master/tests/unit/runtime/zero/test_offload_states.py)), so we fixed the bug in our PR and added the corresponding unit tests. --------- Signed-off-by: Wei Wu <[email protected]> Co-authored-by: Masahiro Tanaka <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>
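The class of bug described above can be illustrated with a toy example in plain Python. This is not DeepSpeed code: the `pack` and `unpack` helpers and the parameter names are hypothetical, and lists stand in for tensors. The point is only that a flat buffer filled in one parameter order and read back in another yields misaligned values.

```python
from typing import Dict, List


def pack(params: Dict[str, List[float]], order: List[str]) -> List[float]:
    """Concatenate parameters into one flat buffer following `order`."""
    buffer: List[float] = []
    for name in order:
        buffer.extend(params[name])
    return buffer


def unpack(buffer: List[float], sizes: Dict[str, int],
           order: List[str]) -> Dict[str, List[float]]:
    """Slice the flat buffer back into parameters following `order`."""
    restored, offset = {}, 0
    for name in order:
        restored[name] = buffer[offset:offset + sizes[name]]
        offset += sizes[name]
    return restored


params = {"weight": [1.0, 2.0, 3.0], "bias": [9.0], "norm": [5.0, 6.0]}
sizes = {name: len(values) for name, values in params.items()}

# Grouped parameters (e.g. a weight-decay group followed by a no-decay group)
# can be laid out in a different order than the module defines them.
group_order = ["weight", "norm", "bias"]
module_order = ["weight", "bias", "norm"]

buffer = pack(params, group_order)
restored_ok = unpack(buffer, sizes, group_order)    # same order: aligned
restored_bad = unpack(buffer, sizes, module_order)  # different order: misaligned
```

Here `restored_bad["bias"]` receives a value that belongs to `norm`, which is why a unit test covering grouped parameters is needed to catch the mismatch.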

Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

Following changes in PyTorch trace rules, my previous PR to avoid graph breaks caused by the logger is no longer relevant. Instead, I've added this functionality to torch dynamo: pytorch/pytorch@16ea0dd. This commit allows the user to configure torch to ignore logger methods and avoid the associated graph breaks.

To ignore logger methods: `os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1"`

To ignore logger methods except for specific ones (for example, `info` and `isEnabledFor`): `os.environ["DISABLE_LOGS_WHILE_COMPILING"] = "1"` and `os.environ["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"] = "info, isEnabledFor"`

Signed-off-by: ShellyNR <[email protected]> Co-authored-by: snahir <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>
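The two settings above could be wrapped in a small helper, sketched here. The environment variable names come from the commit message; the function name `configure_logger_ignore` and its parameters are hypothetical. Whether the variables must be set before `torch` is imported is not stated in the source, so setting them as early as possible is an assumption.

```python
import os
from typing import MutableMapping, Optional, Sequence


def configure_logger_ignore(
        exclude: Sequence[str] = (),
        env: Optional[MutableMapping[str, str]] = None) -> MutableMapping[str, str]:
    """Ask torch to ignore logger methods while compiling.

    `exclude` lists logger methods that should NOT be ignored.
    """
    env = os.environ if env is None else env
    env["DISABLE_LOGS_WHILE_COMPILING"] = "1"
    if exclude:
        # Same comma-separated format as shown in the commit message.
        env["LOGGER_METHODS_TO_EXCLUDE_FROM_DISABLE"] = ", ".join(exclude)
    return env
```

For example, `configure_logger_ignore(["info", "isEnabledFor"])` reproduces the second configuration from the commit message.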

The partition tensor doesn't need to move to the current device when meta load is used. Signed-off-by: Lai, Yejing <[email protected]> Co-authored-by: Olatunji Ruwase <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>

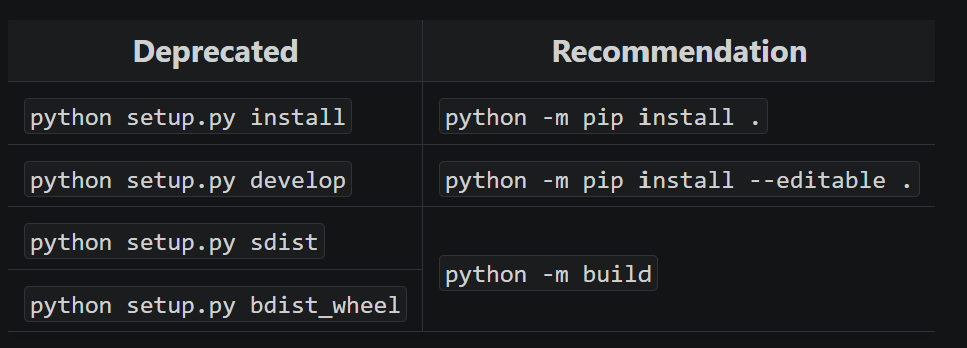

…t` (deepspeedai#7069) With future changes coming to pip/python/etc., we need to stop calling `python setup.py ...` and replace it: https://packaging.python.org/en/latest/guides/modernize-setup-py-project/#should-setup-py-be-deleted This means we need to install the `build` package, which is added here as well. Additionally, we pass the `--sdist` flag so that only the sdist is built, not the wheel. --------- Signed-off-by: Logan Adams <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>
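As a rough illustration of the replacement described above, the sketch below invokes the `build` package with `--sdist` from Python. The helper names are hypothetical and this is not the workflow change from the PR itself. It assumes `build` has already been installed (for example with `pip install build`).

```python
import subprocess
import sys
from typing import List


def sdist_command() -> List[str]:
    """Command that replaces a direct `python setup.py ...` call for sdists."""
    return [sys.executable, "-m", "build", "--sdist"]


def build_sdist(project_dir: str = ".") -> None:
    """Build only the source distribution for the project in `project_dir`."""
    subprocess.run(sdist_command(), cwd=project_dir, check=True)
```

By default `build` writes its output to a `dist/` directory inside the project.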

…eepspeedai#7076) This reverts commit 8577bd2. Fixes: deepspeedai#7072 Signed-off-by: Zheyu SHEN <[email protected]>

Add deepseekv3 autotp. Signed-off-by: Lai, Yejing <[email protected]> Signed-off-by: Zheyu SHEN <[email protected]>


No description provided.