8 attacks against 9 defense methods, with 5 poisoning ratios, on 4 datasets and 5 models

My torch configuration:

```shell
# CUDA 11.3
pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0 --extra-index-url https://download.pytorch.org/whl/cu113
```

- attack : all attacks should be put here, each in a separate file

- defense : all defenses should be put here, each in a separate file

- config : all config files in YAML (the configs for every attack and defense should be put here, each in a separate file)

- data : data files

- models : models that are not in torchvision

- record : all files and logs generated by experiments (also required by the load_result function)

- utils : frequently used functions and other tools

- aggregate_block : frequently used blocks in scripts

- bd_img_transform : basic perturbations on images

- bd_label_transform : basic transforms on labels

- dataset : scripts for loading the datasets

- dataset_preprocess : scripts for preprocessing transforms on the datasets

- backdoor_generate_pindex.py : functions for generating the poison index

- bd_dataset.py : the wrapper for backdoored datasets

- trainer_cls.py : basic functions for the classification case

- resource : pre-trained models (e.g. the auto-encoder used by an attack) or other large files (other than data)
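backdoor_generate_pindex.py is only described as generating the poison index. A minimal sketch of what such a function could look like, assuming an all-to-one attack in which a random subset of non-target-class training samples, sized by the poisoning ratio, is marked for poisoning. The function name `generate_poison_index` and its parameters are hypothetical, not the repository's actual API.

```python
import numpy as np


def generate_poison_index(labels, target_label, ratio, seed=0):
    """Hypothetical sketch: return a 0/1 array marking which samples to poison.

    Assumes an all-to-one attack, so only samples whose label differs from
    target_label are candidates. `ratio` is the poisoning ratio relative to
    the whole training set.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    candidates = np.flatnonzero(labels != target_label)
    n_poison = int(round(ratio * len(labels)))
    if n_poison > len(candidates):
        raise ValueError("poisoning ratio too large for the candidate pool")
    chosen = rng.choice(candidates, size=n_poison, replace=False)
    pindex = np.zeros(len(labels), dtype=np.int64)
    pindex[chosen] = 1
    return pindex


# Usage: 1000 samples over 10 classes, target class 0, poisoning ratio 10%
labels = np.repeat(np.arange(10), 100)
pindex = generate_poison_index(labels, target_label=0, ratio=0.1)
print(int(pindex.sum()))  # number of samples selected for poisoning
```

Storing the index as a 0/1 mask rather than a list of positions makes it easy to save alongside the dataset and to look up whether any given sample is poisoned.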

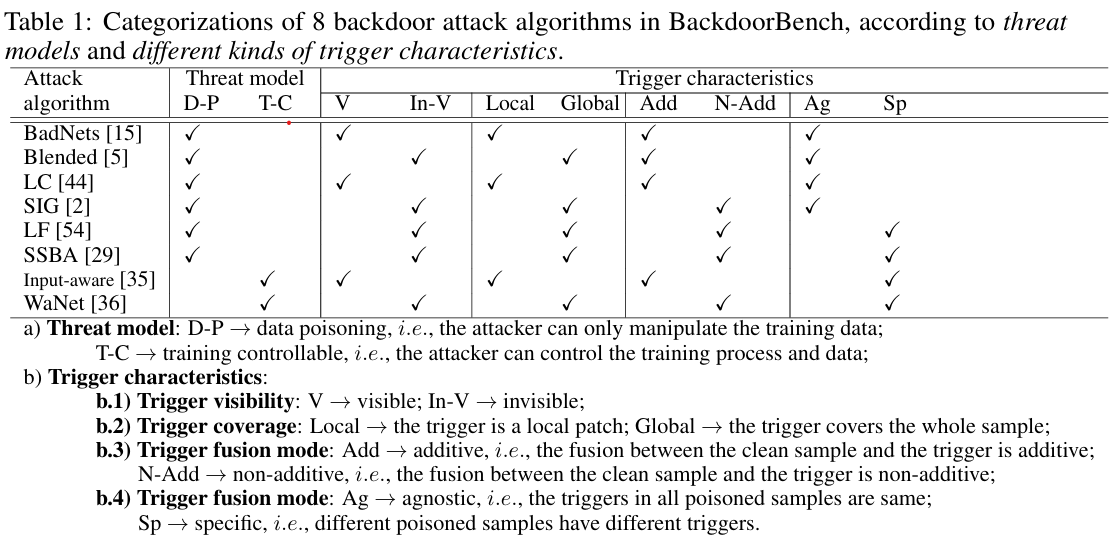

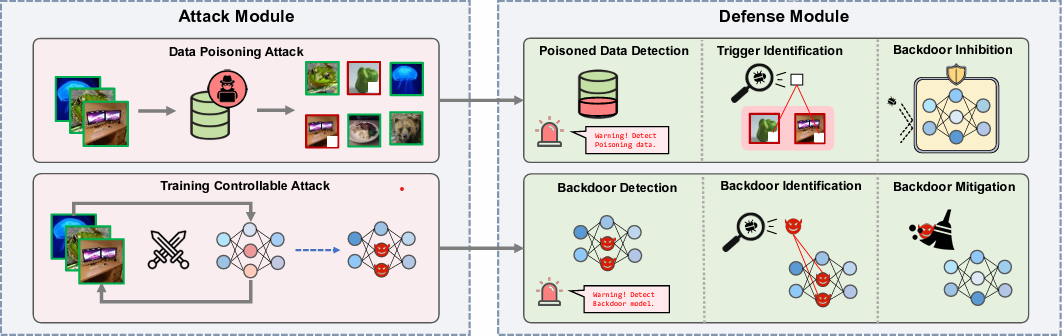

Existing backdoor attack methods can be partitioned into two general categories: data poisoning and training-controllable attacks.

| Attack | File name | Paper |
|---|---|---|
| BadNets | badnets_attack.py | BadNets: Identifying Vulnerabilities in the Machine Learning Model Supply Chain, IEEE Access 2019 |
| Blended | blended_attack.py | Targeted Backdoor Attacks on Deep Learning Systems Using Data Poisoning, arXiv 2017 |
| Label Consistent | lc_attack.py | Label-Consistent Backdoor Attacks, arXiv 2019 |
| SIG | sig_attack.py | A new backdoor attack in CNNs by training set corruption, ICIP 2019 |
| Low Frequency | lf_attack.py | Rethinking the Backdoor Attacks' Triggers: A Frequency Perspective, ICCV 2021 |
| SSBA | ssba_attack.py | Invisible Backdoor Attack with Sample-Specific Triggers, ICCV 2021 |
| Input-aware | inputaware_attack.py | Input-Aware Dynamic Backdoor Attack, NeurIPS 2020 |
| WaNet | wanet_attack.py | WaNet -- Imperceptible Warping-Based Backdoor Attack, ICLR 2021 |

For SSBA, the file we used (with 1 bit embedded in the images) is available at: https://drive.google.com/drive/folders/1QU771F2_1mKgfNQZm3OMCyegu2ONJiU2?usp=sharing

For the LC attack, the file we used is available at: https://drive.google.com/drive/folders/1Qhj5vXX7kX74IWdrQDwguWsV8UvJmzF4

For the LF attack, the file we used is available at: https://drive.google.com/drive/folders/16JrANmjDtvGc3lZ_Cv4lKEODFjRebmvk

Data poisoning: the attacker can only manipulate the training data.

Data poisoning attacks can be divided into two main categories based on the property of the target labels. See the "Pre-knowledge" section below.

Input: a set of clean samples.

Functions: for data poisoning attacks, the code provides functions for trigger generation, poisoned sample generation (i.e., inserting the trigger into a clean sample), and label changing.

Output: a poisoned dataset containing both poisoned and clean samples.

- badnets_attack.py————BadNets

- blended_attack.py————Blended

- lc_attack.py————Label Consistent

- sig_attack.py————SIG

- lf_attack.py————Low Frequency

- ssba_attack.py————SSBA
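The three data-poisoning functions listed above (trigger generation, inserting the trigger into a clean sample, label changing) can be sketched in NumPy as follows. This is a hypothetical illustration, not the code in badnets_attack.py or blended_attack.py: it assumes uint8 images of shape (H, W, C), a 3x3 white patch in the bottom-right corner for BadNets, a blending ratio alpha = 0.2 for Blended, and an all-to-one label change. All function names are made up for the example.

```python
import numpy as np


def badnets_patch(img, patch_size=3, value=255):
    """BadNets-style trigger (assumed): stamp a square patch in the bottom-right corner."""
    out = img.copy()
    out[-patch_size:, -patch_size:, :] = value
    return out


def blended(img, trigger, alpha=0.2):
    """Blended-style trigger: (1 - alpha) * img + alpha * trigger, rounded back to uint8."""
    mixed = (1.0 - alpha) * img.astype(np.float64) + alpha * trigger.astype(np.float64)
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)


def poison_dataset(images, labels, pindex, target_label, transform):
    """Apply `transform` to the samples flagged in pindex and relabel them to
    target_label (all-to-one). Clean samples are returned unchanged."""
    images = images.copy()
    labels = np.asarray(labels).copy()
    for i in np.flatnonzero(pindex):
        images[i] = transform(images[i])
        labels[i] = target_label
    return images, labels


# Toy data: 8 random 32x32 RGB images, samples 0 and 2 are poisoned, target class 0
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)
labels = np.array([1, 2, 3, 4, 5, 6, 7, 8])
pindex = np.array([1, 0, 1, 0, 0, 0, 0, 0])
trigger = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

bd_images, bd_labels = poison_dataset(images, labels, pindex, 0, badnets_patch)
bl_images, bl_labels = poison_dataset(
    images, labels, pindex, 0, lambda x: blended(x, trigger, alpha=0.2)
)
```

Keeping the trigger as a plain `transform` callable mirrors the split between the image-side and label-side transforms in utils, so a different trigger can be swapped in without touching the relabeling logic.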

Training controllable: the attacker can control both the training process and the training data.

Input: a set of clean samples and a model architecture.

Output: a backdoored model and the learned trigger.

- inputaware_attack.py————Input-aware

- wanet_attack.py————WaNet
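In training-controllable attacks the trigger is produced together with the model. As one illustration, the WaNet warping trigger can be sketched in NumPy as below. This is a simplified, hypothetical sketch based on the WaNet paper's description, not the code in wanet_attack.py: a random k x k displacement grid is normalised, upsampled to the image size, scaled by the warping strength s, and used to resample the image. Assumptions: the upsampling here is bilinear (WaNet's official code uses bicubic), coordinates are clamped at the border, and the training loop is omitted. k and s follow the paper's notation; the function names are made up.

```python
import numpy as np


def _bilinear_sample(img, ys, xs):
    """Sample img (H, W, C) at float pixel coordinates ys, xs of shape (h, w)
    with bilinear interpolation; coordinates are clamped to the image border."""
    H, W = img.shape[:2]
    ys = np.clip(ys, 0, H - 1)
    xs = np.clip(xs, 0, W - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, H - 1)
    x1 = np.minimum(x0 + 1, W - 1)
    wy = (ys - y0)[..., None]
    wx = (xs - x0)[..., None]
    top = (1 - wx) * img[y0, x0] + wx * img[y0, x1]
    bottom = (1 - wx) * img[y1, x0] + wx * img[y1, x1]
    return (1 - wy) * top + wy * bottom


def make_warp_field(size, k=4, s=0.5, seed=0):
    """Random k x k displacement grid in [-1, 1], normalised by its mean absolute
    value, upsampled to size x size and scaled by s / size.
    Returns displacements in normalised [-1, 1] units, shape (size, size, 2)."""
    rng = np.random.default_rng(seed)
    noise = rng.uniform(-1, 1, size=(k, k, 2))
    noise /= np.mean(np.abs(noise))
    coords = np.linspace(0, k - 1, size)
    yy, xx = np.meshgrid(coords, coords, indexing="ij")
    field = _bilinear_sample(noise, yy, xx)  # bilinear upsampling (assumption)
    return s * field / size


def wanet_warp(img, field):
    """Warp a float image (H, W, C) by a displacement field (H, W, 2) in normalised units."""
    H, W = img.shape[:2]
    ys, xs = np.meshgrid(np.arange(H, dtype=float), np.arange(W, dtype=float), indexing="ij")
    # Normalised [-1, 1] spans H - 1 (or W - 1) pixels, as with align_corners=True
    xs = xs + field[..., 0] * (W - 1) / 2
    ys = ys + field[..., 1] * (H - 1) / 2
    return _bilinear_sample(img, ys, xs)


img = np.random.default_rng(1).random((32, 32, 3))
field = make_warp_field(32, k=4, s=0.5, seed=0)
warped = wanet_warp(img, field)
```

Because the displacement is a fraction of a pixel, the warped image looks almost identical to the original, which is what makes this kind of trigger hard to spot by eye.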

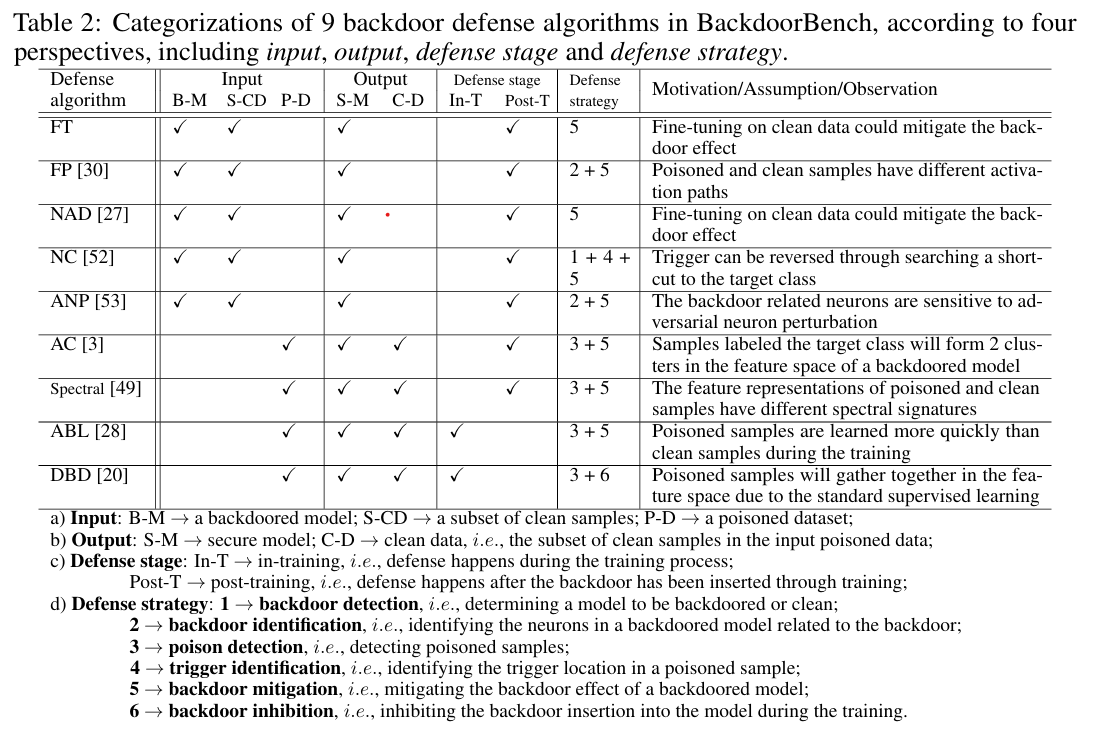

Based on the stage of the training procedure at which they act, existing defense methods can be partitioned into three categories: pre-training, in-training and post-training.

| Defense | File name | Paper |
|---|---|---|
| FT | ft.py | Standard fine-tuning |
| FP | fp.py | Fine-Pruning: Defending Against Backdooring Attacks on Deep Neural Networks, RAID 2018 |
| NAD | nad.py | Neural Attention Distillation: Erasing Backdoor Triggers From Deep Neural Networks, ICLR 2021 |
| NC | nc.py | Neural Cleanse: Identifying And Mitigating Backdoor Attacks In Neural Networks, IEEE S&P 2019 |
| ANP | anp.py | Adversarial Neuron Pruning Purifies Backdoored Deep Models, NeurIPS 2021 |
| AC | ac.py | Detecting Backdoor Attacks on Deep Neural Networks by Activation Clustering, CEUR-WS 2018 |
| Spectral | spectral.py | Spectral Signatures in Backdoor Attacks, NeurIPS 2018 |
| ABL | abl.py | Anti-Backdoor Learning: Training Clean Models on Poisoned Data, NeurIPS 2021 |
| DBD | dbd.py | Backdoor Defense Via Decoupling The Training Process, ICLR 2022 |

Pre-training: the defender aims to remove or break the poisoned samples before training.

In-training: the defender aims to inhibit backdoor injection during training.

- abl.py————ABL

- dbd.py————DBD
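As an in-training example, ABL relies on two ingredients described in its paper: a local gradient ascent (LGA) loss, sign(L - gamma) * L, which keeps per-sample losses from falling below a threshold gamma early in training, and isolation of the lowest-loss samples as suspected backdoor samples, which are later unlearned. A minimal NumPy sketch of both pieces on precomputed per-sample losses, not the code in abl.py; gamma = 0.5 and the isolation ratio are illustrative defaults, and the function names are hypothetical.

```python
import numpy as np


def lga_loss(losses, gamma=0.5):
    """ABL local gradient ascent: sign(L - gamma) * L per sample.
    Samples whose loss drops below gamma get a negated loss, so minimising
    the total pushes their loss back up towards gamma."""
    losses = np.asarray(losses, dtype=float)
    return np.sign(losses - gamma) * losses


def isolate_low_loss(losses, isolation_ratio=0.01):
    """Indices of the isolation_ratio fraction of samples with the lowest loss,
    treated as suspected backdoor samples (poisoned samples tend to be learned fastest)."""
    losses = np.asarray(losses, dtype=float)
    n = max(1, int(round(isolation_ratio * len(losses))))
    return np.argsort(losses)[:n]


print(lga_loss([0.1, 1.0], gamma=0.5))
print(isolate_low_loss([0.9, 0.01, 0.5, 0.02], isolation_ratio=0.5))
```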

Post-training: the defender aims to remove or mitigate the backdoor effect in a backdoored model.

- ft.py————FT

- fp.py————FP

- nad.py————NAD

- ac.py————AC

- nc.py————NC

- anp.py————ANP

- spectral.py————Spectral
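For the Spectral entry, the scoring step from the spectral signatures paper can be sketched as follows. Within each class, feature vectors are centered, projected onto the top singular vector, and the samples with the largest squared projections (1.5 * eps of the class, where eps is an assumed upper bound on the poisoning ratio) are flagged for removal before retraining. This assumes you already have a feature vector (e.g. penultimate-layer activations) for each training sample. It is a hypothetical sketch, not spectral.py's interface, and the synthetic data is illustrative only.

```python
import numpy as np


def spectral_scores(features):
    """Outlier score per sample: squared projection of the centered feature
    vector onto the top right singular vector of the class's feature matrix."""
    centered = features - features.mean(axis=0, keepdims=True)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return (centered @ vt[0]) ** 2


def spectral_filter(features, labels, eps=0.05):
    """Indices to remove: within each class, the int(1.5 * eps * n_class)
    samples with the highest spectral scores."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    removed = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        scores = spectral_scores(features[idx])
        n_remove = min(len(idx), int(1.5 * eps * len(idx)))
        if n_remove > 0:
            removed.extend(idx[np.argsort(scores)[-n_remove:]])
    return np.sort(np.array(removed, dtype=int))


# Synthetic features: two classes of 100 samples in 20 dimensions;
# samples 0-4 of class 0 are "poisoned" and shifted along one direction.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 20))
features[:5, 0] += 10.0
labels = np.array([0] * 100 + [1] * 100)
removed = spectral_filter(features, labels, eps=0.05)
```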

5 DNN models:

`models = ['preactresnet18', 'vgg19', "efficientnet_b3", "mobilenet_v3_large", "densenet161"]`

4 datasets:

cifar10, cifar100, gtsrb, tiny

Supported datasets:

mnist, cifar10, cifar100, gtsrb, celeba, tiny, imagenet

MNIST, CIFAR10 and CIFAR100 use the official PyTorch implementations and are downloaded automatically on first run. TinyImageNet uses a third-party implementation and is also downloaded on first run. The download script for GTSRB is in ./sh. CelebA and ImageNet must be downloaded manually; then set the dataset path argument accordingly.

The following is run in load_result.py:

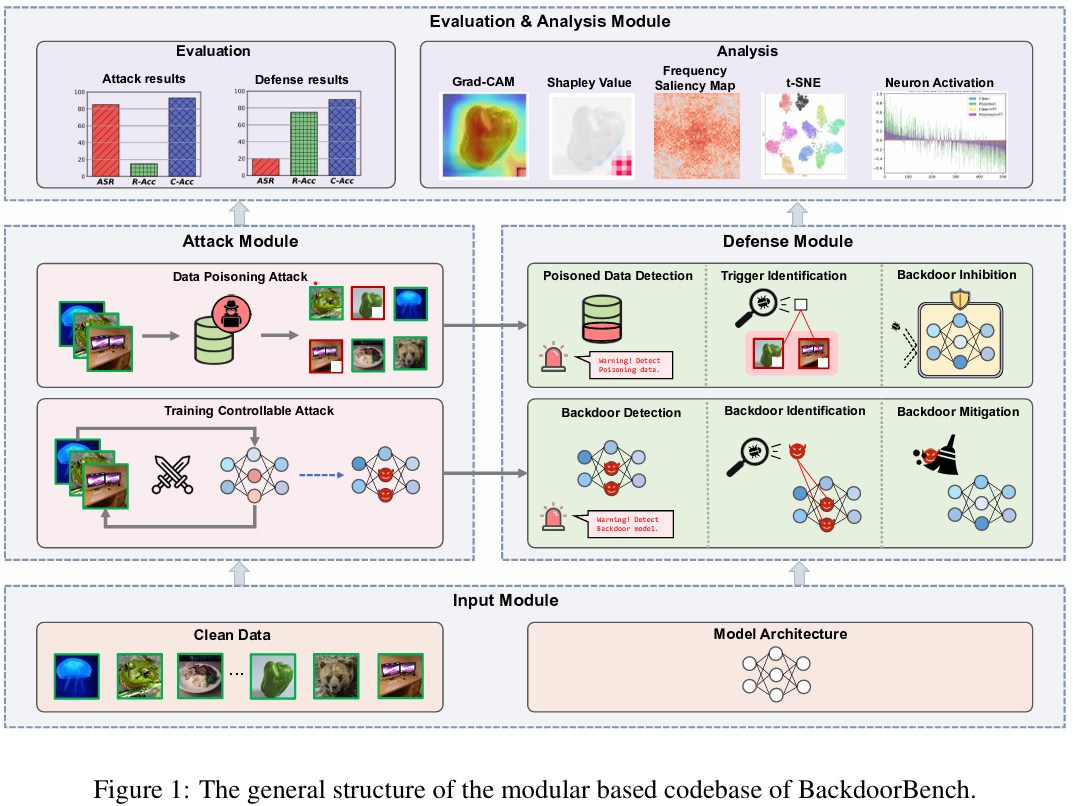

- clean accuracy (C-Acc): prediction accuracy on clean samples

- attack success rate (ASR): fraction of poisoned samples predicted as the target class

- robust accuracy (R-Acc): fraction of poisoned samples predicted as their original (ground-truth) class
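The three metrics above reduce to simple ratios over model predictions. A minimal NumPy sketch, assuming you already have predicted labels for a clean test set and for a poisoned test set, an all-to-one attack with a single target label, and that the poisoned test set keeps each sample's original label (in the all-to-one case, poisoned test sets usually exclude samples already in the target class). The function name `evaluate` is hypothetical, not load_result.py's API.

```python
import numpy as np


def evaluate(preds_clean, y_clean, preds_bd, y_bd_original, target_label):
    """Compute C-Acc, ASR and R-Acc from predicted labels."""
    preds_clean, y_clean = np.asarray(preds_clean), np.asarray(y_clean)
    preds_bd, y_bd_original = np.asarray(preds_bd), np.asarray(y_bd_original)
    return {
        # clean samples classified correctly
        "C-Acc": float(np.mean(preds_clean == y_clean)),
        # poisoned samples classified as the attacker's target class
        "ASR": float(np.mean(preds_bd == target_label)),
        # poisoned samples still classified as their original class
        "R-Acc": float(np.mean(preds_bd == y_bd_original)),
    }


res = evaluate(
    preds_clean=[1, 2, 3, 3], y_clean=[1, 2, 3, 4],
    preds_bd=[0, 0, 3, 0], y_bd_original=[1, 2, 3, 4],
    target_label=0,
)
print(res)
```

A successful defense should lower ASR while keeping C-Acc close to its original value. A high R-Acc additionally means the model recovers the correct label on triggered inputs instead of merely rejecting the target class.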

- t-SNE

- Grad-CAM

- shapley value map

- neuron activation

- frequency saliency

Frequency saliency is run in visualize_fre.py.

The other visualizations are run in Visualize.py.

- Apache : https://cn.linux-console.net/?p=14999

- A public IP address and a domain name

- Django needs to be installed

- If your server runs CentOS 7, you need to configure yum correctly first: https://zhuanlan.zhihu.com/p/719952763